
Elasticsearch plugins

By default, Elasticsearch plugins are installed in /usr/share/elasticsearch/plugins

[root@elastic-01 ~]# ll /usr/share/elasticsearch/plugins/

total 0

Plugins can be installed with the bundled elasticsearch-plugin command

[root@elastic-01 ~]# /usr/share/elasticsearch/bin/elasticsearch-plugin -h

A tool for managing installed elasticsearch plugins

Commands

--------

list - Lists installed elasticsearch plugins

install - Install a plugin

remove - removes a plugin from Elasticsearch

Non-option arguments:

command

Option Description

------ -----------

-E <KeyValuePair> Configure a setting

-h, --help Show help

-s, --silent Show minimal output

-v, --verbose Show verbose output

IK analyzer

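IK analyzer repository: https://github.com/medcl/elasticsearch-analysis-ik. The plugin release must match the Elasticsearch version exactly; this example installs 7.7.0.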

[root@elastic-01 ~]# /usr/share/elasticsearch/bin/elasticsearch-plugin install https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v7.7.0/elasticsearch-analysis-ik-7.7.0.zip

-> Installing https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v7.7.0/elasticsearch-analysis-ik-7.7.0.zip

-> Downloading https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v7.7.0/elasticsearch-analysis-ik-7.7.0.zip

[=================================================] 100%

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

@ WARNING: plugin requires additional permissions @

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

* java.net.SocketPermission * connect,resolve

See http://docs.oracle.com/javase/8/docs/technotes/guides/security/permissions.html

for descriptions of what these permissions allow and the associated risks.

Continue with installation? [y/N]y

-> Installed analysis-ik

[root@elastic-01 ~]# ll /usr/share/elasticsearch/plugins/

total 0

drwxr-xr-x 2 root root 229 Aug 02 14:35 analysis-ik

[root@elastic-01 ~]# ll /usr/share/elasticsearch/plugins/analysis-ik/

total 1428

-rw-r--r-- 1 root root 263965 Aug 02 14:35 commons-codec-1.9.jar

-rw-r--r-- 1 root root 61829 Aug 02 14:35 commons-logging-1.2.jar

-rw-r--r-- 1 root root 54599 Aug 02 14:35 elasticsearch-analysis-ik-7.7.0.jar

-rw-r--r-- 1 root root 736658 Aug 02 14:35 httpclient-4.5.2.jar

-rw-r--r-- 1 root root 326724 Aug 02 14:35 httpcore-4.4.4.jar

-rw-r--r-- 1 root root 1805 Aug 02 14:35 plugin-descriptor.properties

-rw-r--r-- 1 root root 125 Aug 02 14:35 plugin-security.policy

# Restart Elasticsearch after installing the plugin

[root@elastic-01 ~]# systemctl restart elasticsearch
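Elasticsearch only loads plugins built for its exact version, which is why the release above is pinned to 7.7.0. After a later Elasticsearch upgrade, the node will refuse to start until the plugin is reinstalled.

The minimal Python sketch below lets you check this ahead of time. It reads elasticsearch.version from the plugin-descriptor.properties file listed above and compares it with a version string you pass in, for example the version.number reported by GET /. The helper names are hypothetical. The properties parser is deliberately simplified: it handles no line continuations, no escapes and no ':' separators.

```python
from pathlib import Path


def read_properties(text: str) -> dict[str, str]:
    """Parse simple key=value lines, skipping blanks and #/! comments (simplified)."""
    props: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#!":
            continue
        key, sep, value = line.partition("=")
        if sep:  # lines without '=' are ignored by this simplified parser
            props[key.strip()] = value.strip()
    return props


def plugin_matches(descriptor_path: str, es_version: str) -> bool:
    """True if the plugin descriptor says it was built for exactly es_version."""
    text = Path(descriptor_path).read_text(encoding="utf-8")
    return read_properties(text).get("elasticsearch.version") == es_version
```

For this node, the call would be plugin_matches("/usr/share/elasticsearch/plugins/analysis-ik/plugin-descriptor.properties", "7.7.0").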

Testing the IK analyzer

All of the following requests are run in Kibana Dev Tools.

Call the analyzer directly to see how the text is tokenized.

The analyzer has two modes:

- ik_max_word: splits text at the finest granularity, exhausting every possible combination. For example, "中华人民共和国国歌" becomes "中华人民共和国, 中华人民, 中华, 华人, 人民共和国, 人民, 人, 民, 共和国, 共和, 和, 国国, 国歌". Suited to term queries.

- ik_smart: splits text at the coarsest granularity. For example, "中华人民共和国国歌" becomes "中华人民共和国, 国歌". Suited to phrase queries.
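The toy Python sketch below makes the difference between the two modes concrete. It is not IK's actual algorithm, which has its own segmenter and ambiguity handling. It assumes a tiny hypothetical dictionary and contrasts two simple strategies:

- emitting every dictionary word found anywhere in the text, which is close in spirit to ik_max_word;
- greedy forward maximum matching, which is close in spirit to ik_smart.

```python
# Toy illustration only: this is NOT IK's segmentation algorithm.
# TOY_DICT is a hypothetical mini dictionary made up for this example.
TOY_DICT = {
    "中华人民共和国", "中华人民", "中华", "华人", "人民共和国",
    "人民", "共和国", "共和", "国国", "国歌",
}


def all_words(text: str, vocab: set[str]) -> list[str]:
    """Emit every dictionary word at every start position (fine-grained)."""
    max_len = max(map(len, vocab))
    found = []
    for start in range(len(text)):
        # Try longer candidates first at each start position.
        for end in range(min(len(text), start + max_len), start, -1):
            if text[start:end] in vocab:
                found.append(text[start:end])
    return found


def forward_max_match(text: str, vocab: set[str]) -> list[str]:
    """Greedy longest match from left to right (coarse-grained)."""
    max_len = max(map(len, vocab))
    tokens, i = [], 0
    while i < len(text):
        for end in range(min(len(text), i + max_len), i, -1):
            if text[i:end] in vocab:
                tokens.append(text[i:end])
                i = end
                break
        else:
            # No dictionary word starts here: emit a single character.
            tokens.append(text[i])
            i += 1
    return tokens


if __name__ == "__main__":
    sample = "中华人民共和国国歌"
    print(all_words(sample, TOY_DICT))
    print(forward_max_match(sample, TOY_DICT))  # → ['中华人民共和国', '国歌']
```

With this toy dictionary, all_words returns the same multi-character words as the ik_max_word example. The toy dictionary has no single-character entries, so 人, 民 and 和 are missing. forward_max_match returns 中华人民共和国 and 国歌, like ik_smart.

Every coarse token here also appears among the fine-grained ones. That is one way to read the mapping used later in this post: index with ik_max_word, search with ik_smart.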

POST _analyze

{

"analyzer": "ik_smart",

"text": ["xadocker个人技术博客站点,记录自己运维生涯"]

}

The output:

{

"tokens" : [

{

"token" : "xadocker",

"start_offset" : 0,

"end_offset" : 8,

"type" : "ENGLISH",

"position" : 0

},

{

"token" : "个人",

"start_offset" : 8,

"end_offset" : 10,

"type" : "CN_WORD",

"position" : 1

},

{

"token" : "技术",

"start_offset" : 10,

"end_offset" : 12,

"type" : "CN_WORD",

"position" : 2

},

{

"token" : "博客",

"start_offset" : 12,

"end_offset" : 14,

"type" : "CN_WORD",

"position" : 3

},

{

"token" : "站点",

"start_offset" : 14,

"end_offset" : 16,

"type" : "CN_WORD",

"position" : 4

},

{

"token" : "记录",

"start_offset" : 17,

"end_offset" : 19,

"type" : "CN_WORD",

"position" : 5

},

{

"token" : "自己",

"start_offset" : 19,

"end_offset" : 21,

"type" : "CN_WORD",

"position" : 6

},

{

"token" : "运",

"start_offset" : 21,

"end_offset" : 22,

"type" : "CN_CHAR",

"position" : 7

},

{

"token" : "维",

"start_offset" : 22,

"end_offset" : 23,

"type" : "CN_CHAR",

"position" : 8

},

{

"token" : "生涯",

"start_offset" : 23,

"end_offset" : 25,

"type" : "CN_WORD",

"position" : 9

}

]

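}

The call above is ad hoc and stores no data. Next, a mapping is set when the index is created, so that a specific field is tokenized at indexing time.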
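Before moving on to mappings, note one detail of the _analyze output: start_offset and end_offset are positions in the original text. Each token should therefore equal the slice text[start_offset:end_offset].

The small Python sketch below checks this for a saved _analyze response; offset_mismatches is a hypothetical helper name. It makes two assumptions:

- It compares case-insensitively, in case the analyzer lowercases English words.
- It relies on Python string indices matching Elasticsearch's UTF-16 offsets. That holds only for characters in the Basic Multilingual Plane, such as the Chinese text here.

```python
import json


def offset_mismatches(text: str, response: dict) -> list[str]:
    """Return tokens whose [start_offset, end_offset) slice of text differs.

    Comparison is case-insensitive because the analyzer may lowercase
    English tokens. Python indexes by code point while Elasticsearch
    offsets count UTF-16 units, so this only lines up for BMP text.
    """
    bad = []
    for tok in response["tokens"]:
        piece = text[tok["start_offset"]:tok["end_offset"]]
        if piece.lower() != tok["token"].lower():
            bad.append(tok["token"])
    return bad


if __name__ == "__main__":
    text = "xadocker个人技术博客站点,记录自己运维生涯"
    # A few tokens copied from the _analyze output above.
    response = json.loads("""{"tokens": [
      {"token": "xadocker", "start_offset": 0, "end_offset": 8},
      {"token": "站点", "start_offset": 14, "end_offset": 16},
      {"token": "记录", "start_offset": 17, "end_offset": 19},
      {"token": "生涯", "start_offset": 23, "end_offset": 25}
    ]}""")
    print(offset_mismatches(text, response))  # → []
```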

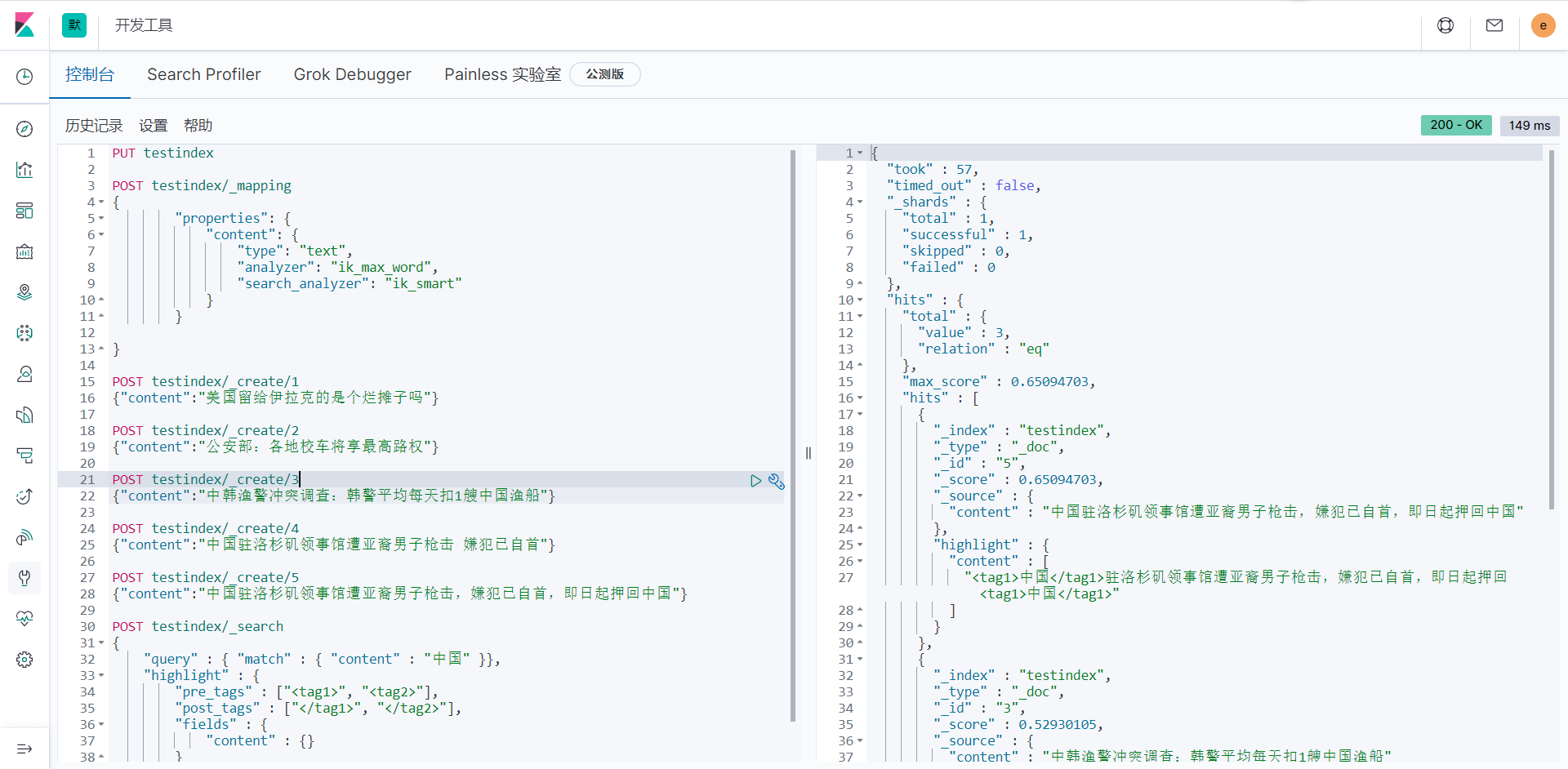

Create the index

PUT testindex

Create the mapping, applying the IK analyzers to the content field

POST testindex/_mapping

{

"properties": {

"content": {

"type": "text",

"analyzer": "ik_max_word",

"search_analyzer": "ik_smart"

}

}

}

Index some sample documents

POST testindex/_create/1

{"content":"美国留给伊拉克的是个烂摊子吗"}

POST testindex/_create/2

{"content":"公安部:各地校车将享最高路权"}

POST testindex/_create/3

{"content":"中韩渔警冲突调查:韩警平均每天扣1艘中国渔船"}

POST testindex/_create/4

{"content":"中国驻洛杉矶领事馆遭亚裔男子枪击 嫌犯已自首"}

POST testindex/_create/5

{"content":"中国驻洛杉矶领事馆遭亚裔男子枪击,嫌犯已自首,即日起押回中国"}

Test a search with highlighting

POST testindex/_search

{

"query" : { "match" : { "content" : "中国" }},

"highlight" : {

"pre_tags" : ["<tag1>", "<tag2>"],

"post_tags" : ["</tag1>", "</tag2>"],

"fields" : {

"content" : {}

}

}

}

Search result:

{

"took" : 57,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 3,

"relation" : "eq"

},

"max_score" : 0.65094703,

"hits" : [

{

"_index" : "testindex",

"_type" : "_doc",

"_id" : "5",

"_score" : 0.65094703,

"_source" : {

"content" : "中国驻洛杉矶领事馆遭亚裔男子枪击,嫌犯已自首,即日起押回中国"

},

"highlight" : {

"content" : [

"<tag1>中国</tag1>驻洛杉矶领事馆遭亚裔男子枪击,嫌犯已自首,即日起押回<tag1>中国</tag1>"

]

}

},

{

"_index" : "testindex",

"_type" : "_doc",

"_id" : "3",

"_score" : 0.52930105,

"_source" : {

"content" : "中韩渔警冲突调查:韩警平均每天扣1艘中国渔船"

},

"highlight" : {

"content" : [

"中韩渔警冲突调查:韩警平均每天扣1艘<tag1>中国</tag1>渔船"

]

}

},

{

"_index" : "testindex",

"_type" : "_doc",

"_id" : "4",

"_score" : 0.52930105,

"_source" : {

"content" : "中国驻洛杉矶领事馆遭亚裔男子枪击 嫌犯已自首"

},

"highlight" : {

"content" : [

"<tag1>中国</tag1>驻洛杉矶领事馆遭亚裔男子枪击 嫌犯已自首"

]

}

}

]

}

}

Custom dictionaries

To tokenize text by your own rules, you can create your own dictionary. First, look at the IK analyzer's dictionary configuration.

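# Note: after installing the IK analyzer, its config files live in /etc/elasticsearch/analysis-ik/,

# not in /usr/share/elasticsearch/plugins/analysis-ik/. I got this wrong at first: I created

# a config directory under /usr/share/elasticsearch/plugins/analysis-ik/ and configured it there,

# and it never took effect until I saw the following log lines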

[root@elastic-01 config]# cat /var/log/elasticsearch/elasticsearch.log | grep IKAnalyzer.cfg.xml

[2020-08-02T14:48:05,104][INFO ][o.w.a.d.Monitor ] [node-1] try load config from /etc/elasticsearch/analysis-ik/IKAnalyzer.cfg.xml

[2020-08-02T16:04:16,422][INFO ][o.w.a.d.Monitor ] [node-1] try load config from /etc/elasticsearch/analysis-ik/IKAnalyzer.cfg.xml

[2020-08-02T16:15:36,370][INFO ][o.w.a.d.Monitor ] [node-1] try load config from /etc/elasticsearch/analysis-ik/IKAnalyzer.cfg.xml

# IK analyzer config directory

[root@elastic-01 elasticsearch]# pwd

/etc/elasticsearch

[root@elastic-01 elasticsearch]# ll analysis-ik/

total 8264

-rw-rw---- 1 elasticsearch elasticsearch 5225922 Aug 02 14:35 extra_main.dic

-rw-rw---- 1 elasticsearch elasticsearch 63188 Aug 02 14:35 extra_single_word.dic

-rw-rw---- 1 elasticsearch elasticsearch 63188 Aug 02 14:35 extra_single_word_full.dic

-rw-rw---- 1 elasticsearch elasticsearch 10855 Aug 02 14:35 extra_single_word_low_freq.dic

-rw-rw---- 1 elasticsearch elasticsearch 156 Aug 02 14:35 extra_stopword.dic

-rw-rw---- 1 elasticsearch elasticsearch 634 Aug 02 16:23 IKAnalyzer.cfg.xml

-rw-rw---- 1 elasticsearch elasticsearch 3058510 Aug 02 14:35 main.dic

-rw-rw---- 1 elasticsearch elasticsearch 123 Aug 02 14:35 preposition.dic

-rw-rw---- 1 elasticsearch elasticsearch 1824 Aug 02 14:35 quantifier.dic

-rw-rw---- 1 elasticsearch elasticsearch 164 Aug 02 14:35 stopword.dic

-rw-rw---- 1 elasticsearch elasticsearch 192 Aug 02 14:35 suffix.dic

-rw-rw---- 1 elasticsearch elasticsearch 752 Aug 02 14:35 surname.dic

# Files ending in .dic are dictionaries, with one word per line

[root@elastic-01 elasticsearch]# wc -l analysis-ik/*.dic

398715 analysis-ik/extra_main.dic

12637 analysis-ik/extra_single_word.dic

12637 analysis-ik/extra_single_word_full.dic

2713 analysis-ik/extra_single_word_low_freq.dic

30 analysis-ik/extra_stopword.dic

275908 analysis-ik/main.dic

3 analysis-ik/mydic.dic

24 analysis-ik/preposition.dic

315 analysis-ik/quantifier.dic

32 analysis-ik/stopword.dic

36 analysis-ik/suffix.dic

130 analysis-ik/surname.dic

703180 total
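Because each .dic file is plain UTF-8 text with one word per line, it is easy to inspect from a script. The minimal Python sketch below reads such a file, skipping blank lines and a leading UTF-8 byte-order mark; load_dic is a hypothetical name.

The BOM matters if, as I assume, IK reads the file with a plain UTF-8 decoder. Java's decoder does not strip a BOM, so a file saved with one (as some Windows editors do) would likely end up with an invisible character glued to its first word.

```python
from pathlib import Path


def load_dic(path) -> list[str]:
    """Read an IK-style .dic file: UTF-8 text, one word per line.

    'utf-8-sig' drops a leading byte-order mark if one is present, and
    blank lines are skipped.
    """
    text = Path(path).read_text(encoding="utf-8-sig")
    return [line.strip() for line in text.splitlines() if line.strip()]
```

len(load_dic("/etc/elasticsearch/analysis-ik/mydic.dic")) counts words. This can differ from wc -l, which counts newline characters. A file without a trailing newline, or with blank lines, will not match.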

Configuring your own dictionary

Create a test dictionary

[root@elastic-01 elasticsearch]# cat analysis-ik/mydic.dic

川普

川建国

建国同志

Configure IK to load the custom dictionary

[root@elastic-01 elasticsearch]# cat /etc/elasticsearch/analysis-ik/IKAnalyzer.cfg.xml

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE properties SYSTEM "http://java.sun.com/dtd/properties.dtd">

<properties>

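<comment>IK Analyzer extension config</comment>

<!-- Users can configure their own extension dictionary here -->

<entry key="ext_dict">mydic.dic</entry>

<!-- Users can configure their own extension stopword dictionary here -->

<entry key="ext_stopwords"></entry>

<!-- Users can configure a remote extension dictionary here -->

<!-- <entry key="remote_ext_dict">words_location</entry> -->

<!-- Users can configure a remote extension stopword dictionary here -->

<!-- <entry key="remote_ext_stopwords">words_location</entry> -->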

</properties>
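A config that silently does nothing is easy to miss, as the note about the config directory shows. It can help to print what IKAnalyzer.cfg.xml actually declares. The Python sketch below uses only the standard library; read_ik_config is a hypothetical name.

Splitting a value on ';' reflects my assumption that IK accepts several files per key separated by semicolons. Check the plugin's README for your version.

```python
import xml.etree.ElementTree as ET


def read_ik_config(xml_bytes: bytes) -> dict[str, list[str]]:
    """Map each <entry key=...> in IKAnalyzer.cfg.xml to a list of values.

    Commented-out entries are ignored by the XML parser, and empty entries
    map to []. Splitting on ';' assumes IK accepts several files per key
    separated by semicolons.
    """
    root = ET.fromstring(xml_bytes)
    config: dict[str, list[str]] = {}
    for entry in root.iter("entry"):
        raw = (entry.text or "").strip()
        config[entry.get("key")] = [p.strip() for p in raw.split(";") if p.strip()]
    return config
```

For the file above, read_ik_config(Path("/etc/elasticsearch/analysis-ik/IKAnalyzer.cfg.xml").read_bytes()) (with pathlib's Path) should report ext_dict as ['mydic.dic'] and ext_stopwords as an empty list.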

Restart the service, then test

Test request

POST _analyze

{

"analyzer": "ik_smart",

"text": ["trump,一个远在异国的建国同志川普,国内亲切称其为川建国"]

}

Result without the custom dictionary:

{

"tokens" : [

{

"token" : "trump",

"start_offset" : 0,

"end_offset" : 5,

"type" : "ENGLISH",

"position" : 0

},

{

"token" : "一个",

"start_offset" : 6,

"end_offset" : 8,

"type" : "CN_WORD",

"position" : 1

},

{

"token" : "远在",

"start_offset" : 8,

"end_offset" : 10,

"type" : "CN_WORD",

"position" : 2

},

{

"token" : "异国",

"start_offset" : 10,

"end_offset" : 12,

"type" : "CN_WORD",

"position" : 3

},

{

"token" : "的",

"start_offset" : 12,

"end_offset" : 13,

"type" : "CN_CHAR",

"position" : 4

},

{

"token" : "建国",

"start_offset" : 13,

"end_offset" : 15,

"type" : "CN_WORD",

"position" : 5

},

{

"token" : "同志",

"start_offset" : 15,

"end_offset" : 17,

"type" : "CN_WORD",

"position" : 6

},

{

"token" : "川",

"start_offset" : 17,

"end_offset" : 18,

"type" : "CN_CHAR",

"position" : 7

},

{

"token" : "普",

"start_offset" : 18,

"end_offset" : 19,

"type" : "CN_CHAR",

"position" : 8

},

{

"token" : "国内",

"start_offset" : 20,

"end_offset" : 22,

"type" : "CN_WORD",

"position" : 9

},

{

"token" : "亲切",

"start_offset" : 22,

"end_offset" : 24,

"type" : "CN_WORD",

"position" : 10

},

{

"token" : "称",

"start_offset" : 24,

"end_offset" : 25,

"type" : "CN_CHAR",

"position" : 11

},

{

"token" : "其为",

"start_offset" : 25,

"end_offset" : 27,

"type" : "CN_WORD",

"position" : 12

},

{

"token" : "川",

"start_offset" : 27,

"end_offset" : 28,

"type" : "CN_CHAR",

"position" : 13

},

{

"token" : "建国",

"start_offset" : 28,

"end_offset" : 30,

"type" : "CN_WORD",

"position" : 14

}

]

}

Result with the custom dictionary:

{

"tokens" : [

{

"token" : "trump",

"start_offset" : 0,

"end_offset" : 5,

"type" : "ENGLISH",

"position" : 0

},

{

"token" : "一个",

"start_offset" : 6,

"end_offset" : 8,

"type" : "CN_WORD",

"position" : 1

},

{

"token" : "远在",

"start_offset" : 8,

"end_offset" : 10,

"type" : "CN_WORD",

"position" : 2

},

{

"token" : "异国",

"start_offset" : 10,

"end_offset" : 12,

"type" : "CN_WORD",

"position" : 3

},

{

"token" : "的",

"start_offset" : 12,

"end_offset" : 13,

"type" : "CN_CHAR",

"position" : 4

},

{

"token" : "建国同志",

"start_offset" : 13,

"end_offset" : 17,

"type" : "CN_WORD",

"position" : 5

},

{

"token" : "川普",

"start_offset" : 17,

"end_offset" : 19,

"type" : "CN_WORD",

"position" : 6

},

{

"token" : "国内",

"start_offset" : 20,

"end_offset" : 22,

"type" : "CN_WORD",

"position" : 7

},

{

"token" : "亲切",

"start_offset" : 22,

"end_offset" : 24,

"type" : "CN_WORD",

"position" : 8

},

{

"token" : "称",

"start_offset" : 24,

"end_offset" : 25,

"type" : "CN_CHAR",

"position" : 9

},

{

"token" : "其为",

"start_offset" : 25,

"end_offset" : 27,

"type" : "CN_WORD",

"position" : 10

},

{

"token" : "川建国",

"start_offset" : 27,

"end_offset" : 30,

"type" : "CN_WORD",

"position" : 11

}

]

}

IK analyzer: hot-reloading dictionaries

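With the static dictionary described above, every dictionary update requires restarting Elasticsearch, which is impractical. Here is how to update the dictionary dynamically.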

Use the following settings from the IK config file shown above:

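<!-- Users can configure a remote extension dictionary here -->

<entry key="remote_ext_dict">location</entry>

<!-- Users can configure a remote extension stopword dictionary here -->

<entry key="remote_ext_stopwords">location</entry>

Here location is a URL, for example http://yoursite.com/getCustomDict. The request only needs to meet the following two requirements for hot reloading to work: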

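- The HTTP response must return two headers, Last-Modified and ETag. Both are strings. Whenever either one changes, the plugin fetches the new words and updates its dictionary.

- The response body must contain one word per line, separated by \n.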

Once both requirements are met, the dictionary is hot-reloaded without restarting the ES instance.

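You can put the hot words in a UTF-8 encoded .txt file served by nginx or another simple HTTP server. When the file changes, the server automatically returns updated Last-Modified and ETag headers the next time a client requests it. A separate tool can extract relevant terms from your business systems and update that .txt file.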
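The two requirements boil down to a simple rule:

- remember the Last-Modified and ETag values from the previous poll;
- refetch when either value differs;
- split the body on \n.

The Python sketch below only illustrates that rule as described above. It is not the plugin's Java implementation, and DictVersion, needs_reload and parse_words are hypothetical names. The polling interval is not modeled.

```python
from dataclasses import dataclass
from typing import Mapping, Optional, Tuple


@dataclass(frozen=True)
class DictVersion:
    """The two validators the remote dictionary must expose."""
    last_modified: Optional[str]
    etag: Optional[str]


def needs_reload(previous: Optional[DictVersion],
                 headers: Mapping[str, str]) -> Tuple[bool, DictVersion]:
    """Compare fresh response headers with those seen on the last poll."""
    # HTTP header names are case-insensitive, so normalize them first.
    lowered = {k.lower(): v for k, v in headers.items()}
    current = DictVersion(lowered.get("last-modified"), lowered.get("etag"))
    if previous is None:
        return True, current  # first poll: nothing to compare against yet
    changed = (current.last_modified != previous.last_modified
               or current.etag != previous.etag)
    return changed, current


def parse_words(body: bytes) -> list[str]:
    """Split a UTF-8 body into one word per line, ignoring blank lines."""
    lines = body.decode("utf-8").split("\n")
    # strip() also removes a trailing '\r' from CRLF files.
    return [w.strip() for w in lines if w.strip()]
```

Requiring a change in only one of the two headers matches the quoted rule: a server that updates just one of them still triggers a reload.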

The above is taken from the official README. Here, a static nginx site is enough to serve the dictionary.

yum install nginx -y

cat >/etc/nginx/conf.d/ik.xadocker.cn.conf<<-'EOF'

server {

listen 80;

listen [::]:80;

server_name ik.xadocker.cn;

location / {

root /usr/share/nginx/www;

index index.html;

}

}

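EOF

Then place the custom dictionary in /usr/share/nginx/www. The hostname ik.xadocker.cn must resolve to this server (for example via DNS or an /etc/hosts entry) so that both curl and Elasticsearch can reach it.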

[root@elastic-01 nginx]# mkdir -p /usr/share/nginx/www

[root@elastic-01 nginx]# cp /etc/elasticsearch/analysis-ik/mydic.dic /usr/share/nginx/www

[root@elastic-01 nginx]# nginx -t

[root@elastic-01 nginx]# systemctl restart nginx

[root@elastic-01 nginx]# curl ik.xadocker.cn/mydic.dic

川普

川建国

建国同志
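curl shows the body, but IK also depends on the two headers, so it is worth confirming the endpoint returns both before pointing IK at it. The minimal Python sketch below sends a HEAD request and lists which of Last-Modified and ETag are missing; missing_reload_headers is a hypothetical name.

nginx sends both headers for static files by default. Python's built-in http.server, by contrast, sends Last-Modified but no ETag, so it would not meet the first requirement above.

```python
import urllib.request

REQUIRED_HEADERS = ("Last-Modified", "ETag")


def missing_reload_headers(url: str, timeout: float = 5.0) -> list[str]:
    """HEAD the dictionary URL and list which required headers are absent."""
    request = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # response.headers lookups are case-insensitive
        return [name for name in REQUIRED_HEADERS
                if response.headers.get(name) is None]
```

Against the nginx site above, missing_reload_headers("http://ik.xadocker.cn/mydic.dic") should return an empty list.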

Update the IK config and restart Elasticsearch

[root@elastic-01 nginx]# cat >/etc/elasticsearch/analysis-ik/IKAnalyzer.cfg.xml<<-'EOF'

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE properties SYSTEM "http://java.sun.com/dtd/properties.dtd">

<properties>

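<comment>IK Analyzer extension config</comment>

<!-- Users can configure their own extension dictionary here -->

<entry key="ext_dict"></entry>

<!-- Users can configure their own extension stopword dictionary here -->

<entry key="ext_stopwords"></entry>

<!-- Users can configure a remote extension dictionary here -->

<entry key="remote_ext_dict">http://ik.xadocker.cn/mydic.dic</entry>

<!-- Users can configure a remote extension stopword dictionary here -->

<!-- <entry key="remote_ext_stopwords">words_location</entry> -->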

</properties>

EOF

[root@elastic-01 nginx]# systemctl restart elasticsearch.service

Test the result

POST _analyze

{

"analyzer": "ik_smart",

"text": ["trump,一个远在异国的建国同志川普,国内亲切称其为川建国,可真是奥里给"]

}

The tokens are:

{

"tokens" : [

{

"token" : "trump",

"start_offset" : 0,

"end_offset" : 5,

"type" : "ENGLISH",

"position" : 0

},

{

"token" : "一个",

"start_offset" : 6,

"end_offset" : 8,

"type" : "CN_WORD",

"position" : 1

},

{

"token" : "远在",

"start_offset" : 8,

"end_offset" : 10,

"type" : "CN_WORD",

"position" : 2

},

{

"token" : "异国",

"start_offset" : 10,

"end_offset" : 12,

"type" : "CN_WORD",

"position" : 3

},

{

"token" : "的",

"start_offset" : 12,

"end_offset" : 13,

"type" : "CN_CHAR",

"position" : 4

},

{

"token" : "建国同志",

"start_offset" : 13,

"end_offset" : 17,

"type" : "CN_WORD",

"position" : 5

},

{

"token" : "川普",

"start_offset" : 17,

"end_offset" : 19,

"type" : "CN_WORD",

"position" : 6

},

{

"token" : "国内",

"start_offset" : 20,

"end_offset" : 22,

"type" : "CN_WORD",

"position" : 7

},

{

"token" : "亲切",

"start_offset" : 22,

"end_offset" : 24,

"type" : "CN_WORD",

"position" : 8

},

{

"token" : "称",

"start_offset" : 24,

"end_offset" : 25,

"type" : "CN_CHAR",

"position" : 9

},

{

"token" : "其为",

"start_offset" : 25,

"end_offset" : 27,

"type" : "CN_WORD",

"position" : 10

},

{

"token" : "川建国",

"start_offset" : 27,

"end_offset" : 30,

"type" : "CN_WORD",

"position" : 11

},

{

"token" : "可真是",

"start_offset" : 31,

"end_offset" : 34,

"type" : "CN_WORD",

"position" : 12

},

{

"token" : "奥",

"start_offset" : 34,

"end_offset" : 35,

"type" : "CN_CHAR",

"position" : 13

},

{

"token" : "里",

"start_offset" : 35,

"end_offset" : 36,

"type" : "CN_CHAR",

"position" : 14

},

{

"token" : "给",

"start_offset" : 36,

"end_offset" : 37,

"type" : "CN_CHAR",

"position" : 15

}

]

}

Now add a new word, 奥里给, to the dictionary

[root@elastic-01 nginx]# cat /usr/share/nginx/www/mydic.dic

川普

川建国

建国同志

奥里给
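When a tool (as suggested above) updates the served file automatically, it is safer to write a complete new file and swap it in than to edit the file in place. That way, a poll never sees a half-written dictionary.

The sketch below uses a hypothetical add_words helper. The temporary file is created in the same directory because os.replace is atomic on POSIX only when source and destination are on the same filesystem. The sketch also resets permissions, because tempfile.mkstemp creates files readable only by their owner.

```python
import os
import tempfile
from pathlib import Path


def add_words(dic_path, new_words) -> list[str]:
    """Append unseen words to a one-word-per-line dictionary file atomically.

    Returns the words actually added. The file is only rewritten when
    something changed, so its mtime (and nginx's Last-Modified/ETag) stay
    the same otherwise.
    """
    path = Path(dic_path)
    existing = []
    if path.exists():
        existing = [w.strip() for w in path.read_text(encoding="utf-8").splitlines() if w.strip()]
    present = set(existing)
    added = []
    for word in new_words:
        word = word.strip()
        if word and word not in present:
            present.add(word)
            added.append(word)
    if not added:
        return []
    # Temp file in the same directory so os.replace() stays on one filesystem.
    fd, tmp = tempfile.mkstemp(dir=path.parent, prefix=".", suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as f:
            f.write("\n".join(existing + added) + "\n")
        os.chmod(tmp, 0o644)  # mkstemp creates 0600; the nginx worker must be able to read it
        os.replace(tmp, path)  # atomic swap on POSIX
    except BaseException:
        os.unlink(tmp)
        raise
    return added
```

For the step above, add_words("/usr/share/nginx/www/mydic.dic", ["奥里给"]) would produce the same file contents.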

No restart is needed this time. Once the plugin picks up the change, the result is:

{

"tokens" : [

{

"token" : "trump",

"start_offset" : 0,

"end_offset" : 5,

"type" : "ENGLISH",

"position" : 0

},

{

"token" : "一个",

"start_offset" : 6,

"end_offset" : 8,

"type" : "CN_WORD",

"position" : 1

},

{

"token" : "远在",

"start_offset" : 8,

"end_offset" : 10,

"type" : "CN_WORD",

"position" : 2

},

{

"token" : "异国",

"start_offset" : 10,

"end_offset" : 12,

"type" : "CN_WORD",

"position" : 3

},

{

"token" : "的",

"start_offset" : 12,

"end_offset" : 13,

"type" : "CN_CHAR",

"position" : 4

},

{

"token" : "建国同志",

"start_offset" : 13,

"end_offset" : 17,

"type" : "CN_WORD",

"position" : 5

},

{

"token" : "川普",

"start_offset" : 17,

"end_offset" : 19,

"type" : "CN_WORD",

"position" : 6

},

{

"token" : "国内",

"start_offset" : 20,

"end_offset" : 22,

"type" : "CN_WORD",

"position" : 7

},

{

"token" : "亲切",

"start_offset" : 22,

"end_offset" : 24,

"type" : "CN_WORD",

"position" : 8

},

{

"token" : "称",

"start_offset" : 24,

"end_offset" : 25,

"type" : "CN_CHAR",

"position" : 9

},

{

"token" : "其为",

"start_offset" : 25,

"end_offset" : 27,

"type" : "CN_WORD",

"position" : 10

},

{

"token" : "川建国",

"start_offset" : 27,

"end_offset" : 30,

"type" : "CN_WORD",

"position" : 11

},

{

"token" : "可真是",

"start_offset" : 31,

"end_offset" : 34,

"type" : "CN_WORD",

"position" : 12

},

{

"token" : "奥里给",

"start_offset" : 34,

"end_offset" : 37,

"type" : "CN_WORD",

"position" : 13

}

]

}

The hot reload shows up in the Elasticsearch log:

[2020-08-02T17:05:00,169][INFO ][o.w.a.d.Monitor ] [node-1] start to reload ik dict.

[2020-08-02T17:05:00,170][INFO ][o.w.a.d.Monitor ] [node-1] try load config from /etc/elasticsearch/analysis-ik/IKAnalyzer.cfg.xml

[2020-08-02T17:05:00,310][INFO ][o.w.a.d.Monitor ] [node-1] [Dict Loading] http://ik.xadocker.cn/mydic.dic

[2020-08-02T17:05:00,314][INFO ][o.w.a.d.Monitor ] [node-1] 川普

[2020-08-02T17:05:00,314][INFO ][o.w.a.d.Monitor ] [node-1] 川建国

[2020-08-02T17:05:00,314][INFO ][o.w.a.d.Monitor ] [node-1] 建国同志

[2020-08-02T17:05:00,315][INFO ][o.w.a.d.Monitor ] [node-1] reload ik dict finished.

[2020-08-02T17:18:00,168][INFO ][o.w.a.d.Monitor ] [node-1] start to reload ik dict.

[2020-08-02T17:18:00,168][INFO ][o.w.a.d.Monitor ] [node-1] try load config from /etc/elasticsearch/analysis-ik/IKAnalyzer.cfg.xml

[2020-08-02T17:18:00,298][INFO ][o.w.a.d.Monitor ] [node-1] [Dict Loading] http://ik.xadocker.cn/mydic.dic

[2020-08-02T17:18:00,302][INFO ][o.w.a.d.Monitor ] [node-1] 川普

[2020-08-02T17:18:00,302][INFO ][o.w.a.d.Monitor ] [node-1] 川建国

[2020-08-02T17:18:00,302][INFO ][o.w.a.d.Monitor ] [node-1] 建国同志

[2020-08-02T17:18:00,303][INFO ][o.w.a.d.Monitor ] [node-1] 奥里给

[2020-08-02T17:18:00,303][INFO ][o.w.a.d.Monitor ] [node-1] reload ik dict finished.
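The Monitor lines can be grouped per reload, so you can see which words each reload picked up without reading the log by eye.

The Python sketch below assumes the exact line format shown above. This is not a documented format and may differ between IK versions; parse_reloads is a hypothetical name. Within a reload, it treats every message other than the config and [Dict Loading] lines as a loaded word, which holds for the log above.

```python
import re

# Matches lines such as:
# [2020-08-02T17:18:00,302][INFO ][o.w.a.d.Monitor ] [node-1] 川普
MONITOR_LINE = re.compile(
    r"^\[(?P<ts>[^\]]+)\]\[INFO\s*\]\[o\.w\.a\.d\.Monitor\s*\]\s*\[[^\]]+\]\s*(?P<msg>.*)$"
)
DICT_LOADING = "[Dict Loading] "


def parse_reloads(lines):
    """Group IK Monitor log lines into one dict per reload cycle."""
    reloads, current = [], None
    for raw in lines:
        match = MONITOR_LINE.match(raw.strip())
        if not match:
            continue  # not a Monitor INFO line
        msg = match.group("msg")
        if msg == "start to reload ik dict.":
            current = {"started": match.group("ts"), "sources": [], "words": []}
        elif current is None:
            continue  # line outside a reload cycle
        elif msg == "reload ik dict finished.":
            reloads.append(current)
            current = None
        elif msg.startswith(DICT_LOADING):
            current["sources"].append(msg[len(DICT_LOADING):])
        elif not msg.startswith("try load config from "):
            current["words"].append(msg)  # assumption: any other message is a loaded word
    return reloads
```

A usage example: `grep o.w.a.d.Monitor /var/log/elasticsearch/elasticsearch.log` can produce the input lines.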
