
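When I first started learning k8s years ago, its autoscaling mechanism was one of the two features that drew me in; the other was auto-discovery. Before I had a k8s platform, I used Zabbix threshold triggers to call Python scripts that handled resource creation, service onboarding and so on.

HPA in k8s

HPA (HorizontalPodAutoscaler) in k8s scales a workload horizontally, that is, it changes the number of pods of the target object. The target is usually a Deployment or a StatefulSet; some objects, such as DaemonSets, cannot be scaled this way.

Basic usage

Create a sample service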

[root@k8s-master hpa]# cat php-apache.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: php-apache
spec:
  selector:
    matchLabels:
      run: php-apache
  replicas: 1
  template:
    metadata:
      labels:
        run: php-apache
    spec:
      containers:
      - name: php-apache
        image: registry.k8s.io/hpa-example
        ports:
        - containerPort: 80
        resources:
          limits:
            cpu: 500m
          requests:
            cpu: 200m
---
apiVersion: v1
kind: Service
metadata:
  name: php-apache
  labels:
    run: php-apache
spec:
  ports:
  - port: 80
  selector:
    run: php-apache

Create an HPA for the service

[root@k8s-master hpa]# kubectl autoscale deployment php-apache --cpu-percent=10 --min=1 --max=10

Check the HPA status

[root@k8s-master ~]# kubectl describe hpa php-apache

####### (omitted)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedComputeMetricsReplicas 22m (x12 over 24m) horizontal-pod-autoscaler invalid metrics (1 invalid out of 1), first error is: failed to get cpu utilization: unable to get metrics for resource cpu: no metrics returned from resource metrics API

Warning FailedGetResourceMetric 9m48s (x58 over 24m) horizontal-pod-autoscaler unable to get metrics for resource cpu: no metrics returned from resource metrics API

Warning FailedGetResourceMetric 4m56s (x19 over 9m32s) horizontal-pod-autoscaler unable to get metrics for resource cpu: unable to fetch metrics from resource metrics API: the server could not find the requested resource (get pods.metrics.k8s.io)

As you can see, fetching the metrics failed here because metrics-server is not deployed in my cluster. By default the HPA pulls its data from metrics-server, so it has to be deployed first.

Deploy metrics-server

[root@k8s-master ~]# wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

# Edit components.yaml and add one line: - --kubelet-insecure-tls

[root@k8s-master ~]# vim components.yaml

####### (omitted)
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls
####### (omitted)

# Start metrics-server

[root@k8s-master ~]# kubectl apply -f components.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader configured

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

# Test it with kubectl top

[root@k8s-master ~]# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master 827m 10% 3530Mi 45%

[root@k8s-master ~]# kubectl top pods -n kube-system

NAME CPU(cores) MEMORY(bytes)

calico-kube-controllers-5b8b769fcd-8hlzn 2m 11Mi

calico-node-fwcss 84m 53Mi

coredns-65556b4c97-dhkz4 6m 19Mi

etcd-k8s-master 54m 212Mi

kube-apiserver-k8s-master 135m 647Mi

kube-controller-manager-k8s-master 50m 68Mi

kube-proxy-hftdw 1m 14Mi

kube-scheduler-k8s-master 6m 19Mi

metrics-server-86499f7fd8-pdw6d 6m 15Mi

nfs-client-provisioner-df46b8d64-jwgd4 4m 10Mi

Now check the HPA status again

[root@k8s-master ~]# kubectl get hpa php-apache

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

php-apache Deployment/php-apache 0%/10% 1 10 1 38m

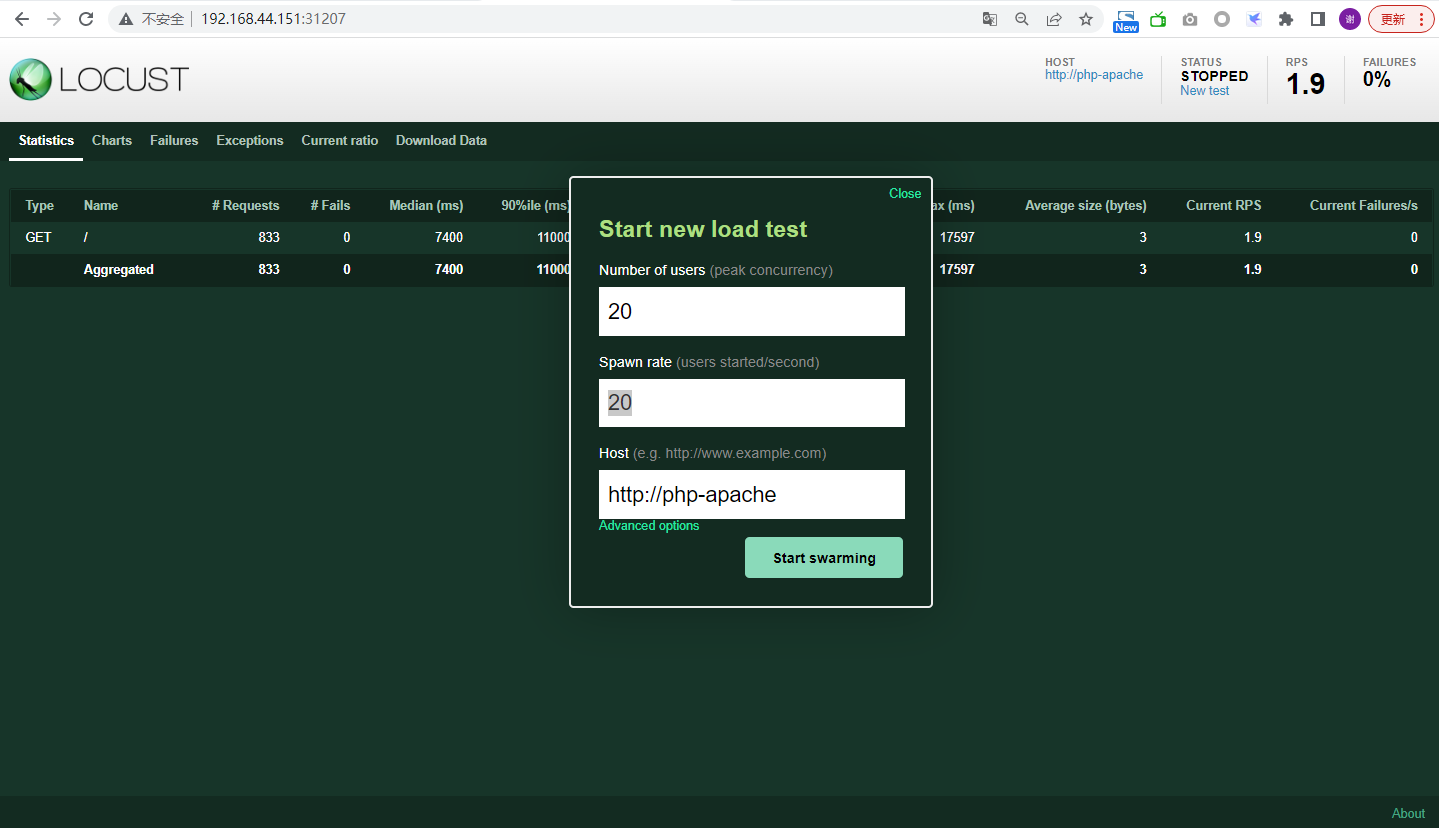

Simulating scale-up and scale-down with a load test

Deploy Locust

[root@k8s-master ~]# cat locust/locust.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: locust-script
data:
  locustfile.py: |-
    from locust import HttpUser, task, between

    class QuickstartUser(HttpUser):
        wait_time = between(0.7, 1.3)

        @task
        def hello_world(self):
            self.client.get("/")
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: locust
spec:
  selector:
    matchLabels:
      app: locust
  template:
    metadata:
      labels:
        app: locust
    spec:
      containers:
      - name: locust
        image: locustio/locust
        ports:
        - containerPort: 8089
        volumeMounts:
        - mountPath: /home/locust
          name: locust-script
      volumes:
      - name: locust-script
        configMap:
          name: locust-script
---
apiVersion: v1
kind: Service
metadata:
  name: locust
spec:
  ports:
  - port: 8089
    targetPort: 8089
  selector:
    app: locust
  type: NodePort

[root@k8s-master ~]# kubectl apply -f locust/locust.yaml

[root@k8s-master ~]# kubectl get svc locust

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

locust NodePort 10.96.221.85 <none> 8089:31207/TCP 18h

Check how the HPA has scaled the service at this point

# Even a light load is enough to trigger a scale-up...

[root@k8s-master ~]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

php-apache Deployment/php-apache 25%/10% 1 10 10 50m

[root@k8s-master ~]# kubectl describe hpa php-apache

Name: php-apache

Namespace: default

Labels: <none>

Annotations: <none>

CreationTimestamp: Mon, 08 Nov 2021 19:06:10 +0800

Reference: Deployment/php-apache

Metrics: ( current / target )

resource cpu on pods (as a percentage of request): 25% (51m) / 10%

Min replicas: 1

Max replicas: 10

Deployment pods: 10 current / 10 desired

Conditions:

Type Status Reason Message

---- ------ ------ -------

AbleToScale True ReadyForNewScale recommended size matches current size

ScalingActive True ValidMetricFound the HPA was able to successfully calculate a replica count from cpu resource utilization (percentage of request)

ScalingLimited True TooManyReplicas the desired replica count is more than the maximum replica count

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedComputeMetricsReplicas 44m (x12 over 47m) horizontal-pod-autoscaler invalid metrics (1 invalid out of 1), first error is: failed to get cpu utilization: unable to get metrics for resource cpu: no metrics returned from resource metrics API

Warning FailedGetResourceMetric 32m (x58 over 47m) horizontal-pod-autoscaler unable to get metrics for resource cpu: no metrics returned from resource metrics API

Warning FailedGetResourceMetric 27m (x19 over 32m) horizontal-pod-autoscaler unable to get metrics for resource cpu: unable to fetch metrics from resource metrics API: the server could not find the requested resource (get pods.metrics.k8s.io)

Normal SuccessfulRescale 3m49s horizontal-pod-autoscaler New size: 2; reason: cpu resource utilization (percentage of request) above target

Normal SuccessfulRescale 3m33s horizontal-pod-autoscaler New size: 4; reason: cpu resource utilization (percentage of request) above target

Normal SuccessfulRescale 3m18s horizontal-pod-autoscaler New size: 8; reason: cpu resource utilization (percentage of request) above target

Normal SuccessfulRescale 3m2s horizontal-pod-autoscaler New size: 10; reason: cpu resource utilization (percentage of request) above target
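The 25%/10% reading above can be related to the replica count through the formula given in the Kubernetes documentation: desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). Below is a minimal Python sketch of that rule, not the controller's actual code. The function name and parameters are made up for illustration, the 0.1 tolerance assumes the controller default, and details such as not-ready pods, missing metrics and scale-up rate limits are ignored.

```python
import math


def desired_replicas(current_replicas, current_value, target_value,
                     min_replicas, max_replicas, tolerance=0.1):
    """Approximate the HPA replica calculation for a single metric."""
    ratio = current_value / target_value
    # Inside the tolerance band the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # The result is always clamped to the HPA's min/max bounds.
    return max(min_replicas, min(max_replicas, desired))


# Values from the output above: 10 pods averaging 25% against a 10% target.
print(desired_replicas(10, 25, 10, min_replicas=1, max_replicas=10))
```

With these inputs the unclamped result is 25, which is why the HPA reports ScalingLimited / TooManyReplicas and stays at the maximum of 10.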

[root@k8s-master ~]# kubectl get event

####### (omitted)

15m Normal SuccessfulRescale horizontalpodautoscaler/php-apache New size: 2; reason: cpu resource utilization (percentage of request) above target

15m Normal ScalingReplicaSet deployment/php-apache Scaled up replica set php-apache-84497cc9dd to 2

14m Normal SuccessfulRescale horizontalpodautoscaler/php-apache New size: 4; reason: cpu resource utilization (percentage of request) above target

14m Normal ScalingReplicaSet deployment/php-apache Scaled up replica set php-apache-84497cc9dd to 4

14m Normal SuccessfulRescale horizontalpodautoscaler/php-apache New size: 8; reason: cpu resource utilization (percentage of request) above target

14m Normal ScalingReplicaSet deployment/php-apache Scaled up replica set php-apache-84497cc9dd to 8

14m Normal SuccessfulRescale horizontalpodautoscaler/php-apache New size: 10; reason: cpu resource utilization (percentage of request) above target

14m Normal ScalingReplicaSet deployment/php-apache Scaled up replica set php-apache-84497cc9dd to 10

[root@k8s-master ~]# kubectl get deploy php-apache

NAME READY UP-TO-DATE AVAILABLE AGE

php-apache 10/10 10 10 52m

Scale-down test

After stopping the Locust load test

[root@k8s-master ~]# kubectl get hpa php-apache

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

php-apache Deployment/php-apache 0%/10% 1 10 1 56m

# View the HPA scale-down events

[root@k8s-master ~]# kubectl describe hpa php-apache

Name: php-apache

Namespace: default

Labels: <none>

Annotations: <none>

CreationTimestamp: Mon, 08 Nov 2021 19:06:10 +0800

Reference: Deployment/php-apache

Metrics: ( current / target )

resource cpu on pods (as a percentage of request): 0% (1m) / 10%

Min replicas: 1

Max replicas: 10

Deployment pods: 1 current / 1 desired

Conditions:

Type Status Reason Message

---- ------ ------ -------

AbleToScale True ReadyForNewScale recommended size matches current size

ScalingActive True ValidMetricFound the HPA was able to successfully calculate a replica count from cpu resource utilization (percentage of request)

ScalingLimited True TooFewReplicas the desired replica count is less than the minimum replica count

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedComputeMetricsReplicas 53m (x12 over 56m) horizontal-pod-autoscaler invalid metrics (1 invalid out of 1), first error is: failed to get cpu utilization: unable to get metrics for resource cpu: no metrics returned from resource metrics API

Warning FailedGetResourceMetric 41m (x58 over 56m) horizontal-pod-autoscaler unable to get metrics for resource cpu: no metrics returned from resource metrics API

Warning FailedGetResourceMetric 36m (x19 over 40m) horizontal-pod-autoscaler unable to get metrics for resource cpu: unable to fetch metrics from resource metrics API: the server could not find the requested resource (get pods.metrics.k8s.io)

Normal SuccessfulRescale 12m horizontal-pod-autoscaler New size: 2; reason: cpu resource utilization (percentage of request) above target

Normal SuccessfulRescale 12m horizontal-pod-autoscaler New size: 4; reason: cpu resource utilization (percentage of request) above target

Normal SuccessfulRescale 12m horizontal-pod-autoscaler New size: 8; reason: cpu resource utilization (percentage of request) above target

Normal SuccessfulRescale 11m horizontal-pod-autoscaler New size: 10; reason: cpu resource utilization (percentage of request) above target

Normal SuccessfulRescale 77s horizontal-pod-autoscaler New size: 7; reason: All metrics below target

Normal SuccessfulRescale 62s horizontal-pod-autoscaler New size: 1; reason: All metrics below target

[root@k8s-master ~]# kubectl get event

####### (omitted)

3m47s Normal SuccessfulRescale horizontalpodautoscaler/php-apache New size: 7; reason: All metrics below target

3m47s Normal ScalingReplicaSet deployment/php-apache Scaled down replica set php-apache-84497cc9dd to 7

3m32s Normal SuccessfulRescale horizontalpodautoscaler/php-apache New size: 1; reason: All metrics below target

3m32s Normal ScalingReplicaSet deployment/php-apache Scaled down replica set php-apache-84497cc9dd to 1

# The Deployment's replica count is back to its original value

[root@k8s-master ~]# kubectl get deploy php-apache

NAME READY UP-TO-DATE AVAILABLE AGE

php-apache 1/1 1 1 61m
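In the events above, the scale-down only happened several minutes after the last scale-up, even though the load had already stopped. This is consistent with the HPA's scale-down stabilization window, which I assume is left at its default of 5 minutes in this cluster. The sketch below is a simplified model of that idea rather than the real controller logic: the class and method names are hypothetical, and it simply acts on the highest recommendation seen inside the window.

```python
from collections import deque


class ScaleDownStabilizer:
    """Simplified model: act on the highest recommendation in the window."""

    def __init__(self, window_seconds=300):
        self.window_seconds = window_seconds
        self.history = deque()  # entries are (timestamp, recommended_replicas)

    def stabilize(self, now, recommended):
        self.history.append((now, recommended))
        # Drop recommendations that have aged out of the window.
        while self.history[0][0] < now - self.window_seconds:
            self.history.popleft()
        # A lower recommendation only takes effect once every higher
        # recommendation has left the window.
        return max(replicas for _, replicas in self.history)


stabilizer = ScaleDownStabilizer()
for second, recommendation in [(0, 10), (60, 1), (299, 1), (301, 1)]:
    print(second, stabilizer.stabilize(second, recommendation))
```

In this model the recommendation of 10 made at second 0 keeps the replica count high until it expires, after which the lower value is applied.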

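Declaring an HPA in a YAML file

autoscaling/v1 version

# My cluster uses autoscaling/v1 here; some readers may have autoscaling/v2. Check the syntax of each version against your own cluster version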

[root@k8s-master hpa]# cat hpa-v1.yaml

apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  annotations:
  name: php-apache
  namespace: default
spec:
  maxReplicas: 10
  minReplicas: 1
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: php-apache
  targetCPUUtilizationPercentage: 10

# It looks like this version only offers targetCPUUtilizationPercentage, hmm

[root@k8s-master hpa]# kubectl explain hpa.spec.

KIND: HorizontalPodAutoscaler

VERSION: autoscaling/v1

RESOURCE: spec <Object>

DESCRIPTION:

behaviour of autoscaler. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status.

specification of a horizontal pod autoscaler.

FIELDS:

maxReplicas <integer> -required-

upper limit for the number of pods that can be set by the autoscaler;

cannot be smaller than MinReplicas.

minReplicas <integer>

minReplicas is the lower limit for the number of replicas to which the

autoscaler can scale down. It defaults to 1 pod. minReplicas is allowed to

be 0 if the alpha feature gate HPAScaleToZero is enabled and at least one

Object or External metric is configured. Scaling is active as long as at

least one metric value is available.

scaleTargetRef <Object> -required-

reference to scaled resource; horizontal pod autoscaler will learn the

current resource consumption and will set the desired number of pods by

using its Scale subresource.

targetCPUUtilizationPercentage <integer>

target average CPU utilization (represented as a percentage of requested

CPU) over all the pods; if not specified the default autoscaling policy

will be used.

My cluster currently has no v2, but it does have autoscaling/v2beta1. Both v2 and v2beta1 offer more than v1 and are not limited to the CPU utilization metric.

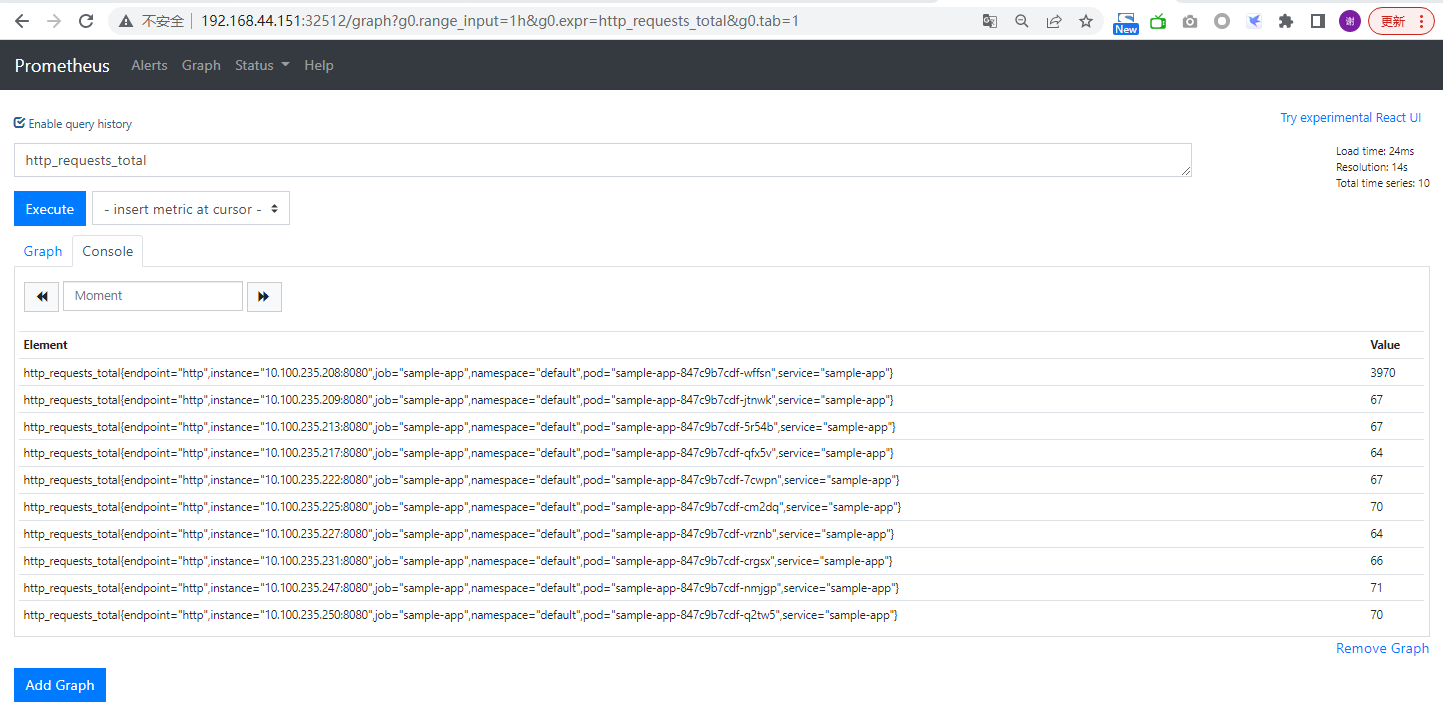
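For readers whose clusters do serve autoscaling/v2, the same CPU target is written as an entry in a metrics list instead of targetCPUUtilizationPercentage. The following Python sketch converts the v1 manifest above into that shape and prints it as JSON, which kubectl apply -f also accepts. The function name is hypothetical and only the CPU utilization case is handled; treat it as an illustration of the field mapping, not a general converter.

```python
import json


def hpa_v1_to_v2(v1):
    """Translate an autoscaling/v1 HPA dict into the autoscaling/v2 shape."""
    spec = v1["spec"]
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {
            "name": v1["metadata"]["name"],
            "namespace": v1["metadata"].get("namespace", "default"),
        },
        "spec": {
            "scaleTargetRef": spec["scaleTargetRef"],
            "minReplicas": spec.get("minReplicas", 1),
            "maxReplicas": spec["maxReplicas"],
            # v2 expresses every target as an item in the metrics list.
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    "target": {
                        "type": "Utilization",
                        "averageUtilization": spec["targetCPUUtilizationPercentage"],
                    },
                },
            }],
        },
    }


hpa_v1 = {
    "apiVersion": "autoscaling/v1",
    "kind": "HorizontalPodAutoscaler",
    "metadata": {"name": "php-apache", "namespace": "default"},
    "spec": {
        "maxReplicas": 10,
        "minReplicas": 1,
        "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment",
                           "name": "php-apache"},
        "targetCPUUtilizationPercentage": 10,
    },
}

print(json.dumps(hpa_v1_to_v2(hpa_v1), indent=2))
```

Note that autoscaling/v2beta1, the version available in my cluster, uses different field names from v2, so this output would not apply there as is.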

Scaling on custom metrics

Demonstration using the official Prometheus sample deployment

[root@k8s-master experimental]# pwd

/root/prometheus/kube-prometheus/experimental

[root@k8s-master experimental]# ll

total 4

drwxr-xr-x 2 root root 4096 Jan 9 23:44 custom-metrics-api

drwxr-xr-x 2 root root 292 Jan 9 19:21 metrics-server

[root@k8s-master experimental]# cd custom-metrics-api/

[root@k8s-master custom-metrics-api]# ll

total 36

-rw-r--r-- 1 root root 312 Sep 24 18:32 custom-metrics-apiserver-resource-reader-cluster-role-binding.yaml

-rw-r--r-- 1 root root 311 Sep 24 18:32 custom-metrics-apiservice.yaml

-rw-r--r-- 1 root root 194 Sep 24 18:32 custom-metrics-cluster-role.yaml

-rw-r--r-- 1 root root 3232 Sep 24 18:32 custom-metrics-configmap.yaml

-rw-r--r-- 1 root root 382 Sep 24 18:32 deploy.sh

-rw-r--r-- 1 root root 318 Sep 24 18:32 hpa-custom-metrics-cluster-role-binding.yaml

-rw-r--r-- 1 root root 1473 Sep 24 18:32 README.md

-rw-r--r-- 1 root root 1207 Jan 9 23:44 sample-app.yaml

-rw-r--r-- 1 root root 387 Sep 24 18:32 teardown.sh

[root@k8s-master custom-metrics-api]# bash deploy.sh

# Custom metrics depend on the adapter component; query the API with kubectl to check that data comes back

# kubectl get --raw "/apis/custom.metrics.k8s.io/v1beta1/namespaces/monitoring/pods/*/fs_usage_bytes" | jq .

[root@k8s-master hpa]# kubectl get --raw "/apis/custom.metrics.k8s.io/v1beta1/namespaces/default/pods/*/http_requests" | jq .

{

"kind": "MetricValueList",

"apiVersion": "custom.metrics.k8s.io/v1beta1",

"metadata": {

"selfLink": "/apis/custom.metrics.k8s.io/v1beta1/namespaces/default/pods/%2A/http_requests"

},

"items": [

{

"describedObject": {

"kind": "Pod",

"namespace": "default",

"name": "sample-app-847c9b7cdf-5lzvb",

"apiVersion": "/v1"

},

"metricName": "http_requests",

"timestamp": "2023-01-09T17:25:52Z",

"value": "200m"

},

####### (omitted)

Check the HPA for this application now

[root@k8s-master custom-metrics-api]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

php-apache Deployment/php-apache 0%/10% 1 10 1 50s

sample-app Deployment/sample-app 200m/500m 1 10 1 17s

[root@k8s-master custom-metrics-api]# kubectl describe hpa sample-app

Name: sample-app

Namespace: default

Labels: <none>

Annotations: CreationTimestamp: Mon, 9 Nov 2021 22:34:09 +0800

Reference: Deployment/sample-app

Metrics: ( current / target )

"http_requests" on pods: 200m / 500m

Min replicas: 1

Max replicas: 10

Deployment pods: 1 current / 1 desired

Conditions:

Type Status Reason Message

---- ------ ------ -------

AbleToScale True ReadyForNewScale recommended size matches current size

ScalingActive True ValidMetricFound the HPA was able to successfully calculate a replica count from pods metric http_requests

ScalingLimited False DesiredWithinRange the desired count is within the acceptable range

Events: <none>

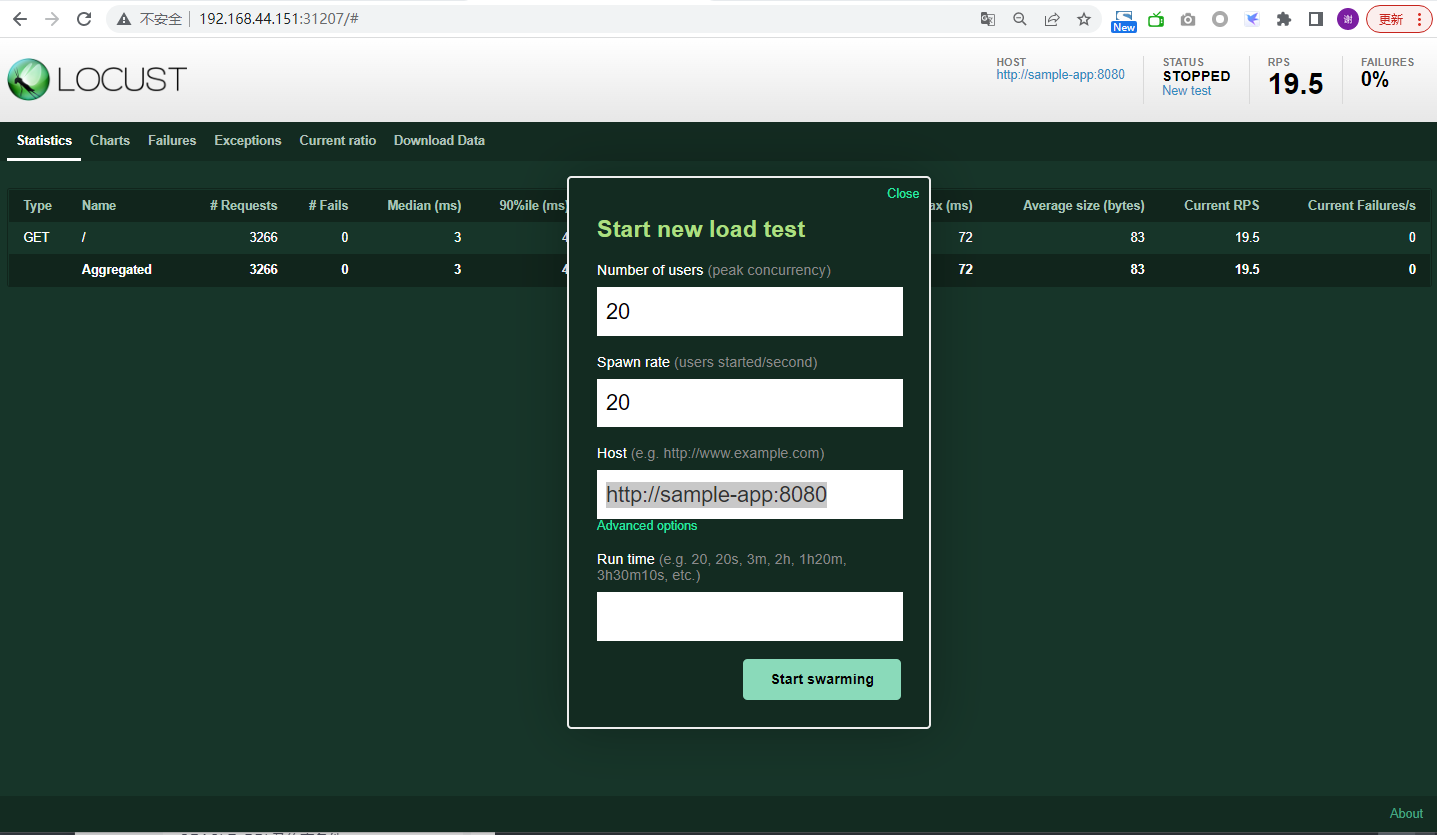
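The 200m/500m in the TARGETS column uses Kubernetes quantity notation, where the m suffix means one thousandth, so the HPA is comparing 0.2 against a target of 0.5 per pod. A small Python sketch of that conversion follows; the helper name is made up, and it deliberately handles only plain numbers and the milli suffix, not the other quantity suffixes such as k or Mi.

```python
from decimal import Decimal


def parse_quantity(text):
    """Parse a plain or milli-suffixed Kubernetes quantity such as '200m'."""
    if text.endswith("m"):
        # 'm' is the milli suffix: 200m is 200 / 1000.
        return Decimal(text[:-1]) / 1000
    return Decimal(text)


current = parse_quantity("200m")
target = parse_quantity("500m")
# A ratio below 1 means the metric is under target, so no scale-up is needed.
print(current, target, current / target)
```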

Load test with Locust

[root@k8s-master custom-metrics-api]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

php-apache Deployment/php-apache 0%/10% 1 10 1 4m44s

sample-app Deployment/sample-app 1890m/500m 1 10 8 4m11s

[root@k8s-master custom-metrics-api]# kubectl describe hpa sample-app

Name: sample-app

Namespace: default

Labels: <none>

Annotations: CreationTimestamp: Mon, 9 Nov 2021 22:34:09 +0800

Reference: Deployment/sample-app

Metrics: ( current / target )

"http_requests" on pods: 1890m / 500m

Min replicas: 1

Max replicas: 10

Deployment pods: 8 current / 10 desired

Conditions:

Type Status Reason Message

---- ------ ------ -------

AbleToScale True SucceededRescale the HPA controller was able to update the target scale to 10

ScalingActive True ValidMetricFound the HPA was able to successfully calculate a replica count from pods metric http_requests

ScalingLimited True TooManyReplicas the desired replica count is more than the maximum replica count

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfulRescale 34s horizontal-pod-autoscaler New size: 4; reason: pods metric http_requests above target

Normal SuccessfulRescale 18s horizontal-pod-autoscaler New size: 8; reason: pods metric http_requests above target

Normal SuccessfulRescale 3s horizontal-pod-autoscaler New size: 10; reason: pods metric http_requests above target

Looking back at this HPA, it is defined as follows, using the autoscaling/v2beta1 version

---
kind: HorizontalPodAutoscaler
apiVersion: autoscaling/v2beta1
metadata:
  name: sample-app
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: sample-app
  minReplicas: 1
  maxReplicas: 10
  metrics:
  - type: Pods
    pods:
      metricName: http_requests
      targetAverageValue: 500m

It watches the http_requests metric, yet the custom metric the service actually exposes is http_requests_total

[root@k8s-master custom-metrics-api]# curl 10.96.27.235:8080/metrics

# HELP http_requests_total The amount of requests served by the server in total

# TYPE http_requests_total counter

http_requests_total 56

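Prometheus has no http_requests metric either. It turns out that this custom-metric HPA queries through prometheus-adapter as a proxy: the metric name in the HPA has to correspond to the prometheus-adapter configuration, otherwise the adapter cannot run the query.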

# Take a look at the adapter ConfigMap

[root@k8s-master custom-metrics-api]# cat custom-metrics-configmap.yaml

####### (omitted)
    - seriesQuery: '{namespace!="",__name__!~"^container_.*"}'
      seriesFilters: []
      resources:
        template: <<.Resource>>
      name:
        matches: ^(.*)_seconds_total$
        as: ""
      metricsQuery: sum(rate(<<.Series>>{<<.LabelMatchers>>}[1m])) by (<<.GroupBy>>)
####### (omitted)
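To make the renaming concrete, here is a rough Python sketch of how a rule like the one above maps a Prometheus series name to the name exposed through the custom metrics API, and what the rendered query might look like. Several things are assumptions: the rule shown matches only *_seconds_total series, so I assume the omitted part of the ConfigMap contains a sibling rule with matches: ^(.*)_total$ that covers http_requests_total; with an empty as, the adapter documentation says a single capture group is used as the name; the helper names and the label matcher string are illustrative only.

```python
import re


def exposed_name(series, matches):
    """Name served by the adapter for a series, or None if the rule does not match."""
    pattern = re.compile(matches)
    found = pattern.match(series)
    if found is None:
        return None
    # With an empty `as`, a single capture group becomes the metric name.
    return found.group(1) if pattern.groups == 1 else found.group(0)


def render_query(template, series, label_matchers, group_by):
    """Fill the adapter's metricsQuery placeholders with concrete values."""
    return (template.replace("<<.Series>>", series)
                    .replace("<<.LabelMatchers>>", label_matchers)
                    .replace("<<.GroupBy>>", group_by))


template = "sum(rate(<<.Series>>{<<.LabelMatchers>>}[1m])) by (<<.GroupBy>>)"

# The rule shown above does not match the sample app's counter ...
print(exposed_name("http_requests_total", r"^(.*)_seconds_total$"))
# ... but the assumed sibling rule strips the _total suffix.
print(exposed_name("http_requests_total", r"^(.*)_total$"))
print(render_query(template, "http_requests_total", 'namespace="default"', "pod"))
```

Because the query wraps the counter in rate(...[1m]), the value the HPA sees is a per-second request rate per pod rather than the raw http_requests_total count.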
