
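Recently quite a few people have asked me about Prometheus high availability, saying they came over from my earlier article "Managing multiple Prometheus instances across multiple clusters". At first I was puzzled, because that article was never meant to be about high availability~~~ Its goal was to isolate monitoring views for different roles (each project team) and aggregate them (for the ops management team) >﹏<. Today we'll actually build high availability and aggregated querying of data from multiple clusters.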

Prometheus high availability

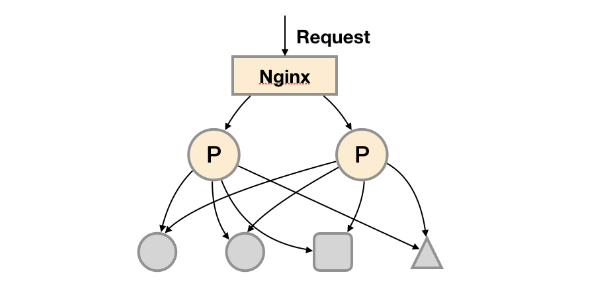

The simplest HA

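For a typical service, we can run multiple replicas and put a load balancer in front to distribute traffic. Prometheus, however, stores its data locally: each instance reads and writes only its own local storage. Queries may therefore return inconsistent data, and because the data is not persisted anywhere else, it can be lost. On top of that, every replica in this mode scrapes all targets, so the number of scrape requests grows linearly with the number of replicas.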

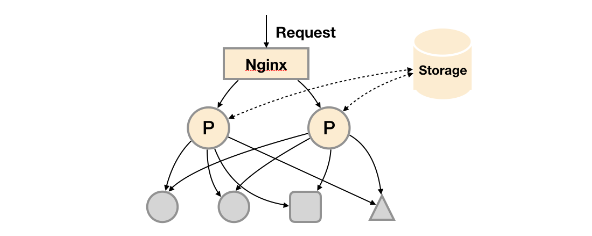

Adding remote storage

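Prometheus can ship its data to remote storage through its remote storage interface, which gives you remote persistence, but that is the only improvement over the previous setup. Remote storage can be implemented with an adapter component that automatically picks the data of one of the instances to write, which reduces the amount of data stored remotely.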
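As a rough sketch of this setup, each replica would carry a `remote_write` section plus a distinct external label, so that an adapter has something to tell the replicas apart by. The label name, its value and the adapter URL below are hypothetical placeholders; with the Prometheus Operator the equivalent settings are `spec.externalLabels` and `spec.remoteWrite` on the `Prometheus` resource.

```yaml
# prometheus.yml (fragment) on one of the HA replicas
global:
  external_labels:
    replica: prometheus-a        # hypothetical; must differ on the other replica
remote_write:
  - url: "http://remote-storage-adapter:9201/write"   # hypothetical adapter endpoint
```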

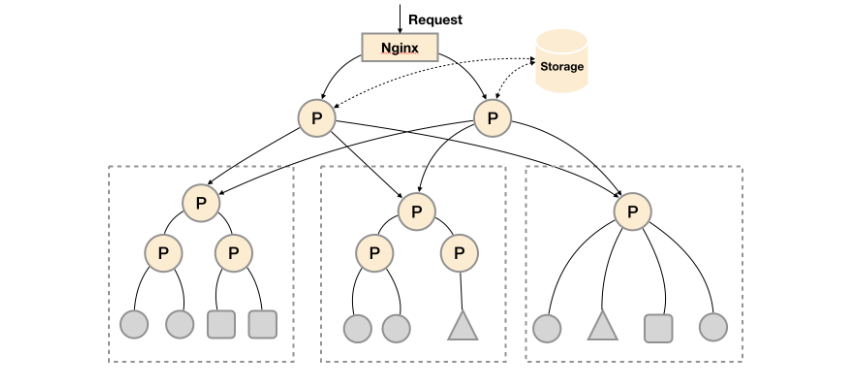

Using federation to distribute scrape jobs

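In this multi-level federation, the top-level instance is a data aggregation layer. The lower levels can hold data from different clusters, or they can be different instances sharing the same scrape job within one cluster. The mode mainly addresses heavy scrape workloads: the lower-level Prometheus servers are split by function, each scraping a different cluster or a different set of instances of the same job. The catch is that each lowest-level Prometheus is itself a single point of failure.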
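For reference, the top-level instance in such a hierarchy usually pulls from the lower levels through their `/federate` endpoint. The snippet is a minimal sketch: the target addresses and `match[]` selectors are hypothetical examples and should be replaced with your own.

```yaml
# prometheus.yml on the top-level (aggregating) instance
scrape_configs:
  - job_name: "federate"
    scrape_interval: 15s
    honor_labels: true              # keep the original job/instance labels
    metrics_path: "/federate"
    params:
      "match[]":
        - '{job="node-exporter"}'   # hypothetical selector
        - '{__name__=~"job:.*"}'    # results of recording rules
    static_configs:
      - targets:
          - "prometheus-cluster-a:9090"   # hypothetical lower-level instances
          - "prometheus-cluster-b:9090"
```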

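Each of these patterns extends data collection or data storage to some degree, but none of them addresses read consistency. Perhaps monitoring does not need strong consistency, and it is enough to keep the service available and the data persisted. The root cause is that Prometheus cannot use a remote data source directly as its primary storage for both reads and writes. If it could, none of this would be necessary.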

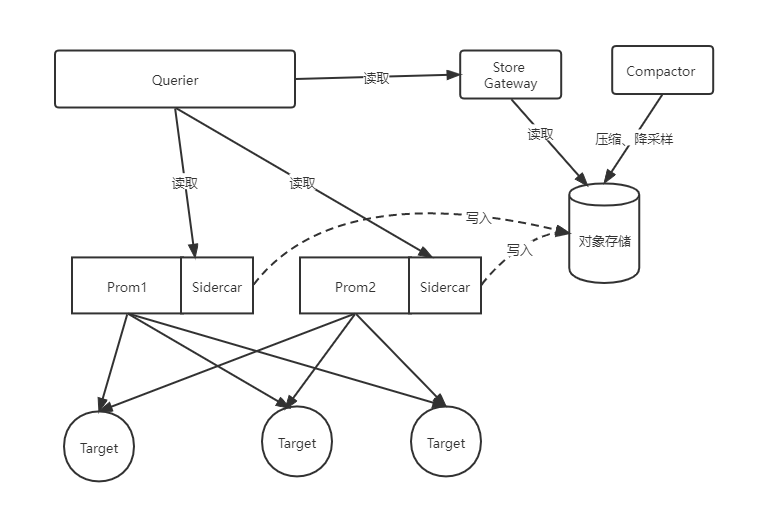

Thanos

The patterns above combine features of Prometheus itself. From here on we use Thanos components to extend Prometheus. Thanos has the following main features:

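- Data deduplication and merging

- Multiple object stores supported as the storage backend

- A global query view across multiple clusters

- Seamless compatibility with the Prometheus API

- Data compaction to speed up queries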

Thanos is not a single component but a set of components:

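- sidecar: runs alongside a Prometheus instance (for example, in the same pod). It reads Prometheus's data, serves it to the querier, and uploads it to object storage for long-term retention.

- querier: queries data from sidecars or from the store gateway. It is compatible with PromQL, so it can be added to Grafana as a data source for querying aggregated data.

- store gateway: the object storage gateway. After the sidecar has uploaded data to object storage, Prometheus removes its local data according to its retention policy. When the querier needs this historical data, it reads it from object storage through the store gateway.

- compactor: compacts data and downsamples historical data, which speeds up queries over long time ranges.

- ruler: manages alerting rules centrally for multiple Alertmanagers. It is not covered here. I recommend letting each cluster go straight to its own Alertmanager, which keeps the path shorter and alerts more timely.

- receiver: collects data in receiver mode; not covered here.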

Thanos sidecar mode

Thanos has two modes (sidecar and receiver), and this article covers only sidecar mode. Alertmanager is left out: each cluster keeps using its own (shortest path, fastest alerts).
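Later on the Prometheus Operator injects the sidecar for us. To show what sidecar mode means at the pod level, here is a rough hand-written sketch for a setup without the operator. The ConfigMap and volume names are hypothetical, the image tags reuse the ones from this article, and `prometheus.yml` is assumed to define `global.external_labels`, which the sidecar expects.

```yaml
# Pod spec fragment (hypothetical, no operator): both containers share the TSDB volume
containers:
  - name: prometheus
    image: quay.io/prometheus/prometheus:v2.36.1
    args:
      - --config.file=/etc/prometheus/prometheus.yml
      - --storage.tsdb.path=/prometheus
      # equal min/max block duration turns off local compaction
      - --storage.tsdb.min-block-duration=2h
      - --storage.tsdb.max-block-duration=2h
    volumeMounts:
      - name: prometheus-config
        mountPath: /etc/prometheus
      - name: prometheus-data
        mountPath: /prometheus
  - name: thanos-sidecar
    image: thanosio/thanos:main-2022-09-22-ff9ee9ac
    args:
      - sidecar
      - --tsdb.path=/prometheus                  # same volume as Prometheus
      - --prometheus.url=http://localhost:9090   # same pod, so localhost works
      - --grpc-address=0.0.0.0:10901             # StoreAPI queried by the querier
      - --http-address=0.0.0.0:10902
      - --objstore.config-file=/etc/thanos/objectstorage.yaml
    volumeMounts:
      - name: prometheus-data
        mountPath: /prometheus
      - name: object-storage-config
        mountPath: /etc/thanos
volumes:
  - name: prometheus-config
    configMap:
      name: prometheus-config                    # hypothetical ConfigMap with prometheus.yml
  - name: prometheus-data
    emptyDir: {}
  - name: object-storage-config
    secret:
      secretName: thanos-storage-oss
```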

We use Terraform and Ansible to quickly create three Kubernetes 1.22 clusters, each simulated with a single master node:

[root@cluster-oa-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

cluster-oa-master Ready control-plane,master 3h10m v1.22.3

[root@cluster-bi-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

cluster-bi-master Ready control-plane,master 3h10m v1.22.3

[root@cluster-pms-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

cluster-pms-master Ready control-plane,master 3h10m v1.22.3

Deploying prometheus-operator

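We deploy prometheus-operator from the kube-prometheus repository. The repository already integrates Thanos, but it is not enabled by default. We get its Prometheus running first, then adjust it step by step to enable the components.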

Basic HA deployment (repeat in every cluster):

# Clone the kube-prometheus repository

[root@cluster-pms-master ~]# git clone https://github.com/prometheus-operator/kube-prometheus.git

Cloning into 'kube-prometheus'...

remote: Enumerating objects: 17732, done.

remote: Counting objects: 100% (51/51), done.

remote: Compressing objects: 100% (32/32), done.

remote: Total 17732 (delta 20), reused 44 (delta 16), pack-reused 17681

Receiving objects: 100% (17732/17732), 9.36 MiB | 15.18 MiB/s, done.

Resolving deltas: 100% (11712/11712), done.

[root@cluster-pms-master ~]# cd kube-prometheus/

# Switch to the release that matches your cluster's Kubernetes version

[root@cluster-pms-master kube-prometheus]# git checkout release-0.11

Branch release-0.11 set up to track remote branch release-0.11 from origin.

Switched to a new branch 'release-0.11'

# Deploy the CRDs

[root@cluster-pms-master kube-prometheus]# cd manifests/

[root@cluster-pms-master manifests]# kubectl create -f setup/

customresourcedefinition.apiextensions.k8s.io/alertmanagerconfigs.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/alertmanagers.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/podmonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/probes.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheusrules.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/servicemonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/thanosrulers.monitoring.coreos.com created

namespace/monitoring created

# Deploy the default Prometheus stack

[root@cluster-pms-master manifests]# kubectl apply -f .

alertmanager.monitoring.coreos.com/main created

networkpolicy.networking.k8s.io/alertmanager-main created

poddisruptionbudget.policy/alertmanager-main created

prometheusrule.monitoring.coreos.com/alertmanager-main-rules created

secret/alertmanager-main created

service/alertmanager-main created

serviceaccount/alertmanager-main created

servicemonitor.monitoring.coreos.com/alertmanager-main created

clusterrole.rbac.authorization.k8s.io/blackbox-exporter created

clusterrolebinding.rbac.authorization.k8s.io/blackbox-exporter created

configmap/blackbox-exporter-configuration created

deployment.apps/blackbox-exporter created

networkpolicy.networking.k8s.io/blackbox-exporter created

service/blackbox-exporter created

serviceaccount/blackbox-exporter created

servicemonitor.monitoring.coreos.com/blackbox-exporter created

secret/grafana-config created

secret/grafana-datasources created

configmap/grafana-dashboard-alertmanager-overview created

configmap/grafana-dashboard-apiserver created

configmap/grafana-dashboard-cluster-total created

configmap/grafana-dashboard-controller-manager created

configmap/grafana-dashboard-grafana-overview created

configmap/grafana-dashboard-k8s-resources-cluster created

configmap/grafana-dashboard-k8s-resources-namespace created

configmap/grafana-dashboard-k8s-resources-node created

configmap/grafana-dashboard-k8s-resources-pod created

configmap/grafana-dashboard-k8s-resources-workload created

configmap/grafana-dashboard-k8s-resources-workloads-namespace created

configmap/grafana-dashboard-kubelet created

configmap/grafana-dashboard-namespace-by-pod created

configmap/grafana-dashboard-namespace-by-workload created

configmap/grafana-dashboard-node-cluster-rsrc-use created

configmap/grafana-dashboard-node-rsrc-use created

configmap/grafana-dashboard-nodes created

configmap/grafana-dashboard-persistentvolumesusage created

configmap/grafana-dashboard-pod-total created

configmap/grafana-dashboard-prometheus-remote-write created

configmap/grafana-dashboard-prometheus created

configmap/grafana-dashboard-proxy created

configmap/grafana-dashboard-scheduler created

configmap/grafana-dashboard-workload-total created

configmap/grafana-dashboards created

deployment.apps/grafana created

networkpolicy.networking.k8s.io/grafana created

prometheusrule.monitoring.coreos.com/grafana-rules created

service/grafana created

serviceaccount/grafana created

servicemonitor.monitoring.coreos.com/grafana created

prometheusrule.monitoring.coreos.com/kube-prometheus-rules created

clusterrole.rbac.authorization.k8s.io/kube-state-metrics created

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created

deployment.apps/kube-state-metrics created

networkpolicy.networking.k8s.io/kube-state-metrics created

prometheusrule.monitoring.coreos.com/kube-state-metrics-rules created

service/kube-state-metrics created

serviceaccount/kube-state-metrics created

servicemonitor.monitoring.coreos.com/kube-state-metrics created

prometheusrule.monitoring.coreos.com/kubernetes-monitoring-rules created

servicemonitor.monitoring.coreos.com/kube-apiserver created

servicemonitor.monitoring.coreos.com/coredns created

servicemonitor.monitoring.coreos.com/kube-controller-manager created

servicemonitor.monitoring.coreos.com/kube-scheduler created

servicemonitor.monitoring.coreos.com/kubelet created

clusterrole.rbac.authorization.k8s.io/node-exporter created

clusterrolebinding.rbac.authorization.k8s.io/node-exporter created

daemonset.apps/node-exporter created

networkpolicy.networking.k8s.io/node-exporter created

prometheusrule.monitoring.coreos.com/node-exporter-rules created

service/node-exporter created

serviceaccount/node-exporter created

servicemonitor.monitoring.coreos.com/node-exporter created

clusterrole.rbac.authorization.k8s.io/prometheus-k8s created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-k8s created

networkpolicy.networking.k8s.io/prometheus-k8s created

poddisruptionbudget.policy/prometheus-k8s created

prometheus.monitoring.coreos.com/k8s created

prometheusrule.monitoring.coreos.com/prometheus-k8s-prometheus-rules created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s-config created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s-config created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

service/prometheus-k8s created

serviceaccount/prometheus-k8s created

servicemonitor.monitoring.coreos.com/prometheus-k8s created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

clusterrole.rbac.authorization.k8s.io/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter created

clusterrolebinding.rbac.authorization.k8s.io/resource-metrics:system:auth-delegator created

clusterrole.rbac.authorization.k8s.io/resource-metrics-server-resources created

configmap/adapter-config created

deployment.apps/prometheus-adapter created

networkpolicy.networking.k8s.io/prometheus-adapter created

poddisruptionbudget.policy/prometheus-adapter created

rolebinding.rbac.authorization.k8s.io/resource-metrics-auth-reader created

service/prometheus-adapter created

serviceaccount/prometheus-adapter created

servicemonitor.monitoring.coreos.com/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/prometheus-operator created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-operator created

deployment.apps/prometheus-operator created

networkpolicy.networking.k8s.io/prometheus-operator created

prometheusrule.monitoring.coreos.com/prometheus-operator-rules created

service/prometheus-operator created

serviceaccount/prometheus-operator created

servicemonitor.monitoring.coreos.com/prometheus-operator created

# After deployment, check the resources in the monitoring namespace (image pull issues are not covered here)

[root@cluster-pms-master manifests]# kubectl get pod -n monitoring

NAME READY STATUS RESTARTS AGE

alertmanager-main-0 2/2 Running 0 116s

alertmanager-main-1 2/2 Running 0 116s

alertmanager-main-2 2/2 Running 0 116s

blackbox-exporter-5c545d55d6-kxmp7 3/3 Running 0 2m30s

grafana-7945df75ff-2b8dr 1/1 Running 0 2m29s

kube-state-metrics-54bd6b479c-5nj9w 3/3 Running 0 2m29s

node-exporter-wxlxw 2/2 Running 0 2m28s

prometheus-adapter-7bf7ff5b67-chmm6 1/1 Running 0 2m28s

prometheus-adapter-7bf7ff5b67-f8d48 1/1 Running 0 2m28s

prometheus-k8s-0 2/2 Running 0 114s

prometheus-k8s-1 2/2 Running 0 114s

prometheus-operator-54dd69bbf6-btp95 2/2 Running 0 2m27s

Introducing the sidecar

We start with the cluster-pms cluster, which acts as the central management platform and takes over the monitoring data of the other clusters.

Now adjust the Prometheus resource object:

- Add externalLabels to mark which cluster or environment this Prometheus instance belongs to (other labels can be added as well)

- Add the sidecar container

[root@cluster-pms-master manifests]# cat prometheus-prometheus.yaml

```yaml
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/instance: k8s
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 2.36.1
  name: k8s
  namespace: monitoring
spec:
  alerting:
    alertmanagers:
    - apiVersion: v2
      name: alertmanager-main
      namespace: monitoring
      port: web
  enableFeatures: []
  externalLabels:
    env: prod              # change this label for each environment
    cluster: cluster-pms   # change this label for each cluster
  image: quay.io/prometheus/prometheus:v2.36.1
  nodeSelector:
    kubernetes.io/os: linux
  podMetadata:
    labels:
      app.kubernetes.io/component: prometheus
      app.kubernetes.io/instance: k8s
      app.kubernetes.io/name: prometheus
      app.kubernetes.io/part-of: kube-prometheus
      app.kubernetes.io/version: 2.36.1
  podMonitorNamespaceSelector: {}
  podMonitorSelector: {}
  probeNamespaceSelector: {}
  probeSelector: {}
  replicas: 2
  resources:
    requests:
      memory: 400Mi
  ruleNamespaceSelector: {}
  ruleSelector: {}
  securityContext:
    fsGroup: 2000
    runAsNonRoot: true
    runAsUser: 1000
  serviceAccountName: prometheus-k8s
  serviceMonitorNamespaceSelector: {}
  serviceMonitorSelector: {}
  version: 2.36.1
  thanos:                  # add the thanos-sidecar container
    baseImage: thanosio/thanos
    version: main-2022-09-22-ff9ee9ac
```

[root@cluster-pms-master manifests]# kubectl apply -f prometheus-prometheus.yaml

prometheus.monitoring.coreos.com/k8s configured

[root@cluster-pms-master manifests]# kubectl get pod -n monitoring | grep prometheus-k8s

prometheus-k8s-0 3/3 Running 0 116s

prometheus-k8s-1 3/3 Running 0 2m13s

Having the sidecar store data in object storage

Here I use Alibaba Cloud OSS directly instead of deploying MinIO; readers can deploy MinIO themselves~
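If you run MinIO instead, the same Secret can carry a Thanos `S3` client config. The snippet is a sketch that keeps the Secret name used in this article, so the later manifests would not need to change. The MinIO endpoint is a hypothetical in-cluster address and the credentials are placeholders.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: thanos-storage-oss           # same name as the OSS variant
type: Opaque
stringData:
  objectstorage.yaml: |
    type: S3
    config:
      bucket: "thanos-storage-gw"
      endpoint: "minio.minio.svc:9000"   # hypothetical MinIO Service address
      access_key: "xxxxx"
      secret_key: "dddddd"
      insecure: true                     # MinIO served over plain HTTP
```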

OSS object storage configuration file

[root@cluster-pms-master ~]# cat thanos-storage-gw.yaml

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: thanos-storage-oss
type: Opaque
stringData:
  objectstorage.yaml: |
    type: ALIYUNOSS
    config:
      bucket: "thanos-storage-gw"
      endpoint: "oss-cn-hongkong-internal.aliyuncs.com"
      access_key_id: "xxxxx"
      access_key_secret: "dddddd"
```

[root@cluster-pms-master ~]# kubectl apply -f thanos-storage-gw.yaml -n monitoring

Adjust the Prometheus resource object again

[root@cluster-pms-master manifests]# cat prometheus-prometheus.yaml

```yaml
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/instance: k8s
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 2.36.1
  name: k8s
  namespace: monitoring
spec:
  alerting:
    alertmanagers:
    - apiVersion: v2
      name: alertmanager-main
      namespace: monitoring
      port: web
  enableFeatures: []
  externalLabels:
    env: prod              # change this label for each environment
    cluster: cluster-pms   # change this label for each cluster
  image: quay.io/prometheus/prometheus:v2.36.1
  nodeSelector:
    kubernetes.io/os: linux
  podMetadata:
    labels:
      app.kubernetes.io/component: prometheus
      app.kubernetes.io/instance: k8s
      app.kubernetes.io/name: prometheus
      app.kubernetes.io/part-of: kube-prometheus
      app.kubernetes.io/version: 2.36.1
  podMonitorNamespaceSelector: {}
  podMonitorSelector: {}
  probeNamespaceSelector: {}
  probeSelector: {}
  replicas: 2
  resources:
    requests:
      memory: 400Mi
  ruleNamespaceSelector: {}
  ruleSelector: {}
  securityContext:
    fsGroup: 2000
    runAsNonRoot: true
    runAsUser: 1000
  serviceAccountName: prometheus-k8s
  serviceMonitorNamespaceSelector: {}
  serviceMonitorSelector: {}
  version: 2.36.1
  thanos:                  # add the thanos-sidecar container
    baseImage: thanosio/thanos
    version: main-2022-09-22-ff9ee9ac
    objectStorageConfig:   # upload blocks to the bucket defined in the Secret
      key: objectstorage.yaml
      name: thanos-storage-oss
```

[root@cluster-pms-master manifests]# kubectl apply -f prometheus-prometheus.yaml -n monitoring

Introducing the querier

Deploy the querier component. It provides a web UI that looks very similar to the Prometheus UI.

[root@cluster-pms-master ~]# cat thanos-query.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: thanos-query
  namespace: monitoring
  labels:
    app.kubernetes.io/name: thanos-query
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: thanos-query
  template:
    metadata:
      labels:
        app.kubernetes.io/name: thanos-query
    spec:
      containers:
      - name: thanos
        image: thanosio/thanos:main-2022-09-22-ff9ee9ac
        args:
        - query
        - --log.level=debug
        - --query.replica-label=prometheus_replica  # prometheus-operator sets the replica label prometheus_replica
        # Discover local store APIs using DNS SRV.
        - --store=dnssrv+prometheus-operated:10901  # this headless Service resolves to every Prometheus replica in the cluster
        ports:
        - name: http
          containerPort: 10902
        - name: grpc
          containerPort: 10901
        livenessProbe:
          httpGet:
            path: /-/healthy
            port: http
          initialDelaySeconds: 10
        readinessProbe:
          httpGet:
            path: /-/healthy
            port: http
          initialDelaySeconds: 15
---
apiVersion: v1
kind: Service
metadata:
  name: thanos-query
  namespace: monitoring
  labels:
    app.kubernetes.io/name: thanos-query
spec:
  ports:
  - port: 9090
    targetPort: http
    name: http
    nodePort: 30909
  selector:
    app.kubernetes.io/name: thanos-query
  type: NodePort
```

[root@cluster-pms-master ~]# kubectl apply -f thanos-query.yaml -n monitoring

Adjust the network policies. I'm using the release-0.11 branch of kube-prometheus, and its manifests define network policies:

[root@cluster-pms-master manifests]# ll *networkPolicy*

-rw-r--r-- 1 root root 977 Mar 17 15:02 alertmanager-networkPolicy.yaml

-rw-r--r-- 1 root root 722 Mar 17 15:02 blackboxExporter-networkPolicy.yaml

-rw-r--r-- 1 root root 651 Mar 17 15:02 grafana-networkPolicy.yaml

-rw-r--r-- 1 root root 723 Mar 17 15:02 kubeStateMetrics-networkPolicy.yaml

-rw-r--r-- 1 root root 671 Mar 17 15:02 nodeExporter-networkPolicy.yaml

-rw-r--r-- 1 root root 564 Mar 17 15:02 prometheusAdapter-networkPolicy.yaml

-rw-r--r-- 1 root root 1068 Mar 17 23:03 prometheus-networkPolicy.yaml

-rw-r--r-- 1 root root 694 Mar 17 15:02 prometheusOperator-networkPolicy.yaml

[root@cluster-pms-master manifests]# kubectl get networkpolicy -n monitoring

NAME POD-SELECTOR AGE

alertmanager-main app.kubernetes.io/component=alert-router,app.kubernetes.io/instance=main,app.kubernetes.io/name=alertmanager,app.kubernetes.io/part-of=kube-prometheus 22h

blackbox-exporter app.kubernetes.io/component=exporter,app.kubernetes.io/name=blackbox-exporter,app.kubernetes.io/part-of=kube-prometheus 22h

grafana app.kubernetes.io/component=grafana,app.kubernetes.io/name=grafana,app.kubernetes.io/part-of=kube-prometheus 22h

kube-state-metrics app.kubernetes.io/component=exporter,app.kubernetes.io/name=kube-state-metrics,app.kubernetes.io/part-of=kube-prometheus 22h

node-exporter app.kubernetes.io/component=exporter,app.kubernetes.io/name=node-exporter,app.kubernetes.io/part-of=kube-prometheus 22h

prometheus-adapter app.kubernetes.io/component=metrics-adapter,app.kubernetes.io/name=prometheus-adapter,app.kubernetes.io/part-of=kube-prometheus 22h

prometheus-k8s app.kubernetes.io/component=prometheus,app.kubernetes.io/instance=k8s,app.kubernetes.io/name=prometheus,app.kubernetes.io/part-of=kube-prometheus 15h

prometheus-operator app.kubernetes.io/component=controller,app.kubernetes.io/name=prometheus-operator,app.kubernetes.io/part-of=kube-prometheus 22h

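So we need to allow traffic from thanos-query to Prometheus. If you're not sure how to adjust the policies, you can delete all network policies in the monitoring namespace for now.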

[root@cluster-pms-master manifests]# cat prometheus-networkPolicy.yaml

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/instance: k8s
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 2.36.1
  name: prometheus-k8s
  namespace: monitoring
spec:
  egress:
  - {}
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app.kubernetes.io/name: prometheus
    ports:
    - port: 9090
      protocol: TCP
    - port: 8080
      protocol: TCP
  - from:
    - podSelector:
        matchLabels:
          app.kubernetes.io/name: grafana
    ports:
    - port: 9090
      protocol: TCP
  # allow thanos-query to call the sidecar on port 10901
  - from:
    - podSelector:
        matchLabels:
          app.kubernetes.io/name: thanos-query
    ports:
    - port: 10901
      protocol: TCP
  podSelector:
    matchLabels:
      app.kubernetes.io/component: prometheus
      app.kubernetes.io/instance: k8s
      app.kubernetes.io/name: prometheus
      app.kubernetes.io/part-of: kube-prometheus
  policyTypes:
  - Egress
  - Ingress
```

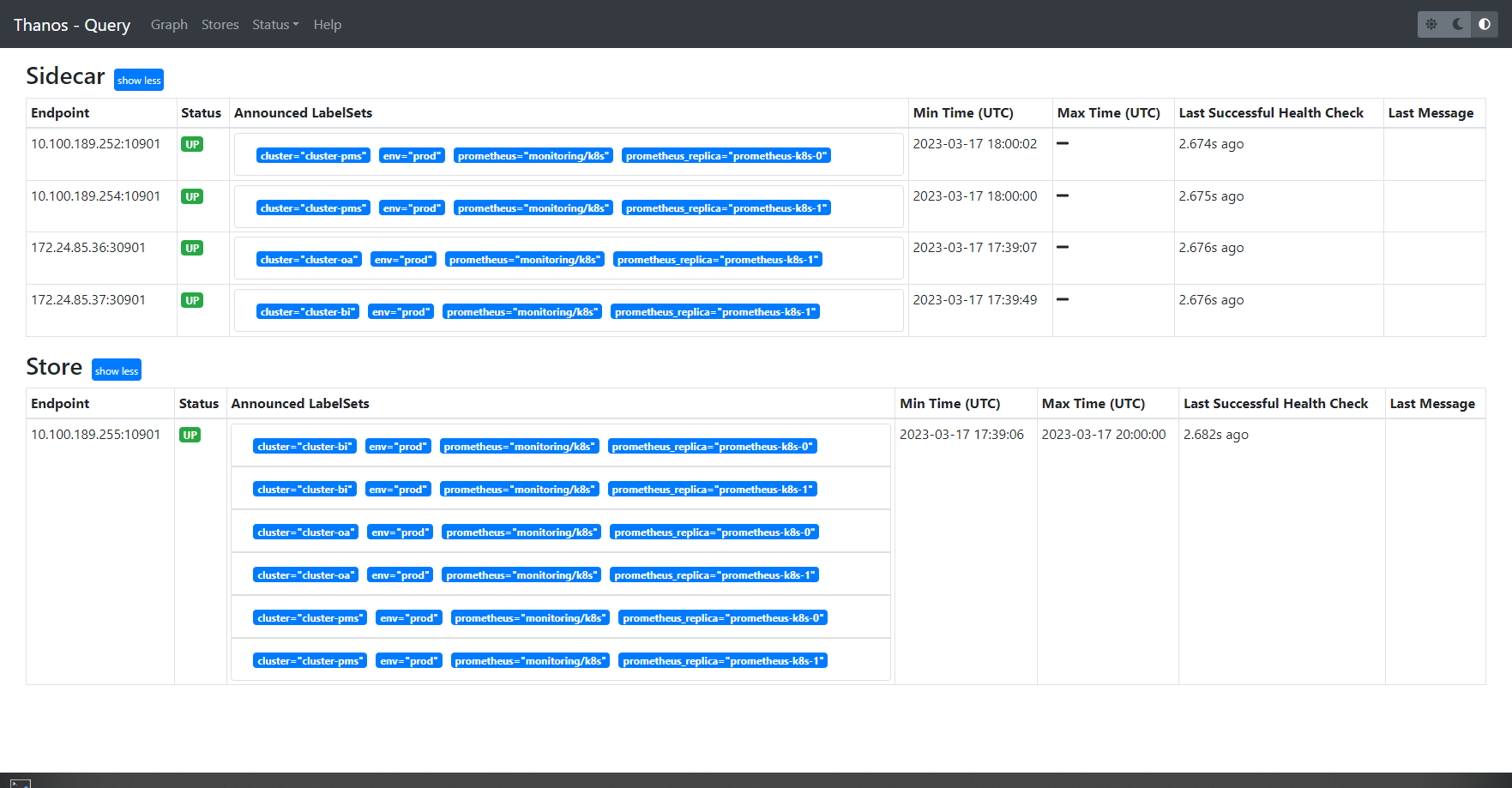

Once the traffic is allowed, open the NodePort exposed by the querier to look at its UI:

[root@cluster-pms-master manifests]# kubectl get svc -n monitoring | grep than

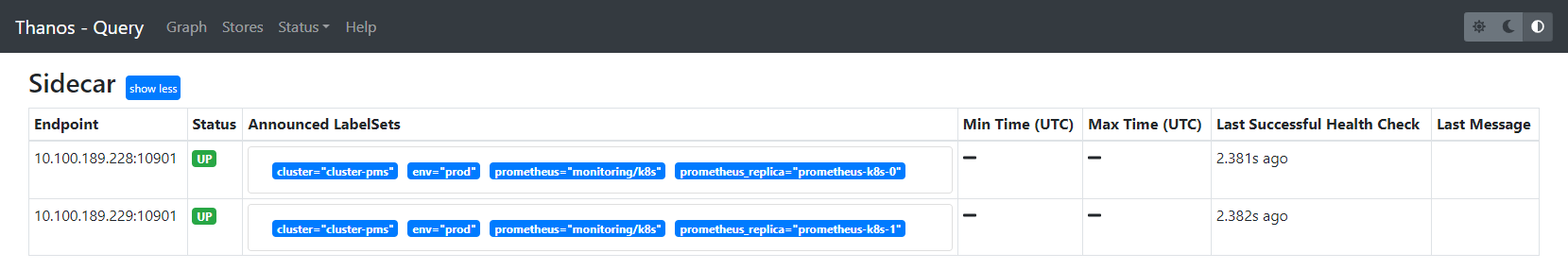

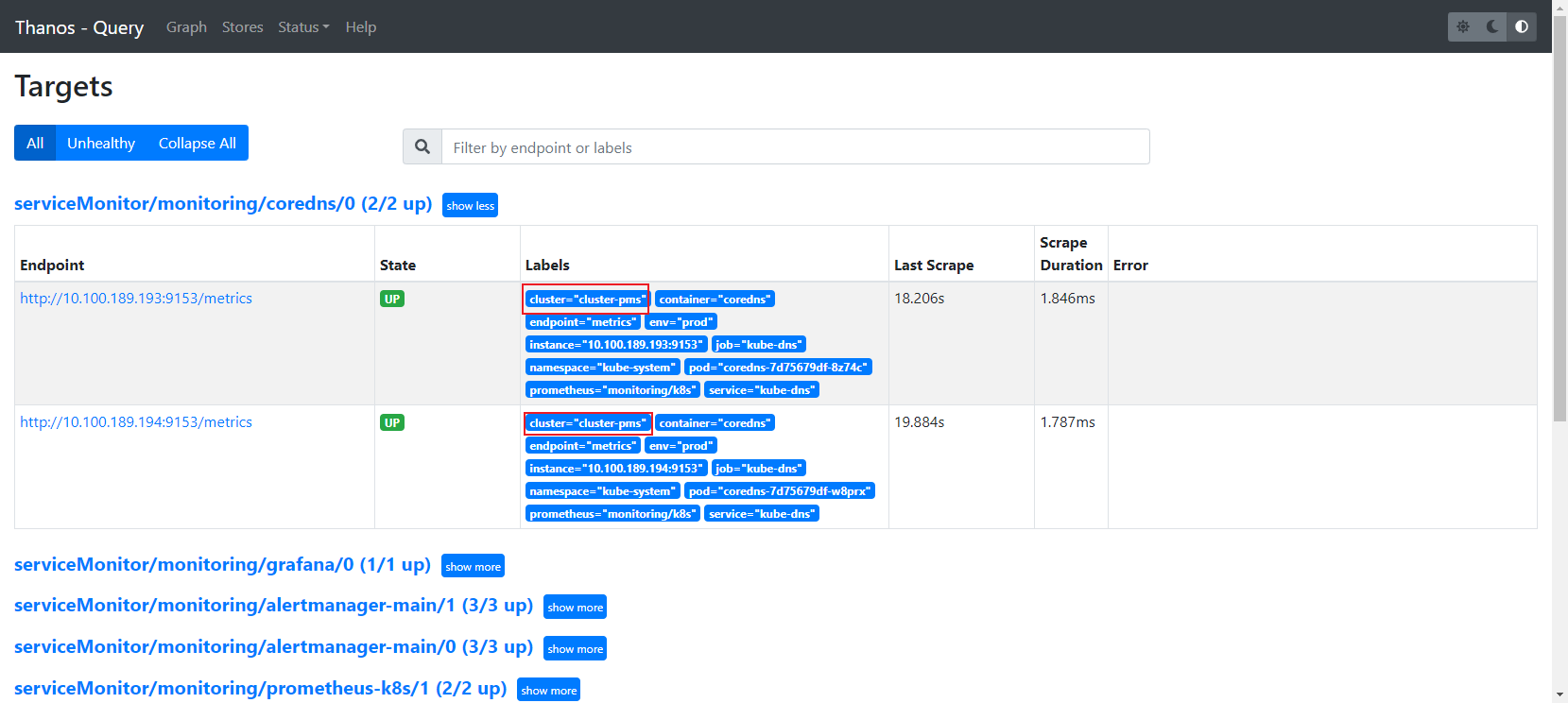

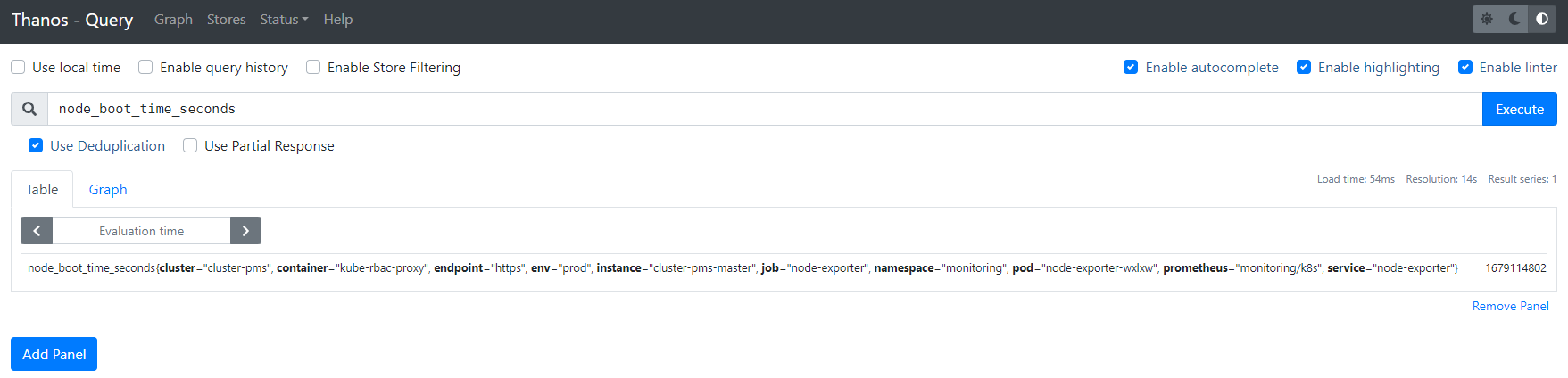

thanos-query NodePort 10.96.28.40 <none> 9090:30909/TCP 17m可以看到当前集群有两个prometheus实例,且打上了cluster和env标签区分

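- “Use Deduplication”: the querier deduplicates data based on the configured replica label, so users never see duplicate data caused by the HA setup.

- “Use Partial Response”: allows partial responses, which means choosing between consistency and availability. If one store fails and cannot respond, should the data from the other, healthy stores still be returned?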
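The two checkboxes map to the `dedup` and `partial_response` parameters of the querier's HTTP API. Defaults for requests that omit them can also be set on the querier. The args below are a sketch for `thanos-query.yaml`, based on the Thanos v0.28 querier flags. Partial response is already enabled by default; the flag is written out only to make the choice visible.

```yaml
args:
  - query
  # series that differ only in this label are treated as replicas and merged
  - --query.replica-label=prometheus_replica
  # default when a request has no partial_response parameter;
  # use --no-query.partial-response to fail the query instead when a store is down
  - --query.partial-response
```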

Connecting sidecars from external clusters

Note: everything above was done in cluster-pms. Now we move on to the cluster-oa and cluster-bi clusters.

Configure the OSS secret

```shell
cat >thanos-storage-oss.yaml<<-'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: thanos-storage-oss
type: Opaque
stringData:
  objectstorage.yaml: |
    type: ALIYUNOSS
    config:
      bucket: "thanos-storage-gw"
      endpoint: "oss-cn-hongkong-internal.aliyuncs.com"
      access_key_id: "xxxx"
      access_key_secret: "ddfdfd"
EOF

kubectl apply -f thanos-storage-oss.yaml -n monitoring
```

Configure Prometheus to use the sidecar

Note: set externalLabels separately for each of the two clusters

```shell
cat >prometheus-prometheus.yaml<<-'EOF'
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/instance: k8s
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 2.36.1
  name: k8s
  namespace: monitoring
spec:
  alerting:
    alertmanagers:
    - apiVersion: v2
      name: alertmanager-main
      namespace: monitoring
      port: web
  enableFeatures: []
  externalLabels:
    env: prod             # change this label for each environment
    cluster: cluster-bi   # change this label for each cluster (cluster-oa on the other one)
  image: quay.io/prometheus/prometheus:v2.36.1
  nodeSelector:
    kubernetes.io/os: linux
  podMetadata:
    labels:
      app.kubernetes.io/component: prometheus
      app.kubernetes.io/instance: k8s
      app.kubernetes.io/name: prometheus
      app.kubernetes.io/part-of: kube-prometheus
      app.kubernetes.io/version: 2.36.1
  podMonitorNamespaceSelector: {}
  podMonitorSelector: {}
  probeNamespaceSelector: {}
  probeSelector: {}
  replicas: 2
  resources:
    requests:
      memory: 400Mi
  ruleNamespaceSelector: {}
  ruleSelector: {}
  securityContext:
    fsGroup: 2000
    runAsNonRoot: true
    runAsUser: 1000
  serviceAccountName: prometheus-k8s
  serviceMonitorNamespaceSelector: {}
  serviceMonitorSelector: {}
  version: 2.36.1
  thanos:                 # add the thanos-sidecar container
    baseImage: thanosio/thanos
    version: main-2022-09-22-ff9ee9ac
    objectStorageConfig:
      key: objectstorage.yaml
      name: thanos-storage-oss
EOF

kubectl apply -f prometheus-prometheus.yaml -n monitoring
```

Change the Prometheus Service to NodePort

```shell
cat >prometheus-service.yaml<<-'EOF'
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/instance: k8s
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 2.36.1
  name: prometheus-k8s
  namespace: monitoring
spec:
  # switch to NodePort
  type: NodePort
  ports:
  - name: web
    port: 9090
    targetPort: web
  # expose the sidecar gRPC port on a fixed NodePort, 30901
  - name: grpc
    port: 10901
    protocol: TCP
    targetPort: grpc
    nodePort: 30901
  - name: reloader-web
    port: 8080
    targetPort: reloader-web
  selector:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/instance: k8s
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
  sessionAffinity: ClientIP
EOF

kubectl apply -f prometheus-service.yaml -n monitoring
```

Adjust the network policy

Allow traffic from the cluster-pms network range to port 10901 of the Prometheus pods (if you're not sure how, just delete the policy):

```shell
cat >prometheus-networkPolicy.yaml<<-'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/instance: k8s
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 2.36.1
  name: prometheus-k8s
  namespace: monitoring
spec:
  egress:
  - {}
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app.kubernetes.io/name: prometheus
    ports:
    - port: 9090
      protocol: TCP
    - port: 8080
      protocol: TCP
  - from:
    - podSelector:
        matchLabels:
          app.kubernetes.io/name: grafana
    ports:
    - port: 9090
      protocol: TCP
  # allow the internal VPC range (where cluster-pms lives) to reach the sidecar and the web port
  - from:
    - ipBlock:
        cidr: 172.24.80.0/20
    ports:
    - port: 10901
      protocol: TCP
    - port: 9090
      protocol: TCP
  podSelector:
    matchLabels:
      app.kubernetes.io/component: prometheus
      app.kubernetes.io/instance: k8s
      app.kubernetes.io/name: prometheus
      app.kubernetes.io/part-of: kube-prometheus
  policyTypes:
  - Egress
  - Ingress
EOF

kubectl apply -f prometheus-networkPolicy.yaml -n monitoring
```

The sidecar's port 10901 is now exposed through a NodePort, so the querier in the central cluster cluster-pms can pull data from it.

Connecting thanos-query to the sidecars of the two clusters

Note: we're back on the central cluster cluster-pms now. The steps above are all the other clusters need.

[root@cluster-pms-master ~]# cat thanos-query.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: thanos-query

namespace: monitoring

labels:

app.kubernetes.io/name: thanos-query

spec:

selector:

matchLabels:

app.kubernetes.io/name: thanos-query

template:

metadata:

labels:

app.kubernetes.io/name: thanos-query

spec:

containers:

- name: thanos

image: thanosio/thanos:main-2022-09-22-ff9ee9ac

args:

- query

- --log.level=debug

- --query.replica-label=prometheus_replica # prometheus-operator 里面配置的副本标签为 prometheus_replica

# Discover local store APIs using DNS SRV.

- --store=dnssrv+prometheus-operated:10901

# 接入外部集群的prometheus实例的svc nodeport 地址

- --store=172.24.85.36:30901

- --store=172.24.85.37:30901

ports:

- name: http

containerPort: 10902

- name: grpc

containerPort: 10901

livenessProbe:

httpGet:

path: /-/healthy

port: http

initialDelaySeconds: 10

readinessProbe:

httpGet:

path: /-/healthy

port: http

initialDelaySeconds: 15

---

apiVersion: v1

kind: Service

metadata:

name: thanos-query

namespace: monitoring

labels:

app.kubernetes.io/name: thanos-query

spec:

ports:

- port: 9090

targetPort: http

name: http

nodePort: 30909

selector:

app.kubernetes.io/name: thanos-query

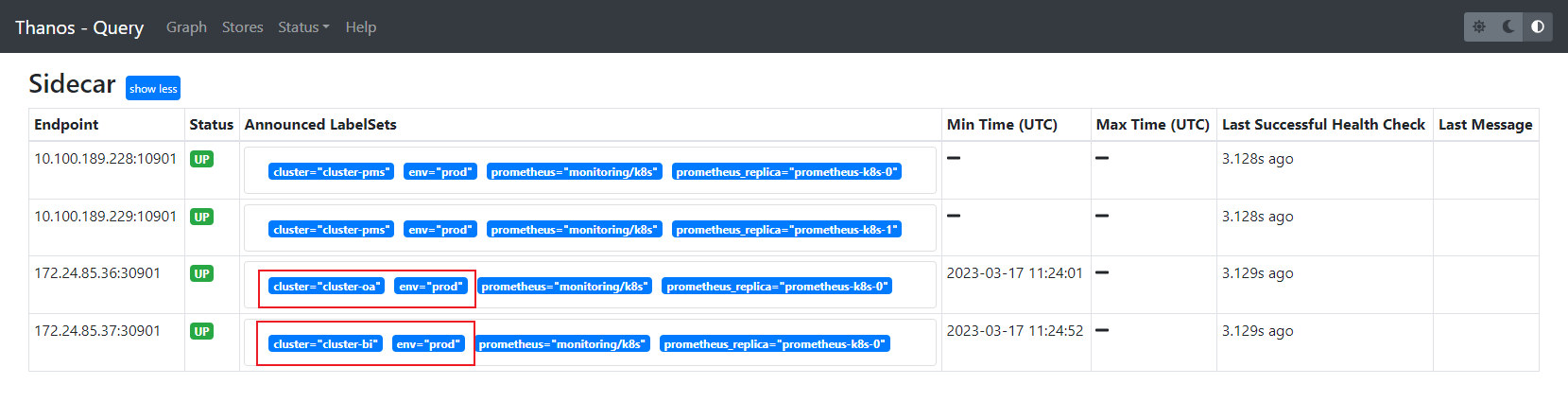

type: NodePort调整完后就可以看到对应集群的实例了
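As more clusters join, the list of `--store` flags keeps growing, and every change means restarting the querier. One possible alternative is the querier's file-based discovery (`--store.sd-files`), which reads targets in the Prometheus file-SD format. The sketch below assumes the Thanos v0.28 flag name; the ConfigMap name and mount path are hypothetical.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: thanos-query-stores        # hypothetical name
  namespace: monitoring
data:
  stores.yaml: |
    - targets:
        - "172.24.85.36:30901"
        - "172.24.85.37:30901"
# In the thanos-query Deployment (fragment):
#   args:         - --store.sd-files=/etc/thanos/stores.yaml
#   volumeMounts: - {name: stores, mountPath: /etc/thanos}
#   volumes:      - {name: stores, configMap: {name: thanos-query-stores}}
```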

Introducing the store gateway

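Earlier we had the sidecar persist data to OSS. The data is not synced to OSS in real time. Instead, a block is uploaded every two hours, and the local data is later removed according to Prometheus's retention policy. This behavior depends on the following Prometheus startup flags: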

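```
# Data scraped by Prometheus is held in memory until it is written to disk
# Minimum and maximum time span of a block written to disk (2h of data here)
# Data is stored in blocks; each block holds all samples of its time window. The compactor introduced later downsamples these blocks
--storage.tsdb.max-block-duration=2h
--storage.tsdb.min-block-duration=2h
```

The --storage.tsdb.min-block-duration and --storage.tsdb.max-block-duration flags must both be set, to the same value, to stop Prometheus from compacting data locally. The compactor takes over this job later, and local compaction would make the compactor fail.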

So wait two hours before any data appears in OSS~~~
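Because every finished 2h block ends up in OSS, the local TSDB mainly serves recent queries, and its retention can be kept short. The following is a sketch of the relevant part of the `Prometheus` resource. The `12h` value is only an example; the operator's default of 24h also works. Whatever value you choose should comfortably exceed one 2h block plus its upload time.

```yaml
# prometheus-prometheus.yaml (fragment)
spec:
  retention: 12h                 # example: local data older than this is served from OSS
  thanos:
    baseImage: thanosio/thanos
    version: main-2022-09-22-ff9ee9ac
    objectStorageConfig:
      key: objectstorage.yaml
      name: thanos-storage-oss
```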

To query the historical data in OSS, we introduce the store gateway:

[root@cluster-pms-master ~]# cat store-gw.yaml

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: thanos-store
  namespace: monitoring
  labels:
    app: thanos-store
spec:
  replicas: 1
  selector:
    matchLabels:
      app: thanos-store
  serviceName: thanos-store
  template:
    metadata:
      labels:
        app: thanos-store
        thanos-store-api: "true"
    spec:
      containers:
      - name: thanos
        image: thanosio/thanos:main-2022-09-22-ff9ee9ac
        args:
        - "store"
        - "--log.level=debug"
        - "--data-dir=/data"
        - "--objstore.config-file=/etc/secret/objectstorage.yaml"
        - "--index-cache-size=500MB"
        - "--chunk-pool-size=500MB"
        ports:
        - name: http
          containerPort: 10902
        - name: grpc
          containerPort: 10901
        livenessProbe:
          httpGet:
            port: 10902
            path: /-/healthy
          initialDelaySeconds: 10
        readinessProbe:
          httpGet:
            port: 10902
            path: /-/ready
          initialDelaySeconds: 15
        volumeMounts:
        - name: object-storage-config
          mountPath: /etc/secret
          readOnly: false
      volumes:
      - name: object-storage-config
        secret:
          secretName: thanos-storage-oss
---
apiVersion: v1
kind: Service
metadata:
  name: thanos-store
  namespace: monitoring
spec:
  type: ClusterIP
  clusterIP: None
  ports:
  - name: grpc
    port: 10901
    targetPort: grpc
  selector:
    app: thanos-store
```

The querier UI now lists an additional type of store under Stores.
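The querier manifest above has no entry pointing at the store gateway. Unless it is discovered some other way, the querier needs one more `--store` flag. The sketch below resolves the named `grpc` port of the headless `thanos-store` Service through an SRV lookup. It assumes the default `cluster.local` cluster domain.

```yaml
# thanos-query.yaml (fragment): args with the store gateway added
args:
  - query
  - --log.level=debug
  - --query.replica-label=prometheus_replica
  - --store=dnssrv+prometheus-operated:10901
  - --store=172.24.85.36:30901
  - --store=172.24.85.37:30901
  # store gateway: SRV record of the "grpc" port on the headless thanos-store Service
  - --store=dnssrv+_grpc._tcp.thanos-store.monitoring.svc.cluster.local
```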

Introducing the compactor

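Data is now uploaded to OSS every two hours, and the store gateway lets the querier read the historical data. That historical data is not compacted and keeps piling up, so we introduce the compactor to compact and downsample it.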

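There is also a query-speed reason. When a query covers a large time range, it also reads a large amount of data, which makes it very slow. For wide time ranges we usually don't need that much detail; a rough picture is enough.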

[root@cluster-pms-master ~]# cat thanos-compactor.yaml

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: thanos-compactor
  namespace: monitoring
  labels:
    app: thanos-compactor
spec:
  replicas: 1
  selector:
    matchLabels:
      app: thanos-compactor
  serviceName: thanos-compactor
  template:
    metadata:
      labels:
        app: thanos-compactor
    spec:
      containers:
      - name: thanos
        image: thanosio/thanos:main-2022-09-22-ff9ee9ac
        args:
        - "compact"
        - "--log.level=debug"
        - "--data-dir=/data"
        - "--objstore.config-file=/etc/secret/objectstorage.yaml"
        - "--wait"
        ports:
        - name: http
          containerPort: 10902
        livenessProbe:
          httpGet:
            port: 10902
            path: /-/healthy
          initialDelaySeconds: 10
        readinessProbe:
          httpGet:
            port: 10902
            path: /-/ready
          initialDelaySeconds: 15
        volumeMounts:
        - name: object-storage-config
          mountPath: /etc/secret
          readOnly: false
      volumes:
      - name: object-storage-config
        secret:
          secretName: thanos-storage-oss
```

Compactor default policy:

- Create 5m downsampled data for blocks spanning more than 40 hours

- Create 1h downsampled data for blocks spanning more than 10 days

Arguments:

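- --wait: without it, the program exits once its compaction and downsampling run has finished; this flag keeps it running

- --retention.resolution-5m: retention time for 5m downsampled data (recommended: leave unset or set to 0s to keep it forever)

- --retention.resolution-1h: retention time for 1h downsampled data (recommended: leave unset or set to 0s to keep it forever)

- --retention.resolution-raw: retention time for raw data; if set while downsampling is enabled, it must be longer than the 40-hour downsampling threshold (recommended: leave unset or set to 0s to keep it forever)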
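If you do want the compactor to delete old data, the retention flags go next to `--wait` in the compactor's args. The durations below are purely illustrative. `0d` keeps a resolution forever. The raw value stays above the 40-hour threshold, so downsampling still has raw blocks to work from.

```yaml
# thanos-compactor.yaml (fragment): args with example retention per resolution
args:
  - "compact"
  - "--log.level=debug"
  - "--data-dir=/data"
  - "--objstore.config-file=/etc/secret/objectstorage.yaml"
  - "--wait"
  - "--retention.resolution-raw=30d"   # example value
  - "--retention.resolution-5m=90d"    # example value
  - "--retention.resolution-1h=0d"     # 0d: keep 1h-resolution data forever
```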

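Note: the compactor also compacts indexes, including merging multiple blocks from the same Prometheus instance into one. It tells Prometheus instances apart by their external label set, so make sure the external labels of the Prometheus servers in your clusters are unique~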
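Concretely, the value of the `cluster` label is what keeps the three clusters apart. Within one cluster, prometheus-operator additionally adds a `prometheus_replica` external label per pod, which is the label the querier deduplicates on. The sketch below shows the fragment for the third cluster, cluster-oa, which is otherwise identical to the manifest applied on cluster-bi.

```yaml
# cluster-oa: prometheus-prometheus.yaml (fragment)
spec:
  externalLabels:
    env: prod
    cluster: cluster-oa      # must differ from cluster-pms and cluster-bi
```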

Grafana dashboard adjustments

Adjust the network policy

Expose the Grafana Service in the central cluster cluster-pms (I use a NodePort). To reach it from outside the cluster, the network policy needs adjusting:

[root@cluster-pms-master manifests]# cat grafana-networkPolicy.yaml

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  labels:
    app.kubernetes.io/component: grafana
    app.kubernetes.io/name: grafana
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 8.5.5
  name: grafana
  namespace: monitoring
spec:
  egress:
  - {}
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app.kubernetes.io/name: prometheus
    ports:
    - port: 3000
      protocol: TCP
  # added: allow the internal VPC range
  - from:
    - ipBlock:
        cidr: 172.24.80.0/20
    ports:
    - port: 3000
      protocol: TCP
  podSelector:
    matchLabels:
      app.kubernetes.io/component: grafana
      app.kubernetes.io/name: grafana
      app.kubernetes.io/part-of: kube-prometheus
      # added label, used to allow access from the public network
      public-network: "true"
  policyTypes:
  - Egress
  - Ingress
```

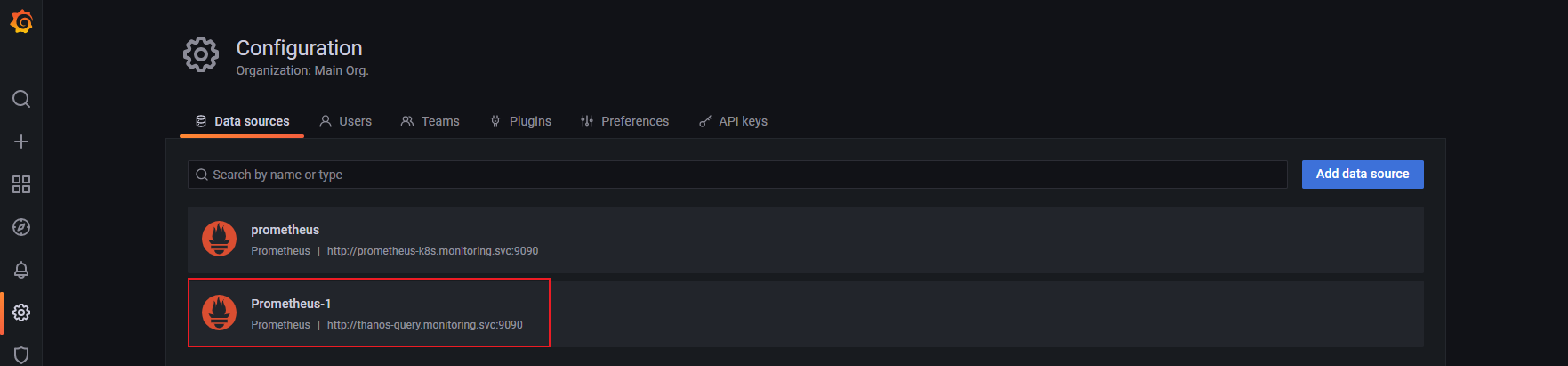

Add the Thanos querier as a data source

[root@cluster-pms-master manifests]# kubectl get svc -n monitoring | grep quer

thanos-query NodePort 10.96.28.40 <none> 9090:30909/TCP 16h

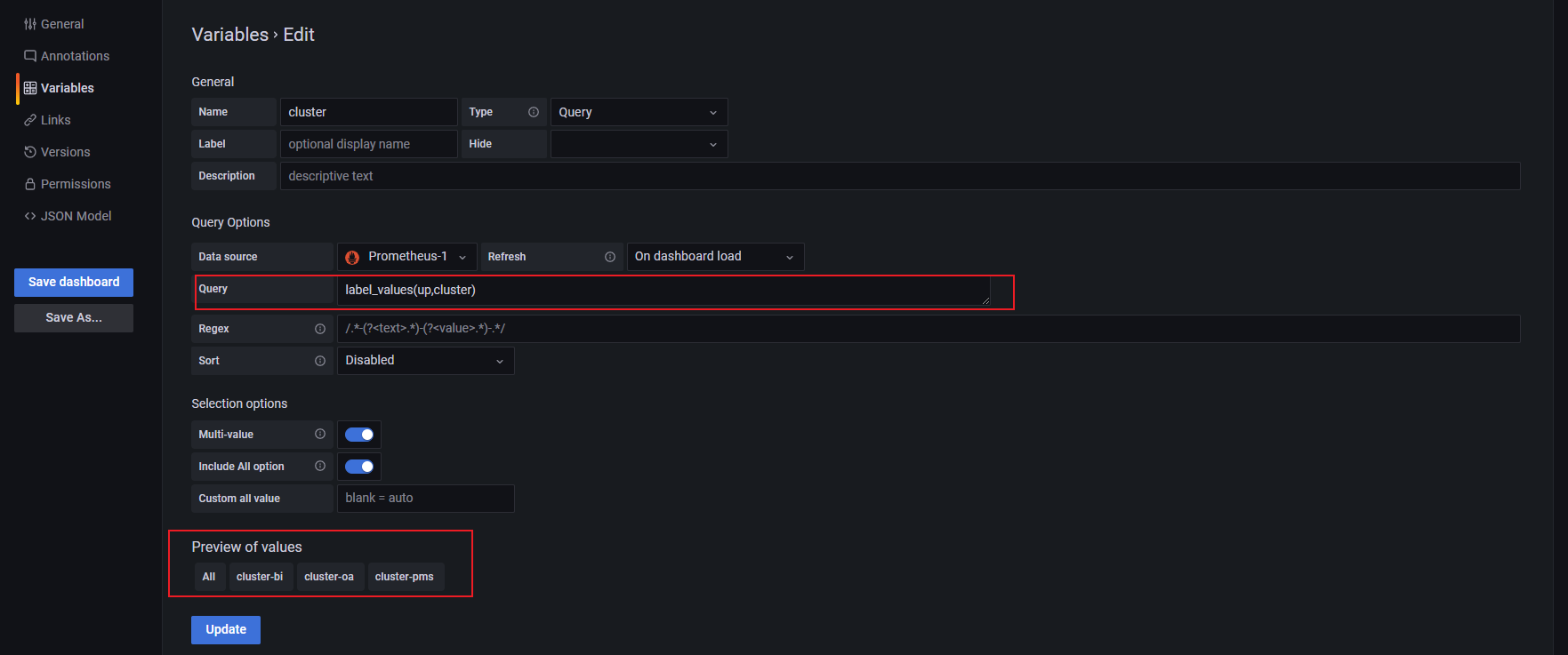
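The data source can also be provisioned instead of clicked together in the UI. In kube-prometheus, Grafana's data sources come from the `datasources.yaml` key of the `grafana-datasources` Secret created during the deployment above. The entry below is a sketch of an extra data source pointing at the querier Service. The data source name is arbitrary.

```yaml
# datasources.yaml inside the grafana-datasources Secret (fragment)
apiVersion: 1
datasources:
  - name: thanos-query               # arbitrary display name
    type: prometheus                 # the querier speaks the Prometheus HTTP API
    access: proxy
    url: http://thanos-query.monitoring.svc:9090
    editable: false
```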

Add a cluster variable

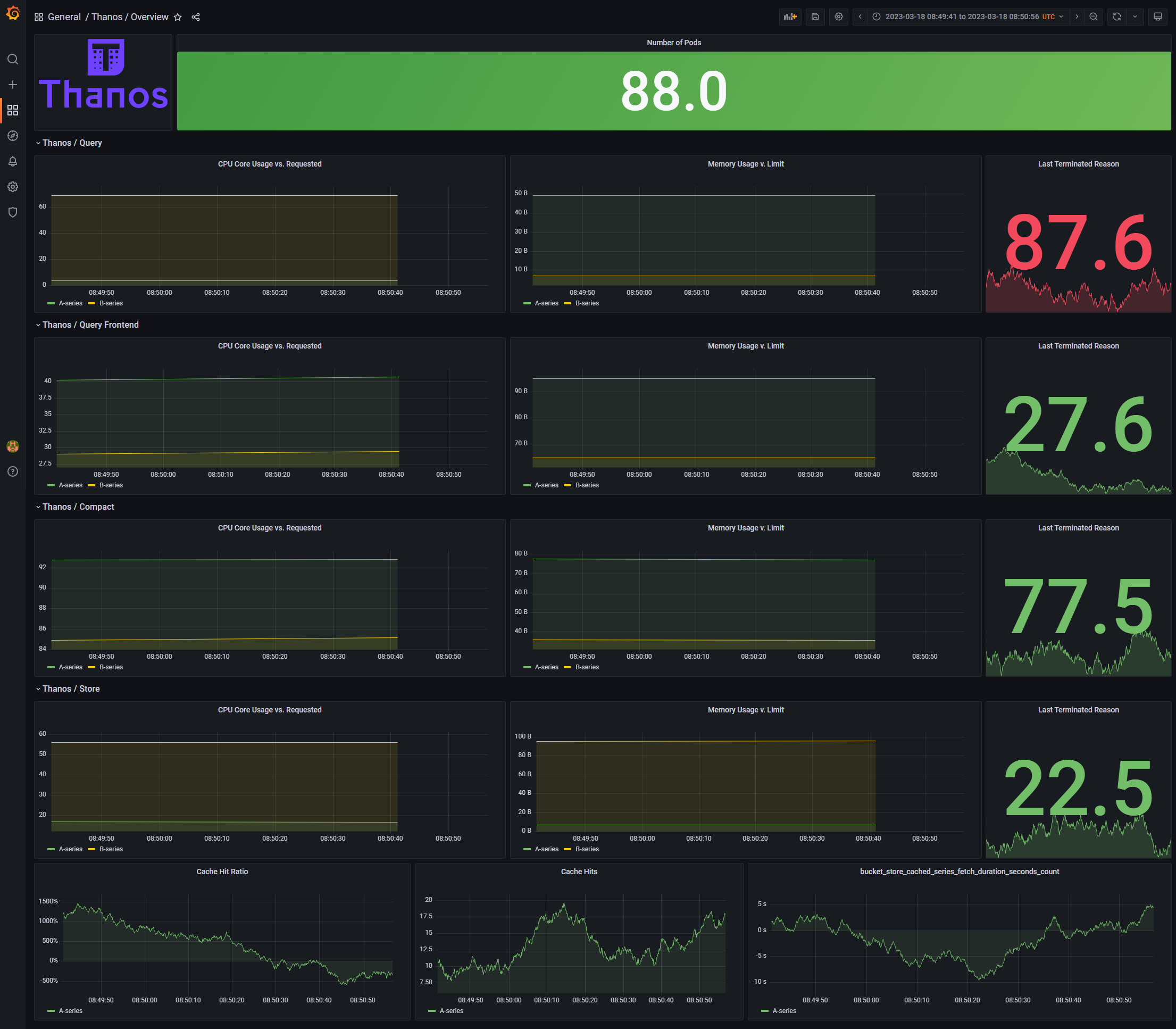

Sample dashboard

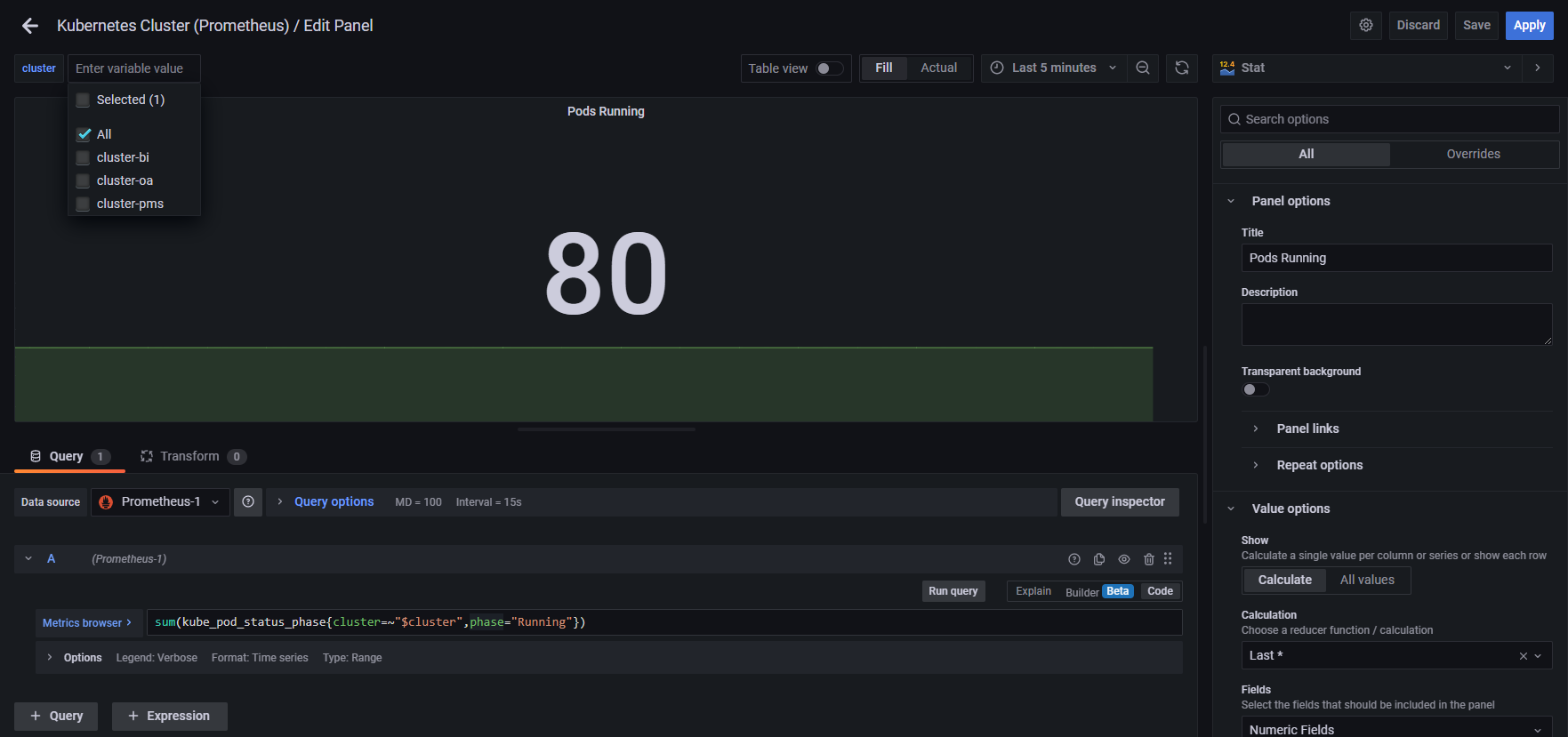

Building the remaining panels is up to you and your business needs, for example:

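- Number of running pods across all clusters

- All deployments

- Total number of nodes across all clusters

- Total CPU cores and memory across all clusters

- Number of clusters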

Example: number of running pods across all clusters

Thanos dashboard ID: 12937

References:

- sidecar docs: https://thanos.io/v0.28/components/sidecar.md/

- querier docs: https://thanos.io/v0.28/components/query.md/

- object storage docs: https://thanos.io/v0.28/thanos/storage.md/

- store gateway docs: https://thanos.io/v0.28/components/store.md/

- compactor docs: https://thanos.io/v0.28/components/compact.md/
