
Deployment is the most commonly used workload controller. It is typically used to deploy, upgrade, scale, and roll back applications.

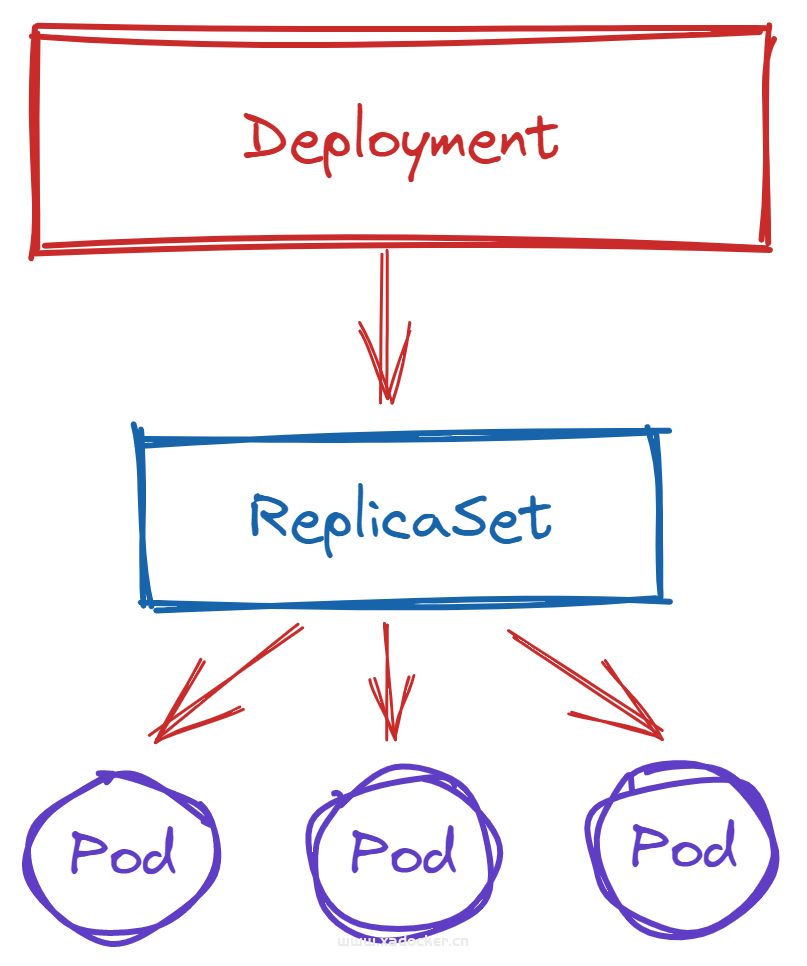

A Deployment does not manage Pods directly; it manages ReplicaSets, which in turn manage the Pods.

A simple Deployment example

[root@master ingress]# cat >nginx-deployment.yaml<<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx1
  labels:
    app: nginx1
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx1
  template:
    metadata:
      labels:
        app: nginx1
    spec:
      initContainers:
        - name: init-container
          image: busybox:latest
          imagePullPolicy: IfNotPresent
          command: ["sh"]
          env:
            # - name: MY_POD_NAME
            #   valueFrom:
            #     fieldRef:
            #       fieldPath: metadata.name
            - name: MY_POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          args: ["-c", "echo ${HOSTNAME} ${MY_POD_IP} > /wwwroot/index.html"]
          volumeMounts:
            - name: wwwroot
              mountPath: "/wwwroot"
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
              protocol: TCP
          volumeMounts:
            - name: wwwroot
              mountPath: /usr/share/nginx/html/index.html
              subPath: index.html
      volumes:
        - name: wwwroot
          emptyDir: {}
EOF

[root@master ingress]# kubectl apply -f nginx-deployment.yaml

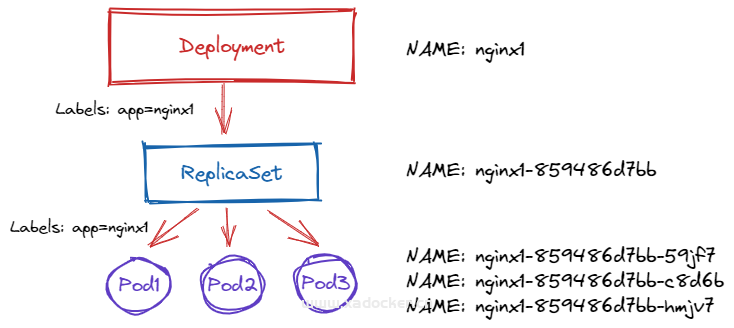

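deployment.apps/nginx1 unchanged

Inspect the resources and note how the Deployment, ReplicaSet, and Pod names relate: the ReplicaSet name is the Deployment name followed by a unique hash, and each Pod name is the ReplicaSet name followed by another unique suffix.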
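The naming pattern described above can be sketched with plain shell parameter expansion. This is only an illustration: the helper name `split_pod_name` is hypothetical, and it assumes the Pod belongs to a Deployment-managed ReplicaSet whose name was not truncated.

```shell
# Split a Pod name of the form <deployment>-<rs-hash>-<pod-suffix>.
# split_pod_name is a hypothetical helper, not part of kubectl.
split_pod_name() {
  local pod="$1"
  local rs="${pod%-*}"        # strip the Pod suffix  -> ReplicaSet name
  local deploy="${rs%-*}"     # strip the RS hash     -> Deployment name
  printf '%s %s %s\n' "$deploy" "$rs" "${pod##*-}"
}

# Pod name taken from the listing in this article.
split_pod_name nginx1-859486d7bb-59jf7
# prints: nginx1 nginx1-859486d7bb 59jf7
```

The same split works for Deployment names that themselves contain hyphens, because only the last two hyphen-separated fields are removed.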

# List the Deployment

[root@master ingress]# kubectl get deployment -l app=nginx1

NAME READY UP-TO-DATE AVAILABLE AGE

nginx1 3/3 3 3 10d

# List the ReplicaSet

[root@master ingress]# kubectl get rs -l app=nginx1

NAME DESIRED CURRENT READY AGE

nginx1-859486d7bb 3 3 3 10d

# List the Pods

[root@master ingress]# kubectl get pods -l app=nginx1

NAME READY STATUS RESTARTS AGE

nginx1-859486d7bb-59jf7 1/1 Running 0 116m

nginx1-859486d7bb-c8d6b 1/1 Running 0 116m

nginx1-859486d7bb-hmjv7 1/1 Running 0 116m

Scaling

Method 1

# Scale from the command line

[root@master ingress]# kubectl scale deploy nginx1 --replicas=4

deployment.apps/nginx1 scaled

[root@master ingress]# kubectl get pods -l app=nginx1

NAME READY STATUS RESTARTS AGE

nginx1-859486d7bb-59jf7 1/1 Running 0 116m

nginx1-859486d7bb-c8d6b 1/1 Running 0 116m

nginx1-859486d7bb-hmjv7 1/1 Running 0 116m

nginx1-859486d7bb-t5hgj 0/1 PodInitializing 0 2s

Method 2

# Scale by changing the replica count in the manifest and re-applying it

[root@master ingress]# sed 's/replicas: 3/replicas: 5/g' -i nginx-deployment.yaml

[root@master ingress]# kubectl apply -f nginx-deployment.yaml

deployment.apps/nginx1 configured

[root@master ingress]# kubectl get pods -l app=nginx1

NAME READY STATUS RESTARTS AGE

nginx1-859486d7bb-59jf7 1/1 Running 0 118m

nginx1-859486d7bb-c8d6b 1/1 Running 0 119m

nginx1-859486d7bb-gg2w5 1/1 Running 0 8s

nginx1-859486d7bb-hmjv7 1/1 Running 0 118m

nginx1-859486d7bb-t5hgj 1/1 Running 0 2m15s

Method 3

# Edit the live resource: find the replicas field, change the count, then save and exit

[root@master ingress]# kubectl edit deploy nginx1

deployment.apps/nginx1 edited

[root@master ingress]# kubectl get pods -l app=nginx1

NAME READY STATUS RESTARTS AGE

nginx1-859486d7bb-59jf7 1/1 Running 0 121m

nginx1-859486d7bb-bsj7x 1/1 Running 0 60s

nginx1-859486d7bb-c8d6b 1/1 Running 0 121m

nginx1-859486d7bb-gg2w5 1/1 Running 0 2m25s

nginx1-859486d7bb-hmjv7 1/1 Running 0 121m

nginx1-859486d7bb-t5hgj 1/1 Running 0 4m32s

Version management

A Deployment supports two update strategies, selected through the strategy field:

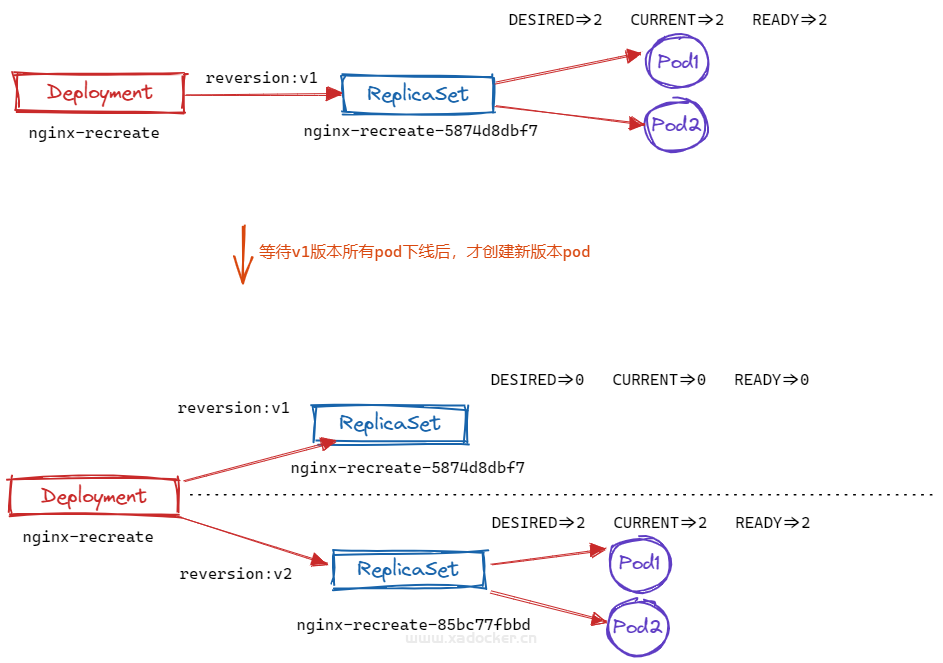

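- Recreate: all old-version Pods are killed first, and only then are the new-version Pods created, so the application is unavailable in between.

- RollingUpdate (the default strategy): old-version Pods are replaced by new-version Pods in batches, so both versions run side by side during the update. Two fields bound the size of each batch:

  - maxUnavailable: the maximum number of Pods that may be unavailable during the update. Defaults to 25%; a percentage is rounded down to an absolute number.

  - maxSurge: the maximum number of Pods that may exist above the desired replica count during the update. Defaults to 25%; a percentage is rounded up to an absolute number.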
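To make the two percentages concrete, the following sketch resolves them to absolute Pod counts the way the rounding rules require: up for maxSurge, down for maxUnavailable. The function name `resolve_percent` is an illustrative assumption, and the replica count of 8 matches the rolling-update example used later in this article.

```shell
# resolve_percent REPLICAS PERCENT MODE -> absolute Pod count
# MODE "up" rounds up (maxSurge); any other MODE rounds down (maxUnavailable).
# resolve_percent is a hypothetical helper written for this illustration.
resolve_percent() {
  local replicas="$1" percent="$2" mode="$3"
  if [ "$mode" = "up" ]; then
    echo $(( (replicas * percent + 99) / 100 ))
  else
    echo $(( replicas * percent / 100 ))
  fi
}

replicas=8
surge=$(resolve_percent "$replicas" 25 up)          # 2
unavailable=$(resolve_percent "$replicas" 25 down)  # 2
echo "max pods during update: $(( replicas + surge ))"        # 10
echo "min available pods:     $(( replicas - unavailable ))"  # 6
```

With 3 replicas the same defaults resolve to a surge of 1 and an unavailable count of 0, so a small Deployment is updated one Pod at a time without dropping below its desired size.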

Update tests

Recreate update

[root@master deploy]# cat >deployment-recreate.yaml<<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-recreate
  labels:
    app: nginx-recreate
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-recreate
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nginx-recreate
    spec:
      initContainers:
        - name: init-container
          image: busybox:latest
          imagePullPolicy: IfNotPresent
          command: ["sh"]
          env:
            # - name: MY_POD_NAME
            #   valueFrom:
            #     fieldRef:
            #       fieldPath: metadata.name
            - name: MY_POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          args: ["-c", "echo ${HOSTNAME} ${MY_POD_IP} > /wwwroot/index.html"]
          volumeMounts:
            - name: wwwroot
              mountPath: "/wwwroot"
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
              protocol: TCP
          volumeMounts:
            - name: wwwroot
              mountPath: /usr/share/nginx/html/index.html
              subPath: index.html
      volumes:
        - name: wwwroot
          emptyDir: {}
EOF

[root@master deploy]# kubectl apply -f deployment-recreate.yaml

deployment.apps/nginx-recreate created

Check the resource status

[root@master deploy]# kubectl get deployment -l app=nginx-recreate -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

nginx-recreate 2/2 2 2 21s nginx nginx:latest app=nginx-recreate

[root@master deploy]# kubectl get rs -l app=nginx-recreate -o wide

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

nginx-recreate-5874d8dbf7 2 2 2 28s nginx nginx:latest app=nginx-recreate,pod-template-hash=5874d8dbf7

[root@master deploy]# kubectl get pods -l app=nginx-recreate -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-recreate-5874d8dbf7-7wxjf 1/1 Running 0 35s 10.100.104.46 node2 <none> <none>

nginx-recreate-5874d8dbf7-ss7qr 1/1 Running 0 35s 10.100.166.158 node1 <none> <none>

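Testing the Recreate update

Watch the ReplicaSet status: the v2 Pods are created only after the number of v1 Pods has dropped to 0.

# Simulate a new release by changing the image tag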

[root@master deploy]# sed 's/nginx:latest/nginx:1.14.2/g' -i deployment-recreate.yaml

[root@master deploy]# kubectl apply -f deployment-recreate.yaml

deployment.apps/nginx-recreate configured

[root@master deploy]# kubectl get deploy -o wide -l app=nginx-recreate

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

nginx-recreate 2/2 2 2 20m nginx nginx:1.14.2 app=nginx-recreate

[root@master deploy]# kubectl get rs -o wide -l app=nginx-recreate

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

nginx-recreate-5874d8dbf7 0 0 0 21m nginx nginx:latest app=nginx-recreate,pod-template-hash=5874d8dbf7

nginx-recreate-85bc77fbbd 2 2 2 19m nginx nginx:1.14.2 app=nginx-recreate,pod-template-hash=85bc77fbbd

[root@master deploy]# kubectl get pods -o wide -l app=nginx-recreate

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-recreate-85bc77fbbd-7498k 1/1 Running 0 20m 10.100.166.159 node1 <none> <none>

nginx-recreate-85bc77fbbd-h9brd 1/1 Running 0 20m 10.100.104.47 node2 <none> <none>

# Watch the changes from a second terminal (started before the change is applied)

[root@master deploy]# kubectl get pods -l app=nginx-recreate -o wide -w

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-recreate-5874d8dbf7-ss7qr 1/1 Running 0 7s 10.100.166.158 node1 <none> <none>

nginx-recreate-5874d8dbf7-7wxjf 1/1 Running 0 7s 10.100.104.46 node2 <none> <none>

nginx-recreate-5874d8dbf7-7wxjf 1/1 Terminating 0 58s 10.100.104.46 node2 <none> <none>

nginx-recreate-5874d8dbf7-ss7qr 1/1 Terminating 0 58s 10.100.166.158 node1 <none> <none>

nginx-recreate-5874d8dbf7-ss7qr 0/1 Terminating 0 58s 10.100.166.158 node1 <none> <none>

nginx-recreate-5874d8dbf7-7wxjf 0/1 Terminating 0 59s 10.100.104.46 node2 <none> <none>

nginx-recreate-5874d8dbf7-ss7qr 0/1 Terminating 0 64s 10.100.166.158 node1 <none> <none>

nginx-recreate-5874d8dbf7-ss7qr 0/1 Terminating 0 64s 10.100.166.158 node1 <none> <none>

nginx-recreate-5874d8dbf7-7wxjf 0/1 Terminating 0 66s 10.100.104.46 node2 <none> <none>

nginx-recreate-5874d8dbf7-7wxjf 0/1 Terminating 0 66s 10.100.104.46 node2 <none> <none>

nginx-recreate-85bc77fbbd-7498k 0/1 Pending 0 0s <none> <none> <none> <none>

nginx-recreate-85bc77fbbd-h9brd 0/1 Pending 0 0s <none> <none> <none> <none>

nginx-recreate-85bc77fbbd-7498k 0/1 Pending 0 0s <none> node1 <none> <none>

nginx-recreate-85bc77fbbd-h9brd 0/1 Pending 0 0s <none> node2 <none> <none>

nginx-recreate-85bc77fbbd-7498k 0/1 Init:0/1 0 0s <none> node1 <none> <none>

nginx-recreate-85bc77fbbd-h9brd 0/1 Init:0/1 0 0s <none> node2 <none> <none>

nginx-recreate-85bc77fbbd-7498k 0/1 Init:0/1 0 1s <none> node1 <none> <none>

nginx-recreate-85bc77fbbd-h9brd 0/1 Init:0/1 0 1s <none> node2 <none> <none>

nginx-recreate-85bc77fbbd-h9brd 0/1 PodInitializing 0 2s 10.100.104.47 node2 <none> <none>

nginx-recreate-85bc77fbbd-7498k 0/1 PodInitializing 0 2s 10.100.166.159 node1 <none> <none>

nginx-recreate-85bc77fbbd-h9brd 1/1 Running 0 7s 10.100.104.47 node2 <none> <none>

nginx-recreate-85bc77fbbd-7498k 1/1 Running 0 7s 10.100.166.159 node1 <none> <none>

Rolling update

[root@master deploy]# cat >deployment-rollupdate.yml<<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-rollupdate
  labels:
    app: nginx-rollupdate
spec:
  replicas: 8
  selector:
    matchLabels:
      app: nginx-rollupdate
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 25%
      maxSurge: 25%
  template:
    metadata:
      labels:
        app: nginx-rollupdate
    spec:
      initContainers:
        - name: init-container
          image: busybox:latest
          imagePullPolicy: IfNotPresent
          command: ["sh"]
          env:
            # - name: MY_POD_NAME
            #   valueFrom:
            #     fieldRef:
            #       fieldPath: metadata.name
            - name: MY_POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          args: ["-c", "echo ${HOSTNAME} ${MY_POD_IP} > /wwwroot/index.html"]
          volumeMounts:
            - name: wwwroot
              mountPath: "/wwwroot"
      containers:
        - name: nginx
          image: nginx:1.14.2
          ports:
            - containerPort: 80
              protocol: TCP
          volumeMounts:
            - name: wwwroot
              mountPath: /usr/share/nginx/html/index.html
              subPath: index.html
      volumes:
        - name: wwwroot
          emptyDir: {}
EOF

Create the resource and check its status

[root@master deploy]# kubectl apply -f deployment-rollupdate.yml

deployment.apps/nginx-rollupdate created

[root@master deploy]# kubectl get -f deployment-rollupdate.yml

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-rollupdate 8/8 8 8 8s

[root@master deploy]# kubectl get deployment,rs,pods -l app=nginx-rollupdate -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/nginx-rollupdate 8/8 8 8 3m15s nginx nginx:1.14.2 app=nginx-rollupdate

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/nginx-rollupdate-58b64cfcc9 8 8 8 3m15s nginx nginx:1.14.2 app=nginx-rollupdate,pod-template-hash=58b64cfcc9

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/nginx-rollupdate-58b64cfcc9-4wn6f 1/1 Running 0 3m15s 10.100.104.48 node2 <none> <none>

pod/nginx-rollupdate-58b64cfcc9-fdq6t 1/1 Running 0 3m15s 10.100.104.49 node2 <none> <none>

pod/nginx-rollupdate-58b64cfcc9-gbn8n 1/1 Running 0 3m15s 10.100.166.163 node1 <none> <none>

pod/nginx-rollupdate-58b64cfcc9-l88zh 1/1 Running 0 3m15s 10.100.166.161 node1 <none> <none>

pod/nginx-rollupdate-58b64cfcc9-ljlgk 1/1 Running 0 3m15s 10.100.166.162 node1 <none> <none>

pod/nginx-rollupdate-58b64cfcc9-nf4wx 1/1 Running 0 3m15s 10.100.166.160 node1 <none> <none>

pod/nginx-rollupdate-58b64cfcc9-tf7hc 1/1 Running 0 3m15s 10.100.104.16 node2 <none> <none>

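pod/nginx-rollupdate-58b64cfcc9-z5zc6 1/1 Running 0 3m15s 10.100.104.50 node2 <none> <none>

Testing the rolling update

# Before making the change, open a terminal to watch the ReplicaSets

[root@master deploy]# kubectl get rs -w -l app=nginx-rollupdate

# Before making the change, open another terminal to watch the Pods

[root@master deploy]# kubectl get pods -w -l app=nginx-rollupdate

# Apply the change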

[root@master deploy]# sed 's/nginx:1.14.2/nginx:latest/g' deployment-rollupdate.yml -i

[root@master deploy]# kubectl apply -f deployment-rollupdate.yml

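deployment.apps/nginx-rollupdate configured

ReplicaSet changes during the update

The desired count of the new ReplicaSet goes from 0 to 2 while the desired count of the old ReplicaSet drops from 8 to 6, and the two sides keep stepping up and down in this way. Throughout the update, the number of Pods counted by the two ReplicaSets (v1 plus v2) stays within 125% of the desired replica count, which is 10 Pods here; this cap is set by maxSurge. Early on, the old ReplicaSet may already have been told to scale down while its Pods have not yet been released and the new ReplicaSet has already created Pods. At the end, DESIRED, CURRENT, and READY all converge on 8 for the new ReplicaSet and 0 for the old one.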
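As a quick sanity check of the cap, the sketch below sums the DESIRED values of the old and new ReplicaSets at several points in the watch output and compares each sum with the limit of 10. The helper `check_surge` is hypothetical, and the pairs were copied by hand from the DESIRED column of the transcript that follows.

```shell
# check_surge LIMIT "old new" ... -> prints "ok" if every old+new sum is within LIMIT.
# check_surge is a hypothetical helper written for this illustration.
check_surge() {
  local limit="$1" pair old new
  shift
  for pair in "$@"; do
    old="${pair% *}"   # value before the space: old ReplicaSet DESIRED
    new="${pair#* }"   # value after the space:  new ReplicaSet DESIRED
    if [ $(( ${old} + ${new} )) -gt "$limit" ]; then
      echo "violation: $old + $new > $limit"
      return 1
    fi
  done
  echo "ok"
}

# (old DESIRED, new DESIRED) pairs taken from the rollout transcript.
check_surge 10 "8 2" "6 4" "5 5" "4 6" "3 7" "2 8" "1 8" "0 8"
# prints: ok
```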

# Watch the DESIRED, CURRENT, and READY columns of the ReplicaSets

[root@master deploy]# kubectl get rs -w -l app=nginx-rollupdate

NAME DESIRED CURRENT READY AGE

nginx-rollupdate-58b64cfcc9 8 8 8 5m41s

nginx-rollupdate-6b75c74c9d 2 0 0 0s

nginx-rollupdate-58b64cfcc9 6 8 8 6m16s

nginx-rollupdate-6b75c74c9d 4 0 0 0s

nginx-rollupdate-58b64cfcc9 6 8 8 6m16s

nginx-rollupdate-58b64cfcc9 6 6 6 6m16s

nginx-rollupdate-6b75c74c9d 4 0 0 0s

nginx-rollupdate-6b75c74c9d 4 2 0 0s

nginx-rollupdate-6b75c74c9d 4 4 0 0s

nginx-rollupdate-6b75c74c9d 4 4 1 2s

nginx-rollupdate-58b64cfcc9 5 6 6 6m18s

nginx-rollupdate-6b75c74c9d 4 4 2 2s

nginx-rollupdate-58b64cfcc9 5 6 6 6m18s

nginx-rollupdate-6b75c74c9d 5 4 2 2s

nginx-rollupdate-58b64cfcc9 5 5 5 6m18s

nginx-rollupdate-6b75c74c9d 5 4 2 2s

nginx-rollupdate-58b64cfcc9 4 5 5 6m18s

nginx-rollupdate-6b75c74c9d 6 4 2 2s

nginx-rollupdate-58b64cfcc9 4 5 5 6m18s

nginx-rollupdate-6b75c74c9d 6 5 2 2s

nginx-rollupdate-58b64cfcc9 4 4 4 6m18s

nginx-rollupdate-6b75c74c9d 6 6 2 2s

nginx-rollupdate-6b75c74c9d 6 6 3 3s

nginx-rollupdate-58b64cfcc9 3 4 4 6m19s

nginx-rollupdate-6b75c74c9d 7 6 3 3s

nginx-rollupdate-58b64cfcc9 3 4 4 6m19s

nginx-rollupdate-58b64cfcc9 3 3 3 6m19s

nginx-rollupdate-6b75c74c9d 7 6 3 3s

nginx-rollupdate-6b75c74c9d 7 7 3 3s

nginx-rollupdate-6b75c74c9d 7 7 4 4s

nginx-rollupdate-58b64cfcc9 2 3 3 6m20s

nginx-rollupdate-6b75c74c9d 8 7 4 4s

nginx-rollupdate-58b64cfcc9 2 3 3 6m20s

nginx-rollupdate-6b75c74c9d 8 7 4 4s

nginx-rollupdate-58b64cfcc9 2 2 2 6m20s

nginx-rollupdate-6b75c74c9d 8 8 4 4s

nginx-rollupdate-6b75c74c9d 8 8 5 5s

nginx-rollupdate-58b64cfcc9 1 2 2 6m21s

nginx-rollupdate-58b64cfcc9 1 2 2 6m21s

nginx-rollupdate-58b64cfcc9 1 1 1 6m21s

nginx-rollupdate-6b75c74c9d 8 8 6 7s

nginx-rollupdate-58b64cfcc9 0 1 1 6m23s

nginx-rollupdate-58b64cfcc9 0 1 1 6m23s

nginx-rollupdate-58b64cfcc9 0 0 0 6m23s

nginx-rollupdate-6b75c74c9d 8 8 7 7s

nginx-rollupdate-6b75c74c9d 8 8 8 8s

Pod changes during the update

[root@master deploy]# kubectl get pods -w -l app=nginx-rollupdate

NAME READY STATUS RESTARTS AGE

nginx-rollupdate-58b64cfcc9-4wn6f 1/1 Running 0 6m1s

nginx-rollupdate-58b64cfcc9-fdq6t 1/1 Running 0 6m1s

nginx-rollupdate-58b64cfcc9-gbn8n 1/1 Running 0 6m1s

nginx-rollupdate-58b64cfcc9-l88zh 1/1 Running 0 6m1s

nginx-rollupdate-58b64cfcc9-ljlgk 1/1 Running 0 6m1s

nginx-rollupdate-58b64cfcc9-nf4wx 1/1 Running 0 6m1s

nginx-rollupdate-58b64cfcc9-tf7hc 1/1 Running 0 6m1s

nginx-rollupdate-58b64cfcc9-z5zc6 1/1 Running 0 6m1s

nginx-rollupdate-6b75c74c9d-jdwt4 0/1 Pending 0 0s

nginx-rollupdate-6b75c74c9d-82cjn 0/1 Pending 0 0s

nginx-rollupdate-6b75c74c9d-jdwt4 0/1 Pending 0 0s

nginx-rollupdate-58b64cfcc9-ljlgk 1/1 Terminating 0 6m16s

nginx-rollupdate-58b64cfcc9-gbn8n 1/1 Terminating 0 6m16s

nginx-rollupdate-6b75c74c9d-82cjn 0/1 Pending 0 0s

nginx-rollupdate-6b75c74c9d-dvm2h 0/1 Pending 0 0s

nginx-rollupdate-6b75c74c9d-jdwt4 0/1 Init:0/1 0 0s

nginx-rollupdate-6b75c74c9d-hd4w7 0/1 Pending 0 0s

nginx-rollupdate-6b75c74c9d-82cjn 0/1 Init:0/1 0 0s

nginx-rollupdate-6b75c74c9d-dvm2h 0/1 Pending 0 0s

nginx-rollupdate-6b75c74c9d-hd4w7 0/1 Pending 0 0s

nginx-rollupdate-6b75c74c9d-dvm2h 0/1 Init:0/1 0 0s

nginx-rollupdate-6b75c74c9d-hd4w7 0/1 Init:0/1 0 0s

nginx-rollupdate-6b75c74c9d-82cjn 0/1 Init:0/1 0 1s

nginx-rollupdate-6b75c74c9d-hd4w7 0/1 Init:0/1 0 1s

nginx-rollupdate-6b75c74c9d-jdwt4 0/1 Init:0/1 0 1s

nginx-rollupdate-6b75c74c9d-dvm2h 0/1 Init:0/1 0 1s

nginx-rollupdate-6b75c74c9d-82cjn 0/1 Init:0/1 0 1s

nginx-rollupdate-6b75c74c9d-dvm2h 0/1 PodInitializing 0 1s

nginx-rollupdate-6b75c74c9d-jdwt4 0/1 PodInitializing 0 1s

nginx-rollupdate-58b64cfcc9-gbn8n 0/1 Terminating 0 6m17s

nginx-rollupdate-58b64cfcc9-ljlgk 0/1 Terminating 0 6m17s

nginx-rollupdate-6b75c74c9d-hd4w7 0/1 PodInitializing 0 2s

nginx-rollupdate-6b75c74c9d-82cjn 0/1 PodInitializing 0 2s

nginx-rollupdate-6b75c74c9d-dvm2h 1/1 Running 0 2s

nginx-rollupdate-6b75c74c9d-jdwt4 1/1 Running 0 2s

nginx-rollupdate-58b64cfcc9-z5zc6 1/1 Terminating 0 6m18s

nginx-rollupdate-6b75c74c9d-vswpg 0/1 Pending 0 0s

nginx-rollupdate-6b75c74c9d-vswpg 0/1 Pending 0 0s

nginx-rollupdate-58b64cfcc9-4wn6f 1/1 Terminating 0 6m18s

nginx-rollupdate-6b75c74c9d-cj4pf 0/1 Pending 0 0s

nginx-rollupdate-6b75c74c9d-vswpg 0/1 Init:0/1 0 0s

nginx-rollupdate-6b75c74c9d-cj4pf 0/1 Pending 0 0s

nginx-rollupdate-6b75c74c9d-cj4pf 0/1 Init:0/1 0 1s

nginx-rollupdate-6b75c74c9d-cj4pf 0/1 Init:0/1 0 1s

nginx-rollupdate-6b75c74c9d-vswpg 0/1 Init:0/1 0 1s

nginx-rollupdate-6b75c74c9d-vswpg 0/1 PodInitializing 0 1s

nginx-rollupdate-58b64cfcc9-z5zc6 0/1 Terminating 0 6m19s

nginx-rollupdate-6b75c74c9d-hd4w7 1/1 Running 0 3s

nginx-rollupdate-58b64cfcc9-nf4wx 1/1 Terminating 0 6m19s

nginx-rollupdate-6b75c74c9d-v67c6 0/1 Pending 0 0s

nginx-rollupdate-6b75c74c9d-v67c6 0/1 Pending 0 0s

nginx-rollupdate-58b64cfcc9-4wn6f 0/1 Terminating 0 6m19s

nginx-rollupdate-58b64cfcc9-gbn8n 0/1 Terminating 0 6m19s

nginx-rollupdate-58b64cfcc9-gbn8n 0/1 Terminating 0 6m19s

nginx-rollupdate-6b75c74c9d-82cjn 1/1 Running 0 4s

nginx-rollupdate-58b64cfcc9-l88zh 1/1 Terminating 0 6m20s

nginx-rollupdate-6b75c74c9d-ml6kr 0/1 Pending 0 0s

nginx-rollupdate-6b75c74c9d-ml6kr 0/1 Pending 0 0s

nginx-rollupdate-58b64cfcc9-ljlgk 0/1 Terminating 0 6m20s

nginx-rollupdate-58b64cfcc9-ljlgk 0/1 Terminating 0 6m20s

nginx-rollupdate-6b75c74c9d-v67c6 0/1 Pending 0 1s

nginx-rollupdate-6b75c74c9d-ml6kr 0/1 Init:0/1 0 0s

nginx-rollupdate-6b75c74c9d-ml6kr 0/1 Init:0/1 0 0s

nginx-rollupdate-6b75c74c9d-v67c6 0/1 Init:0/1 0 1s

nginx-rollupdate-58b64cfcc9-z5zc6 0/1 Terminating 0 6m21s

nginx-rollupdate-58b64cfcc9-z5zc6 0/1 Terminating 0 6m21s

nginx-rollupdate-6b75c74c9d-cj4pf 0/1 PodInitializing 0 3s

nginx-rollupdate-58b64cfcc9-4wn6f 0/1 Terminating 0 6m21s

nginx-rollupdate-58b64cfcc9-4wn6f 0/1 Terminating 0 6m21s

nginx-rollupdate-6b75c74c9d-vswpg 1/1 Running 0 3s

nginx-rollupdate-58b64cfcc9-tf7hc 1/1 Terminating 0 6m21s

nginx-rollupdate-58b64cfcc9-nf4wx 0/1 Terminating 0 6m22s

nginx-rollupdate-58b64cfcc9-nf4wx 0/1 Terminating 0 6m22s

nginx-rollupdate-58b64cfcc9-nf4wx 0/1 Terminating 0 6m22s

nginx-rollupdate-6b75c74c9d-ml6kr 0/1 PodInitializing 0 2s

nginx-rollupdate-58b64cfcc9-l88zh 0/1 Terminating 0 6m23s

nginx-rollupdate-58b64cfcc9-tf7hc 0/1 Terminating 0 6m23s

nginx-rollupdate-58b64cfcc9-l88zh 0/1 Terminating 0 6m23s

nginx-rollupdate-58b64cfcc9-l88zh 0/1 Terminating 0 6m23s

nginx-rollupdate-6b75c74c9d-ml6kr 1/1 Running 0 3s

nginx-rollupdate-58b64cfcc9-fdq6t 1/1 Terminating 0 6m23s

nginx-rollupdate-6b75c74c9d-cj4pf 1/1 Running 0 5s

nginx-rollupdate-6b75c74c9d-v67c6 0/1 PodInitializing 0 5s

nginx-rollupdate-58b64cfcc9-tf7hc 0/1 Terminating 0 6m24s

nginx-rollupdate-58b64cfcc9-tf7hc 0/1 Terminating 0 6m24s

nginx-rollupdate-6b75c74c9d-v67c6 1/1 Running 0 5s

nginx-rollupdate-58b64cfcc9-fdq6t 0/1 Terminating 0 6m24s

nginx-rollupdate-58b64cfcc9-fdq6t 0/1 Terminating 0 6m31s

nginx-rollupdate-58b64cfcc9-fdq6t 0/1 Terminating 0 6m31s

Rollback test

View the revision history

[root@master deploy]# kubectl rollout history deployment nginx-rollupdate

deployment.apps/nginx-rollupdate

REVISION CHANGE-CAUSE

1 <none>

2 <none>

Rolling back

# Roll back to revision 1

[root@master deploy]# kubectl rollout undo deployment nginx-rollupdate --to-revision=1

deployment.apps/nginx-rollupdate rolled back

[root@master deploy]# kubectl rollout status deployment nginx-rollupdate

Waiting for deployment "nginx-rollupdate" rollout to finish: 2 old replicas are pending termination...

Waiting for deployment "nginx-rollupdate" rollout to finish: 2 old replicas are pending termination...

Waiting for deployment "nginx-rollupdate" rollout to finish: 2 old replicas are pending termination...

Waiting for deployment "nginx-rollupdate" rollout to finish: 1 old replicas are pending termination...

Waiting for deployment "nginx-rollupdate" rollout to finish: 1 old replicas are pending termination...

Waiting for deployment "nginx-rollupdate" rollout to finish: 1 old replicas are pending termination...

Waiting for deployment "nginx-rollupdate" rollout to finish: 6 of 8 updated replicas are available...

Waiting for deployment "nginx-rollupdate" rollout to finish: 7 of 8 updated replicas are available...

deployment "nginx-rollupdate" successfully rolled out

[root@master deploy]# kubectl get rs -w -l app=nginx-rollupdate

NAME DESIRED CURRENT READY AGE

nginx-rollupdate-58b64cfcc9 0 0 0 22m

nginx-rollupdate-6b75c74c9d 8 8 8 16m

nginx-rollupdate-58b64cfcc9 0 0 0 23m

nginx-rollupdate-58b64cfcc9 2 0 0 23m

nginx-rollupdate-6b75c74c9d 6 8 8 16m

nginx-rollupdate-6b75c74c9d 6 8 8 16m

nginx-rollupdate-58b64cfcc9 2 0 0 23m

nginx-rollupdate-58b64cfcc9 2 2 0 23m

nginx-rollupdate-58b64cfcc9 4 2 0 23m

nginx-rollupdate-6b75c74c9d 6 6 6 16m

nginx-rollupdate-58b64cfcc9 4 2 0 23m

nginx-rollupdate-58b64cfcc9 4 4 0 23m

nginx-rollupdate-58b64cfcc9 4 4 1 23m

nginx-rollupdate-6b75c74c9d 5 6 6 16m

nginx-rollupdate-6b75c74c9d 5 6 6 16m

nginx-rollupdate-58b64cfcc9 5 4 1 23m

nginx-rollupdate-6b75c74c9d 5 5 5 16m

nginx-rollupdate-58b64cfcc9 5 4 2 23m

nginx-rollupdate-6b75c74c9d 4 5 5 16m

nginx-rollupdate-58b64cfcc9 5 5 2 23m

nginx-rollupdate-6b75c74c9d 4 5 5 16m

nginx-rollupdate-6b75c74c9d 4 4 4 16m

nginx-rollupdate-58b64cfcc9 6 5 2 23m

nginx-rollupdate-58b64cfcc9 6 5 2 23m

nginx-rollupdate-58b64cfcc9 6 6 2 23m

nginx-rollupdate-58b64cfcc9 6 6 3 23m

nginx-rollupdate-6b75c74c9d 3 4 4 16m

nginx-rollupdate-6b75c74c9d 3 4 4 16m

nginx-rollupdate-58b64cfcc9 7 6 3 23m

nginx-rollupdate-58b64cfcc9 7 6 3 23m

nginx-rollupdate-58b64cfcc9 7 7 3 23m

nginx-rollupdate-6b75c74c9d 3 3 3 16m

nginx-rollupdate-58b64cfcc9 7 7 4 23m

nginx-rollupdate-6b75c74c9d 2 3 3 16m

nginx-rollupdate-58b64cfcc9 8 7 4 23m

nginx-rollupdate-58b64cfcc9 8 7 4 23m

nginx-rollupdate-6b75c74c9d 2 3 3 16m

nginx-rollupdate-58b64cfcc9 8 8 4 23m

nginx-rollupdate-6b75c74c9d 2 2 2 16m

nginx-rollupdate-58b64cfcc9 8 8 5 23m

nginx-rollupdate-6b75c74c9d 1 2 2 16m

nginx-rollupdate-6b75c74c9d 1 2 2 16m

nginx-rollupdate-6b75c74c9d 1 1 1 16m

nginx-rollupdate-58b64cfcc9 8 8 6 23m

nginx-rollupdate-6b75c74c9d 0 1 1 16m

nginx-rollupdate-6b75c74c9d 0 1 1 16m

nginx-rollupdate-6b75c74c9d 0 0 0 16m

nginx-rollupdate-58b64cfcc9 8 8 7 23m

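nginx-rollupdate-58b64cfcc9 8 8 8 23m

Restarting a Deployment

A restart is triggered with kubectl rollout restart deployment nginx-rollupdate, which replaces the Pods according to the update strategy. The command below only follows the rollout until it completes:

[root@master deploy]# kubectl rollout status deployment nginx-rollupdate -w

deployment "nginx-rollupdate" successfully rolled out

Common Deployment fields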

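apiVersion: apps/v1 # API version; must be one of the values listed by kubectl api-versions
kind: Deployment # kind of resource to create
metadata: # resource metadata
  name: demo # resource name; must be unique within the namespace
  namespace: default # namespace to deploy into
  labels: # labels on the resource
    app: demo
    version: stable
spec: # resource specification
  replicas: 1 # desired number of replicas
  revisionHistoryLimit: 3 # number of old revisions (ReplicaSets) to keep
  selector: # label selector
    matchLabels: # labels to match
      app: demo
      version: stable
  strategy: # update strategy
    rollingUpdate: # rolling-update parameters
      maxSurge: 30% # maximum number of extra Pods above the desired count; a percentage or an integer
      maxUnavailable: 30% # maximum number of Pods that may be unavailable during the update; a percentage or an integer
    type: RollingUpdate # use the rolling-update strategy
  template: # Pod template
    metadata: # Pod metadata
      annotations: # custom annotations
        sidecar.istio.io/inject: "false" # annotation key and value
      labels: # labels on the Pods
        app: demo
        version: stable
    spec: # Pod specification
      containers:
        - name: demo # container name
          image: demo:v1 # container image
          imagePullPolicy: IfNotPresent # image pull policy applied when a container starts: Always, Never, or IfNotPresent
          # Always: pull on every start; Never: never pull, use the local image only; IfNotPresent: pull only if the image is not present locally
          resources: # resource management
            limits: # upper bound
              cpu: 300m # CPU; 1 core = 1000m
              memory: 500Mi # memory; 1Gi = 1024Mi
            requests: # minimum resources reserved for the container; the scheduler uses these values to place the Pod
              cpu: 100m
              memory: 100Mi
          livenessProbe: # liveness check for the container
            httpGet: # HTTP GET check; a status code from 200 to 399 counts as healthy
              path: /healthCheck # request path
              port: 8080 # port
              scheme: HTTP # protocol
              # host: 127.0.0.1 # host address
            initialDelaySeconds: 30 # delay after container start before the first probe
            timeoutSeconds: 5 # probe timeout
            periodSeconds: 30 # interval between probes
            successThreshold: 1 # consecutive successes needed to count as healthy
            failureThreshold: 5 # after 5 consecutive failures the container is killed and restarted
          readinessProbe: # readiness check; decides whether the Pod receives traffic
            httpGet:
              path: /healthCheck
              port: 8080
              scheme: HTTP
            initialDelaySeconds: 30
            timeoutSeconds: 5
            periodSeconds: 10
            successThreshold: 1
            failureThreshold: 5
            # Alternative probe type:
            # exec: # run a command in the container; exit code 0 counts as healthy
            #   command:
            #     - cat
            #     - /tmp/health
            # Alternative probe type:
            # tcpSocket: # healthy if a TCP connection to the port can be opened
            #   port: number
          ports:
            - name: http # port name
              containerPort: 8080 # port the container exposes
              protocol: TCP # protocol
      imagePullSecrets: # secrets for pulling from the image registry
        - name: harbor-certification
      affinity: # affinity scheduling
        nodeAffinity: # node affinity
          requiredDuringSchedulingIgnoredDuringExecution: # the Pod must be scheduled onto a node that satisfies these terms
            nodeSelectorTerms: # a node qualifies if it satisfies any one of the terms
              - matchExpressions: # all expressions within a term must match
                  - key: beta.kubernetes.io/arch # deprecated label; kubernetes.io/arch is the stable equivalent
                    operator: In
                    values:
                      - amd64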
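The resource quantities in the sample use binary memory suffixes (Ki, Mi, Gi, each a factor of 1024) and CPU millicores (1 core = 1000m). As a rough sketch of what those units mean, the two hypothetical helpers below convert a quantity to bytes or millicores; they handle only the integer forms used in this article, not the full Kubernetes quantity syntax.

```shell
# mem_to_bytes QUANTITY -> bytes, for integer quantities with Ki/Mi/Gi suffixes.
# Hypothetical helper; decimal suffixes (K, M, G) and fractions are not handled.
mem_to_bytes() {
  local q="$1"
  case "$q" in
    *Ki) echo $(( ${q%Ki} * 1024 )) ;;
    *Mi) echo $(( ${q%Mi} * 1024 * 1024 )) ;;
    *Gi) echo $(( ${q%Gi} * 1024 * 1024 * 1024 )) ;;
    *)   echo "$q" ;;  # assumed to be a plain byte count already
  esac
}

# cpu_to_millicores QUANTITY -> millicores, for "300m" or whole cores like "1".
cpu_to_millicores() {
  local q="$1"
  case "$q" in
    *m) echo "${q%m}" ;;
    *)  echo $(( ${q} * 1000 )) ;;
  esac
}

mem_to_bytes 500Mi       # prints: 524288000
cpu_to_millicores 300m   # prints: 300
```

Under these rules the sample's limit of 500Mi is a little under half of 1Gi, and its CPU limit of 300m is three tenths of one core.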
