
Preface

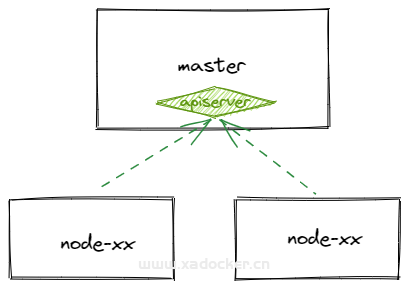

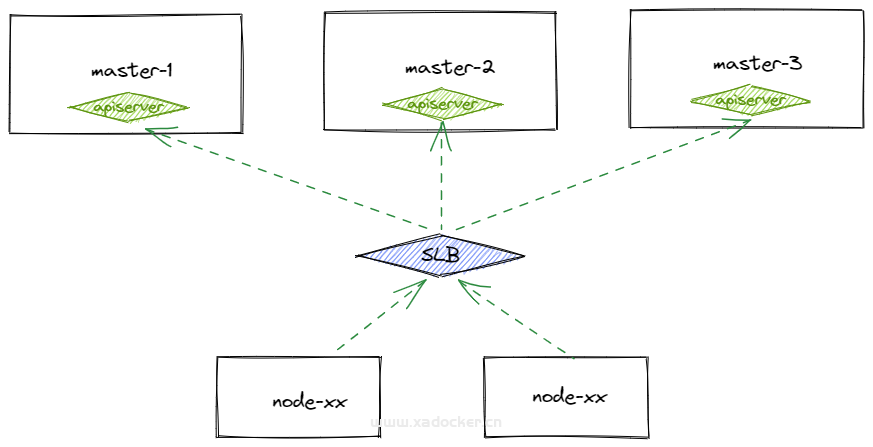

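I have recently been pushing Kubernetes adoption at my company. Our projects moved from plain cloud servers to Docker-based deployments a long time ago, and we brought in Kubernetes to manage those containers going forward. So far it runs only in the test environment, where it has to be maintained for a while before it goes to production. To keep costs down, the test environment is not highly available: it has a single master. This article walks through how to scale it out to multiple master nodes.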

The topology before and after the change:

# Current state

[root@node1 ~]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

node1 Ready master 22h v1.18.9 172.16.3.233 <none> CentOS Linux 7 (Core) 3.10.0-957.21.3.el7.x86_64 docker://19.3.8

node2 Ready <none> 22h v1.18.9 172.16.3.234 <none> CentOS Linux 7 (Core) 3.10.0-957.21.3.el7.x86_64 docker://19.3.8

node3 Ready <none> 22h v1.18.9 172.16.3.235 <none> CentOS Linux 7 (Core) 3.10.0-957.21.3.el7.x86_64 docker://19.3.8

node4 Ready <none> 22h v1.18.9 172.16.3.239 <none> CentOS Linux 7 (Core) 3.10.0-957.21.3.el7.x86_64 docker://19.3.8

node5 Ready <none> 22h v1.18.9 172.16.3.237 <none> CentOS Linux 7 (Core) 3.10.0-957.21.3.el7.x86_64 docker://19.3.8

node6 Ready <none> 22h v1.18.9 172.16.3.238 <none> CentOS Linux 7 (Core) 3.10.0-957.21.3.el7.x86_64 docker://19.3.8

[root@node1 ~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-5b8b769fcd-jdq7x 1/1 Running 0 22h 10.100.166.129 node1 <none> <none>

calico-node-4fhh9 1/1 Running 0 22h 172.16.3.235 node3 <none> <none>

calico-node-cz48n 1/1 Running 0 22h 172.16.3.233 node1 <none> <none>

calico-node-hk8g4 1/1 Running 0 22h 172.16.3.234 node2 <none> <none>

calico-node-ljqsc 1/1 Running 0 22h 172.16.3.239 node4 <none> <none>

calico-node-swqgx 1/1 Running 0 22h 172.16.3.238 node6 <none> <none>

calico-node-wnrz5 1/1 Running 0 22h 172.16.3.237 node5 <none> <none>

coredns-66db54ff7f-j2pc6 1/1 Running 0 22h 10.100.166.130 node1 <none> <none>

coredns-66db54ff7f-q75cz 1/1 Running 0 22h 10.100.166.131 node1 <none> <none>

etcd-node1 1/1 Running 0 22h 172.16.3.233 node1 <none> <none>

kube-apiserver-node1 1/1 Running 0 22h 172.16.3.233 node1 <none> <none>

kube-controller-manager-node1 1/1 Running 0 22h 172.16.3.233 node1 <none> <none>

kube-proxy-6z2l8 1/1 Running 0 22h 172.16.3.237 node5 <none> <none>

kube-proxy-8c6jf 1/1 Running 0 22h 172.16.3.238 node6 <none> <none>

kube-proxy-f2s82 1/1 Running 0 22h 172.16.3.239 node4 <none> <none>

kube-proxy-fdl4m 1/1 Running 0 22h 172.16.3.233 node1 <none> <none>

kube-proxy-hbvcb 1/1 Running 0 22h 172.16.3.235 node3 <none> <none>

kube-proxy-ldwnb 1/1 Running 0 22h 172.16.3.234 node2 <none> <none>

kube-scheduler-node1 1/1 Running 0 22h 172.16.3.233 node1 <none> <none>

metrics-server-5c5fc888ff-jn76t 1/1 Running 0 14h 10.100.3.68 node4 <none> <none>

metrics-server-5c5fc888ff-kffsv 1/1 Running 0 14h 10.100.33.132 node5 <none> <none>

metrics-server-5c5fc888ff-kwmc6 1/1 Running 0 14h 10.100.135.6 node3 <none> <none>

metrics-server-5c5fc888ff-wr566 1/1 Running 0 14h 10.100.104.14 node2 <none> <none>

nfs-client-provisioner-5d89dbb88-5t8dj 1/1 Running 0 22h 10.100.135.1 node3 <none> <none>

After the change, it looks roughly like this:

Preparing resources with Terraform

Create the resources

[root@node-nfs k8s-resource]# cat terraform.tf

terraform {

required_providers {

alicloud = {

source = "aliyun/alicloud"

#source = "local-registry/aliyun/alicloud"

}

}

}

provider "alicloud" {

access_key = "xxx"

secret_key = "xxx"

region = "cn-guangzhou"

}

data "alicloud_vpcs" "vpcs_ds" {

cidr_block = "172.16.0.0/12"

status = "Available"

name_regex = "^game-cluster-xxxx"

}

output "first_vpc_id" {

value = data.alicloud_vpcs.vpcs_ds.vpcs.0.id

}

resource "alicloud_vswitch" "k8s_master_vsw" {

vpc_id = data.alicloud_vpcs.vpcs_ds.vpcs.0.id

cidr_block = "172.16.8.0/21"

zone_id = "cn-guangzhou-b"

vswitch_name = "k8s-master"

}

resource "alicloud_vswitch" "slb_vsw" {

vpc_id = data.alicloud_vpcs.vpcs_ds.vpcs.0.id

cidr_block = "172.16.16.0/21"

zone_id = "cn-guangzhou-b"

vswitch_name = "api-server"

}

# Create the ECS instances

data "alicloud_security_groups" "default" {

name_regex = "^game-cluster-xxxx"

}

resource "alicloud_instance" "instance" {

count = 2

security_groups = data.alicloud_security_groups.default.ids

instance_type = "ecs.c6.xlarge"

instance_charge_type = "PrePaid"

period_unit = "Week"

period = 1

system_disk_category = "cloud_efficiency"

image_id = "centos_7_6_x64_20G_alibase_20201120.vhd"

instance_name = "k8s_master_node${count.index}"

vswitch_id = alicloud_vswitch.k8s_master_vsw.id

internet_max_bandwidth_out = 0

description = "this ecs is run for k8s master"

password = "xxxxxxxxxxxxxxxxx"

}

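data "alicloud_instances" "instances_ds" {

name_regex = "^k8s_master_node"

status = "Running"

# Wait for the ECS instances above; without this the data source may be read before they exist

depends_on = [alicloud_instance.instance]

}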

output "instance_ids" {

value = data.alicloud_instances.instances_ds.ids

}

# Create the SLB

resource "alicloud_slb" "instance" {

load_balancer_name = "api-server"

address_type = "intranet"

specification = "slb.s1.small"

vswitch_id = alicloud_vswitch.slb_vsw.id

internet_charge_type = "PayByTraffic"

instance_charge_type = "PostPaid"

period = 1

}

resource "alicloud_slb_server_group" "apiserver_6443_group" {

load_balancer_id = alicloud_slb.instance.id

name = "apiserver_6443"

servers {

server_ids = data.alicloud_instances.instances_ds.ids

port = 6443

weight = 100

}

}

resource "alicloud_slb_listener" "ingress_80" {

load_balancer_id = alicloud_slb.instance.id

backend_port = 6443

frontend_port = 6443

protocol = "tcp"

bandwidth = -1

established_timeout = 900

scheduler = "rr"

server_group_id = alicloud_slb_server_group.apiserver_6443_group.id

}
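The config above carves two /21 VSwitches out of the 172.16.0.0/12 VPC: 172.16.8.0/21 for the new masters and 172.16.16.0/21 for the SLB. Before applying, it can help to double-check which addresses land in which block. The following is a minimal POSIX-shell sketch, assuming 64-bit shell arithmetic; `ip_to_int` and `ip_in_cidr` are hypothetical helper names, not part of Terraform or the Alibaba Cloud provider.

```shell
#!/bin/sh
# ip_to_int A.B.C.D: print the IPv4 address as a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1            # split the dotted quad into $1..$4
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# ip_in_cidr IP NET/BITS: succeed (exit 0) if IP lies inside the CIDR block.
ip_in_cidr() {
  local net="${2%/*}" bits="${2#*/}" mask ip_i net_i
  # Keep the top BITS bits of a 32-bit value (relies on 64-bit shell arithmetic).
  mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
  ip_i=$(ip_to_int "$1")
  net_i=$(ip_to_int "$net")
  [ $(( ip_i & mask )) -eq $(( net_i & mask )) ]
}
```

For instance, `ip_in_cidr 172.16.22.116 172.16.16.0/21` succeeds for the SLB address that `terraform show` reports below, while `ip_in_cidr 172.16.3.233 172.16.8.0/21` fails, because the original master sits in a different VSwitch.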

Inspect the resources

[root@node-nfs k8s-resource]# terraform show

# alicloud_instance.instance[0]:

resource "alicloud_instance" "instance" {

auto_renew_period = 0

availability_zone = "cn-guangzhou-b"

deletion_protection = false

description = "this ecs is run for k8s master"

dry_run = false

force_delete = false

host_name = "iZxxx9k0bcq73pjg7h10pmZ"

id = "i-xxx9k0bcq73pjg7h10pm"

image_id = "centos_7_6_x64_20G_alibase_20201120.vhd"

include_data_disks = true

instance_charge_type = "PrePaid"

instance_name = "k8s_master_node0"

instance_type = "ecs.c6.xlarge"

internet_charge_type = "PayByTraffic"

internet_max_bandwidth_in = -1

internet_max_bandwidth_out = 0

password = (sensitive value)

period = 1

period_unit = "Week"

private_ip = "172.16.8.4"

renewal_status = "Normal"

secondary_private_ip_address_count = 0

secondary_private_ips = []

security_groups = [

"sg-xxxakygvknad8qyum0l2",

]

spot_price_limit = 0

spot_strategy = "NoSpot"

status = "Running"

subnet_id = "vsw-xxx0537ie76xn4qtkn5pb"

system_disk_category = "cloud_efficiency"

system_disk_size = 40

tags = {}

volume_tags = {}

vswitch_id = "vsw-xxx0537ie76xn4qtkn5pb"

}

# alicloud_instance.instance[1]:

resource "alicloud_instance" "instance" {

auto_renew_period = 0

availability_zone = "cn-guangzhou-b"

deletion_protection = false

description = "this ecs is run for k8s master"

dry_run = false

force_delete = false

host_name = "iZxxxd0r1on8nzfni1wvl2Z"

id = "i-xxxd0r1on8nzfni1wvl2"

image_id = "centos_7_6_x64_20G_alibase_20201120.vhd"

include_data_disks = true

instance_charge_type = "PrePaid"

instance_name = "k8s_master_node1"

instance_type = "ecs.c6.xlarge"

internet_charge_type = "PayByTraffic"

internet_max_bandwidth_in = -1

internet_max_bandwidth_out = 0

password = (sensitive value)

period = 1

period_unit = "Week"

private_ip = "172.16.8.5"

renewal_status = "Normal"

secondary_private_ip_address_count = 0

secondary_private_ips = []

security_groups = [

"sg-xxxakygvknad8qyum0l2",

]

spot_price_limit = 0

spot_strategy = "NoSpot"

status = "Running"

subnet_id = "vsw-xxx0537ie76xn4qtkn5pb"

system_disk_category = "cloud_efficiency"

system_disk_size = 40

tags = {}

volume_tags = {}

vswitch_id = "vsw-xxx0537ie76xn4qtkn5pb"

}

# alicloud_slb.instance:

resource "alicloud_slb" "instance" {

address = "172.16.22.116"

address_ip_version = "ipv4"

address_type = "intranet"

bandwidth = 5120

delete_protection = "off"

id = "lb-xxxny80oabmw3acpqf8gb"

instance_charge_type = "PostPaid"

internet_charge_type = "PayByTraffic"

load_balancer_name = "api-server"

load_balancer_spec = "slb.s1.small"

master_zone_id = "cn-guangzhou-b"

modification_protection_status = "NonProtection"

name = "api-server"

payment_type = "PayAsYouGo"

resource_group_id = "rg-acfm3fweu5akq6y"

slave_zone_id = "cn-guangzhou-a"

specification = "slb.s1.small"

status = "active"

tags = {}

vswitch_id = "vsw-xxxc2xcd1vp5e17uaemy6"

}

# alicloud_slb_listener.ingress_80:

resource "alicloud_slb_listener" "ingress_80" {

acl_status = "off"

backend_port = 6443

bandwidth = -1

established_timeout = 900

frontend_port = 6443

health_check = "on"

health_check_interval = 2

health_check_timeout = 5

health_check_type = "tcp"

healthy_threshold = 3

id = "lb-xxxny80oabmw3acpqf8gb:tcp:6443"

load_balancer_id = "lb-xxxny80oabmw3acpqf8gb"

persistence_timeout = 0

protocol = "tcp"

scheduler = "rr"

server_group_id = "rsp-xxxedoz1n0fuf"

unhealthy_threshold = 3

}

# alicloud_slb_server_group.apiserver_6443_group:

resource "alicloud_slb_server_group" "apiserver_6443_group" {

delete_protection_validation = false

id = "rsp-xxxedoz1n0fuf"

load_balancer_id = "lb-xxxny80oabmw3acpqf8gb"

name = "apiserver_6443"

servers {

port = 6443

server_ids = [

"i-xxxd0r1on8nzfni1wvl2",

"i-xxx9k0bcq73pjg7h10pm",

"i-xxxciu2i9pghitzjvfqd",

]

type = "ecs"

weight = 100

}

}

# alicloud_vswitch.k8s_master_vsw:

resource "alicloud_vswitch" "k8s_master_vsw" {

availability_zone = "cn-guangzhou-b"

cidr_block = "172.16.8.0/21"

id = "vsw-xxx0537ie76xn4qtkn5pb"

name = "k8s-master"

status = "Available"

tags = {}

vpc_id = "vpc-xxx8xhxni9b2fx43v7t79"

vswitch_name = "k8s-master"

zone_id = "cn-guangzhou-b"

}

# alicloud_vswitch.slb_vsw:

resource "alicloud_vswitch" "slb_vsw" {

availability_zone = "cn-guangzhou-b"

cidr_block = "172.16.16.0/21"

id = "vsw-xxxc2xcd1vp5e17uaemy6"

name = "api-server"

status = "Available"

tags = {}

vpc_id = "vpc-xxx8xhxni9b2fx43v7t79"

vswitch_name = "api-server"

zone_id = "cn-guangzhou-b"

}

# data.alicloud_instances.instances_ds:

data "alicloud_instances" "instances_ds" {

id = "506499456"

ids = [

"i-xxxd0r1on8nzfni1wvl2",

"i-xxx9k0bcq73pjg7h10pm",

"i-xxxciu2i9pghitzjvfqd",

]

instances = [

{

availability_zone = "cn-guangzhou-b"

creation_time = "2020-11-27T08:17Z"

description = "this ecs is run for k8s master"

disk_device_mappings = [

{

category = "cloud_efficiency"

device = "/dev/xvda"

size = 40

type = "system"

},

]

eip = ""

id = "i-xxxd0r1on8nzfni1wvl2"

image_id = "centos_7_6_x64_20G_alibase_20201120.vhd"

instance_charge_type = "PrePaid"

instance_type = "ecs.c6.xlarge"

internet_charge_type = "PayByTraffic"

internet_max_bandwidth_out = 0

key_name = ""

name = "k8s_master_node1"

private_ip = "172.16.8.5"

public_ip = ""

ram_role_name = ""

region_id = "cn-guangzhou"

resource_group_id = ""

security_groups = [

"sg-xxxakygvknad8qyum0l2",

]

spot_strategy = "NoSpot"

status = "Running"

tags = {}

vpc_id = "vpc-xxx8xhxni9b2fx43v7t79"

vswitch_id = "vsw-xxx0537ie76xn4qtkn5pb"

},

{

availability_zone = "cn-guangzhou-b"

creation_time = "2020-11-27T08:17Z"

description = "this ecs is run for k8s master"

disk_device_mappings = [

{

category = "cloud_efficiency"

device = "/dev/xvda"

size = 40

type = "system"

},

]

eip = ""

id = "i-xxx9k0bcq73pjg7h10pm"

image_id = "centos_7_6_x64_20G_alibase_20201120.vhd"

instance_charge_type = "PrePaid"

instance_type = "ecs.c6.xlarge"

internet_charge_type = "PayByTraffic"

internet_max_bandwidth_out = 0

key_name = ""

name = "k8s_master_node0"

private_ip = "172.16.8.4"

public_ip = ""

ram_role_name = ""

region_id = "cn-guangzhou"

resource_group_id = ""

security_groups = [

"sg-xxxakygvknad8qyum0l2",

]

spot_strategy = "NoSpot"

status = "Running"

tags = {}

vpc_id = "vpc-xxx8xhxni9b2fx43v7t79"

vswitch_id = "vsw-xxx0537ie76xn4qtkn5pb"

},

{

availability_zone = "cn-guangzhou-b"

creation_time = "2020-11-22T13:33Z"

description = ""

disk_device_mappings = [

{

category = "cloud_efficiency"

device = "/dev/xvda"

size = 120

type = "system"

},

]

eip = ""

id = "i-xxxciu2i9pghitzjvfqd"

image_id = "centos_7_6_x64_20G_alibase_20201120.vhd"

instance_charge_type = "PrePaid"

instance_type = "ecs.c6.2xlarge"

internet_charge_type = "PayByBandwidth"

internet_max_bandwidth_out = 0

key_name = ""

name = "k8s_master_node0"

private_ip = "172.16.3.233"

public_ip = ""

ram_role_name = ""

region_id = "cn-guangzhou"

resource_group_id = ""

security_groups = [

"sg-xxxakygvknad8qyum0l2",

]

spot_strategy = "NoSpot"

status = "Running"

tags = {}

vpc_id = "vpc-xxx8xhxni9b2fx43v7t79"

vswitch_id = "vsw-xxx9teaxnewvrrbbteona"

},

]

name_regex = "^k8s_master_node"

names = [

"k8s_master_node1",

"k8s_master_node0",

"k8s_master_node0",

]

status = "Running"

total_count = 9

}

# data.alicloud_security_groups.default:

data "alicloud_security_groups" "default" {

enable_details = true

groups = [

{

creation_time = "2020-11-12T07:25:26Z"

description = ""

id = "sg-xxxakygvknad8qyum0l2"

inner_access = true

name = "tf_test_foo"

resource_group_id = ""

security_group_type = "normal"

tags = {}

vpc_id = "vpc-xxx8xhxni9b2fx43v7t79"

},

]

id = "2620182046"

ids = [

"sg-xxxakygvknad8qyum0l2",

]

name_regex = "^tf_test_foo"

names = [

"tf_test_foo",

]

page_size = 50

total_count = 3

}

# data.alicloud_vpcs.vpcs_ds:

data "alicloud_vpcs" "vpcs_ds" {

cidr_block = "172.16.0.0/12"

enable_details = true

id = "3917272974"

ids = [

"vpc-xxx8xhxni9b2fx43v7t79",

"vpc-xxxjzh7cwbazphqrdvv0w",

]

name_regex = "^tf"

names = [

"tf_test_foo",

"tf_test_foo",

]

page_size = 50

status = "Available"

total_count = 3

vpcs = [

{

cidr_block = "172.16.0.0/12"

creation_time = "2020-11-12T07:25:21Z"

description = ""

id = "vpc-xxx8xhxni9b2fx43v7t79"

ipv6_cidr_block = ""

is_default = false

region_id = "cn-guangzhou"

resource_group_id = "rg-acfm3fweu5akq6y"

route_table_id = "vtb-xxxh7tx2h0ry77itn7t7b"

router_id = "vrt-xxxjux5gla6wddl8laniu"

secondary_cidr_blocks = []

status = "Available"

tags = {}

user_cidrs = []

vpc_id = "vpc-xxx8xhxni9b2fx43v7t79"

vpc_name = "tf_test_foo"

vrouter_id = "vrt-xxxjux5gla6wddl8laniu"

vswitch_ids = [

"vsw-xxxc2xcd1vp5e17uaemy6",

"vsw-xxx0537ie76xn4qtkn5pb",

"vsw-xxx9teaxnewvrrbbteona",

]

},

{

cidr_block = "172.16.0.0/12"

creation_time = "2020-11-12T06:16:21Z"

description = ""

id = "vpc-xxxjzh7cwbazphqrdvv0w"

ipv6_cidr_block = ""

is_default = false

region_id = "cn-guangzhou"

resource_group_id = "rg-acfm3fweu5akq6y"

route_table_id = "vtb-xxx7d55dzn93brz4a0c7m"

router_id = "vrt-xxxs0p2z91n3t2m12r1sh"

secondary_cidr_blocks = []

status = "Available"

tags = {}

user_cidrs = []

vpc_id = "vpc-xxxjzh7cwbazphqrdvv0w"

vpc_name = "tf_test_foo"

vrouter_id = "vrt-xxxs0p2z91n3t2m12r1sh"

vswitch_ids = [

"vsw-xxxu0p45oozkpks1cxuc3",

]

},

]

}

Outputs:

ecs_instance_ip = [

"172.16.8.5",

"172.16.8.4",

"172.16.3.233",

]

first_vpc_id = "vpc-xxx8xhxni9b2fx43v7t79"

instance_ids = [

"i-xxxd0r1on8nzfni1wvl2",

"i-xxx9k0bcq73pjg7h10pm",

"i-xxxciu2i9pghitzjvfqd",

]

slb_instance_ip = "172.16.22.116"

Deploying the new master nodes

Install docker/kubelet/kubeadm on the new master nodes
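The script below writes to /etc/hosts twice with a plain `>>` append: once for the local hostname and once for `apiserver.demo`. Re-running it stacks duplicate entries, and repointing `apiserver.demo` on a node where it may already resolve elsewhere (for example to the old master) needs a replace rather than an append. A minimal sketch of an idempotent alternative, assuming POSIX `sh` and `awk`; `set_hosts_entry` is a hypothetical helper name, not a system tool:

```shell
#!/bin/sh
# set_hosts_entry IP NAME [FILE]
# Make NAME resolve to IP in FILE (default /etc/hosts). NAME is first removed from
# every existing non-comment line (a line left with no hostnames is dropped), then a
# single "IP NAME" line is appended, so repeated runs converge on one entry.
set_hosts_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}" tmp
  tmp=$(mktemp) || return 1
  awk -v name="$name" '
    /^[ \t]*#/ { print; next }                    # keep comment lines untouched
    {
      hit = 0
      for (i = 2; i <= NF; i++) if ($i == name) hit = 1
      if (!hit) { print; next }                   # line does not mention NAME
      out = $1; kept = 0
      for (i = 2; i <= NF; i++) if ($i != name) { out = out " " $i; kept++ }
      if (kept > 0) print out                     # keep the other aliases on that line
    }' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  printf '%s %s\n' "$ip" "$name" >> "$tmp"
  # Overwrite in place so the file keeps its owner and permissions.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

Used in place of the two appends, this would be `set_hosts_entry 127.0.0.1 "$(hostname)"` and `set_hosts_entry 172.16.22.116 apiserver.demo`.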

echo "127.0.0.1 $(hostname)" >> /etc/hosts

yum remove -y docker docker-client docker-client-latest docker-ce-cli docker-common docker-latest docker-latest-logrotate docker-logrotate docker-selinux docker-engine-selinux docker-engine

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce-19.03.8 docker-ce-cli-19.03.8 containerd.io

systemctl enable docker

systemctl start docker

yum install -y nfs-utils

yum install -y wget

sed -i "s#^net.ipv4.ip_forward.*#net.ipv4.ip_forward=1#g" /etc/sysctl.conf

sed -i "s#^net.bridge.bridge-nf-call-ip6tables.*#net.bridge.bridge-nf-call-ip6tables=1#g" /etc/sysctl.conf

sed -i "s#^net.bridge.bridge-nf-call-iptables.*#net.bridge.bridge-nf-call-iptables=1#g" /etc/sysctl.conf

sed -i "s#^net.ipv6.conf.all.disable_ipv6.*#net.ipv6.conf.all.disable_ipv6=1#g" /etc/sysctl.conf

sed -i "s#^net.ipv6.conf.default.disable_ipv6.*#net.ipv6.conf.default.disable_ipv6=1#g" /etc/sysctl.conf

sed -i "s#^net.ipv6.conf.lo.disable_ipv6.*#net.ipv6.conf.lo.disable_ipv6=1#g" /etc/sysctl.conf

sed -i "s#^net.ipv6.conf.all.forwarding.*#net.ipv6.conf.all.forwarding=1#g" /etc/sysctl.conf

# The keys may be missing entirely, so append them as well

echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf

echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf

echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf

echo "net.ipv6.conf.all.disable_ipv6 = 1" >> /etc/sysctl.conf

echo "net.ipv6.conf.default.disable_ipv6 = 1" >> /etc/sysctl.conf

echo "net.ipv6.conf.lo.disable_ipv6 = 1" >> /etc/sysctl.conf

echo "net.ipv6.conf.all.forwarding = 1" >> /etc/sysctl.conf

# Apply the settings

sysctl -p
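The `sed` + `echo` pair above first rewrites keys that already exist and then appends them unconditionally, so each key ends up in /etc/sysctl.conf at least once and often twice. `sysctl -p` tolerates that (lines are applied in order, so the last value wins), but the file gets harder to audit with every re-run. A sketch of a set-or-append helper, assuming POSIX `sh` and `awk`; `set_sysctl` is a hypothetical name:

```shell
#!/bin/sh
# set_sysctl KEY VALUE [FILE]
# Rewrite the first "KEY = ..." line in FILE (default /etc/sysctl.conf) to "KEY = VALUE",
# drop any later duplicates of KEY, and append the line if KEY was not present at all.
set_sysctl() {
  local key="$1" value="$2" file="${3:-/etc/sysctl.conf}" tmp
  tmp=$(mktemp) || return 1
  awk -v key="$key" -v value="$value" '
    {
      line = $0
      sub(/^[ \t]+/, "", line)                    # ignore leading whitespace
      split(line, kv, "=")
      k = kv[1]
      sub(/[ \t]+$/, "", k)                       # key text before "=", trimmed
    }
    k == key { if (!found) print key " = " value; found = 1; next }
    { print }
    END { if (!found) print key " = " value }' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

Calling it once per key (for example `set_sysctl net.bridge.bridge-nf-call-iptables 1`) and then running a single `sysctl -p` replaces both the `sed` and the `echo` blocks.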

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

export k8s_version=1.18.9

yum install -y kubelet-${k8s_version} kubeadm-${k8s_version} kubectl-${k8s_version}

sed -i "s#^ExecStart=/usr/bin/dockerd.*#ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --exec-opt native.cgroupdriver=systemd#g" /usr/lib/systemd/system/docker.service

cat >/etc/docker/daemon.json<<EOF

{"registry-mirrors": ["https://registry.cn-hangzhou.aliyuncs.com"]}

EOF

systemctl daemon-reload

systemctl restart docker

systemctl enable kubelet && systemctl start kubelet

mkdir /etc/kubernetes/pki

# Point apiserver.demo at the SLB address

echo "172.16.22.116 apiserver.demo" >> /etc/hosts

Get the join command on the original master node

# Get the control-plane certificate key

[root@node1 ~]# kubeadm init phase upload-certs --upload-certs

I0527 18:37:10.193183 30327 version.go:252] remote version is much newer: v1.24.1; falling back to: stable-1.18

W0527 18:37:11.094478 30327 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

6436c2dbf54aa169142d4fb608fa2689f267b0dadfc639134a75a5d80ec310f5
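The certificate key is the last line of that output, a 64-character hex string. kubeadm deletes the uploaded `kubeadm-certs` Secret after two hours, so the key only works for that long and the command must be re-run if a master joins later. When scripting this step, the key can be pulled out of the output with something like the sketch below; `extract_cert_key` is a hypothetical helper that simply ignores every line that is not a bare 64-character hex string.

```shell
#!/bin/sh
# extract_cert_key: read the output of "kubeadm init phase upload-certs --upload-certs"
# on stdin and print the certificate key, i.e. the last line that consists of exactly
# 64 lowercase hex characters. Prints nothing if no such line is found.
extract_cert_key() {
  grep -E '^[0-9a-f]{64}$' | tail -n 1
}
```

Usage would look like `CERT_KEY=$(kubeadm init phase upload-certs --upload-certs | extract_cert_key)`, where `CERT_KEY` is just an illustrative variable name.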

# Get the node join command

[root@node1 ~]# kubeadm token create --print-join-command

W0527 18:39:03.701901 31996 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

kubeadm join apiserver.demo:6443 --token zg8w13.apstyginl2ab7th9 --discovery-token-ca-cert-hash sha256:d96330afa7840595c4648c72310e4e145eeb75165d28b50cbada4839f52e68bc

# Combine the output of the two commands above into the control-plane join command for the new master nodes

kubeadm join apiserver.demo:6443 --token zg8w13.apstyginl2ab7th9 --discovery-token-ca-cert-hash sha256:d96330afa7840595c4648c72310e4e145eeb75165d28b50cbada4839f52e68bc --control-plane --certificate-key 6436c2dbf54aa169142d4fb608fa2689f267b0dadfc639134a75a5d80ec310f5
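Assembling that command by hand means copying two long strings without a mistake. A small sketch that does the concatenation and rejects obviously malformed input; `make_cp_join` is a hypothetical helper, and it only checks the shape of its arguments, not whether they are still valid (a token from `kubeadm token create` expires after 24 hours by default, the certificate key after two hours).

```shell
#!/bin/sh
# make_cp_join JOIN_CMD CERT_KEY
# Append "--control-plane --certificate-key CERT_KEY" to the worker join command printed by
# "kubeadm token create --print-join-command". Returns non-zero on malformed input.
make_cp_join() {
  local join_cmd="$1" cert_key="$2"
  case "$join_cmd" in
    "kubeadm join "*) ;;
    *) echo "not a kubeadm join command: $join_cmd" >&2; return 1 ;;
  esac
  if ! printf '%s\n' "$cert_key" | grep -Eqx '[0-9a-f]{64}'; then
    echo "certificate key must be 64 lowercase hex characters" >&2
    return 1
  fi
  # Strip trailing whitespace that the printed join command may carry.
  join_cmd=$(printf '%s' "$join_cmd" | sed 's/[[:space:]]*$//')
  printf '%s --control-plane --certificate-key %s\n' "$join_cmd" "$cert_key"
}
```

For example: `make_cp_join "$(kubeadm token create --print-join-command)" "$CERT_KEY"`.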

Run the control-plane join command on the new master nodes

# New master node 1

[root@node8 ~]# kubeadm join apiserver.demo:6443 --token zg8w13.apstyginl2ab7th9 --discovery-token-ca-cert-hash sha256:d96330afa7840595c4648c72310e4e145eeb75165d28b50cbada4839f52e68bc --control-plane --certificate-key 6436c2dbf54aa169142d4fb608fa2689f267b0dadfc639134a75a5d80ec310f5

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks before initializing the new control plane instance

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [node8 localhost] and IPs [172.16.8.5 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [node8 localhost] and IPs [172.16.8.5 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [node8 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local apiserver.demo] and IPs [10.96.0.1 172.16.8.5]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[certs] Using the existing "sa" key

[kubeconfig] Generating kubeconfig files

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/admin.conf"

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

W0527 18:00:28.880162 18011 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

W0527 18:00:28.886583 18011 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-scheduler"

W0527 18:00:28.887383 18011 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[check-etcd] Checking that the etcd cluster is healthy

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.18" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

[etcd] Announced new etcd member joining to the existing etcd cluster

[etcd] Creating static Pod manifest for "etcd"

[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s

{"level":"warn","ts":"2022-05-27T18:00:40.845+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"passthrough:///https://172.16.8.5:2379","attempt":0,"error":"rpc error: code = DeadlineExceeded desc = context deadline exceeded"}

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[mark-control-plane] Marking the node node8 as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node node8 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

# New master node 2

[root@node9 ~]# kubeadm join apiserver.demo:6443 --token zg8w13.apstyginl2ab7th9 --discovery-token-ca-cert-hash sha256:d96330afa7840595c4648c72310e4e145eeb75165d28b50cbada4839f52e68bc --control-plane --certificate-key 6436c2dbf54aa169142d4fb608fa2689f267b0dadfc639134a75a5d80ec310f5

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks before initializing the new control plane instance

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [node9 localhost] and IPs [172.16.8.4 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [node9 localhost] and IPs [172.16.8.4 127.0.0.1 ::1]

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [node9 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local apiserver.demo] and IPs [10.96.0.1 172.16.8.4]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[certs] Using the existing "sa" key

[kubeconfig] Generating kubeconfig files

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/admin.conf"

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

W0527 18:10:57.489136 18637 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

W0527 18:10:57.495196 18637 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-scheduler"

W0527 18:10:57.495990 18637 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[check-etcd] Checking that the etcd cluster is healthy

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.18" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

[etcd] Announced new etcd member joining to the existing etcd cluster

[etcd] Creating static Pod manifest for "etcd"

[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s

{"level":"warn","ts":"2022-05-27T18:11:18.111+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"passthrough:///https://172.16.8.4:2379","attempt":0,"error":"rpc error: code = DeadlineExceeded desc = context deadline exceeded"}

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[mark-control-plane] Marking the node node9 as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node node9 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

Check the status of the master nodes

# The new master nodes are Ready

[root@node2 ~]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

node1 Ready master 27h v1.18.9 172.16.3.233 <none> CentOS Linux 7 (Core) 3.10.0-957.21.3.el7.x86_64 docker://19.3.8

node2 Ready <none> 26h v1.18.9 172.16.3.234 <none> CentOS Linux 7 (Core) 3.10.0-957.21.3.el7.x86_64 docker://19.3.8

node3 Ready <none> 26h v1.18.9 172.16.3.235 <none> CentOS Linux 7 (Core) 3.10.0-957.21.3.el7.x86_64 docker://19.3.8

node4 Ready <none> 26h v1.18.9 172.16.3.239 <none> CentOS Linux 7 (Core) 3.10.0-957.21.3.el7.x86_64 docker://19.3.8

node5 Ready <none> 26h v1.18.9 172.16.3.237 <none> CentOS Linux 7 (Core) 3.10.0-957.21.3.el7.x86_64 docker://19.3.8

node6 Ready <none> 26h v1.18.9 172.16.3.238 <none> CentOS Linux 7 (Core) 3.10.0-957.21.3.el7.x86_64 docker://19.3.8

node8 Ready master 60m v1.18.9 172.16.8.5 <none> CentOS Linux 7 (Core) 3.10.0-957.21.3.el7.x86_64 docker://19.3.8

node9 Ready master 49m v1.18.9 172.16.8.4 <none> CentOS Linux 7 (Core) 3.10.0-957.21.3.el7.x86_64 docker://19.3.8
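With node1, node8 and node9 all `Ready` with the `master` role, the stacked etcd has three members, so it keeps quorum (2 of 3) if any single master goes down. For a scripted post-join check, the listing can be filtered as below. This is a sketch that assumes the default column layout shown above (NAME, STATUS, ROLES, ...); `count_ready_masters` is a hypothetical name.

```shell
#!/bin/sh
# count_ready_masters: read "kubectl get nodes" output on stdin and print how many
# nodes are Ready and carry the master role. ROLES can be a comma-separated list
# (e.g. "control-plane,master" on newer releases), so each item is compared separately.
count_ready_masters() {
  awk '$2 == "Ready" {
         k = split($3, roles, ",")
         for (i = 1; i <= k; i++) if (roles[i] == "master") { n++; break }
       }
       END { print n + 0 }'
}
```

For example: `[ "$(kubectl get nodes --no-headers | count_ready_masters)" -ge 3 ] || echo "fewer than 3 Ready masters"`.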

# Each master node now runs its own etcd member; etcd runs in stacked mode, co-located with the other control-plane components

[root@node2 ~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-5b8b769fcd-n4tn6 1/1 Running 0 80m 10.100.104.16 node2 <none> <none>

calico-node-4fhh9 1/1 Running 0 27h 172.16.3.235 node3 <none> <none>

calico-node-9n8nc 1/1 Running 0 52m 172.16.8.4 node9 <none> <none>

calico-node-cz48n 1/1 Running 0 27h 172.16.3.233 node1 <none> <none>

calico-node-hk8g4 1/1 Running 0 27h 172.16.3.234 node2 <none> <none>

calico-node-jm4kb 1/1 Running 0 62m 172.16.8.5 node8 <none> <none>

calico-node-ljqsc 1/1 Running 0 26h 172.16.3.239 node4 <none> <none>

calico-node-swqgx 1/1 Running 0 26h 172.16.3.238 node6 <none> <none>

calico-node-wnrz5 1/1 Running 0 26h 172.16.3.237 node5 <none> <none>

coredns-66db54ff7f-2s26k 1/1 Running 0 80m 10.100.104.15 node2 <none> <none>

coredns-66db54ff7f-bw2tv 1/1 Running 0 80m 10.100.135.7 node3 <none> <none>

etcd-node1 1/1 Running 0 27h 172.16.3.233 node1 <none> <none>

etcd-node8 1/1 Running 0 62m 172.16.8.5 node8 <none> <none>

etcd-node9 1/1 Running 0 52m 172.16.8.4 node9 <none> <none>

kube-apiserver-node1 1/1 Running 1 27h 172.16.3.233 node1 <none> <none>

kube-apiserver-node8 1/1 Running 0 62m 172.16.8.5 node8 <none> <none>

kube-apiserver-node9 1/1 Running 0 52m 172.16.8.4 node9 <none> <none>

kube-controller-manager-node1 1/1 Running 1 27h 172.16.3.233 node1 <none> <none>

kube-controller-manager-node8 1/1 Running 0 62m 172.16.8.5 node8 <none> <none>

kube-controller-manager-node9 1/1 Running 0 52m 172.16.8.4 node9 <none> <none>

kube-proxy-4q2z5 1/1 Running 0 52m 172.16.8.4 node9 <none> <none>

kube-proxy-6z2l8 1/1 Running 0 26h 172.16.3.237 node5 <none> <none>

kube-proxy-8bmhg 1/1 Running 0 62m 172.16.8.5 node8 <none> <none>

kube-proxy-8c6jf 1/1 Running 0 26h 172.16.3.238 node6 <none> <none>

kube-proxy-f2s82 1/1 Running 0 26h 172.16.3.239 node4 <none> <none>

kube-proxy-fdl4m 1/1 Running 0 27h 172.16.3.233 node1 <none> <none>

kube-proxy-hbvcb 1/1 Running 0 27h 172.16.3.235 node3 <none> <none>

kube-proxy-ldwnb 1/1 Running 0 27h 172.16.3.234 node2 <none> <none>

kube-scheduler-node1 1/1 Running 1 27h 172.16.3.233 node1 <none> <none>

kube-scheduler-node8 1/1 Running 0 62m 172.16.8.5 node8 <none> <none>

kube-scheduler-node9 1/1 Running 0 52m 172.16.8.4 node9 <none> <none>

metrics-server-5c5fc888ff-jn76t 1/1 Running 0 18h 10.100.3.68 node4 <none> <none>

metrics-server-5c5fc888ff-kffsv 1/1 Running 0 18h 10.100.33.132 node5 <none> <none>

metrics-server-5c5fc888ff-kwmc6 1/1 Running 0 18h 10.100.135.6 node3 <none> <none>

metrics-server-5c5fc888ff-wr566 1/1 Running 0 18h 10.100.104.14 node2 <none> <none>

nfs-client-provisioner-5d89dbb88-5t8dj 1/1 Running 1 26h 10.100.135.1 node3 <none> <none>
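Each master should now run its own copy of every static control-plane Pod, named `<component>-<node>` (etcd-node8, kube-apiserver-node8, and so on). A sketch of a check over that listing, assuming the `-o wide` column layout shown above, where column 3 is STATUS and column 7 is NODE; `check_control_plane` is a hypothetical helper:

```shell
#!/bin/sh
# check_control_plane NODE...
# Read "kubectl get pods -n kube-system -o wide" output on stdin and verify that every
# listed NODE has a Running etcd, kube-apiserver, kube-controller-manager and
# kube-scheduler static Pod. Prints each missing Pod and returns 1 if any is missing.
check_control_plane() {
  awk -v nodes="$*" '
    BEGIN { split("etcd kube-apiserver kube-controller-manager kube-scheduler", comp, " ") }
    $3 == "Running" {
      for (i = 1; i <= 4; i++)
        if ($1 == (comp[i] "-" $7)) seen[comp[i], $7] = 1
    }
    END {
      n = split(nodes, list, " ")
      bad = 0
      for (j = 1; j <= n; j++)
        for (i = 1; i <= 4; i++)
          if (!((comp[i], list[j]) in seen)) {
            print "missing: " comp[i] "-" list[j]
            bad = 1
          }
      exit bad
    }'
}
```

For example: `kubectl get pods -n kube-system -o wide | check_control_plane node1 node8 node9`.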

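The topology at this point:

Note: with a layer-4 (TCP) SLB, a backend server cannot reach the services it hosts itself through that SLB; a request from a master to the SLB address fails whenever the SLB forwards it back to that same master.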
