
Ceph basic concepts

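Ceph currently offers several official ways to deploy a cluster:

- ceph-deploy: a cluster deployment automation tool. It has been in use for a long time, is mature and stable, and is integrated into many automation tools, but it is no longer actively maintained.

- cephadm: the new deployment tool introduced in Octopus. Nodes can be added through either the graphical dashboard or the command line.

- manual: deploy the cluster by hand, step by step. This allows the most customization and shows every deployment detail clearly, at the cost of being noticeably harder.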

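Core components

- RADOSGW: the object gateway daemon

- RADOS: the underlying storage cluster (Reliable Autonomic Distributed Object Store)

- Monitor: a RADOS component that maintains the global state of the whole Ceph cluster

- OSD: Object Storage Device, a RADOS component that stores the data; in practice each data disk gets one OSD daemon

- MDS: the Ceph metadata server, which stores metadata for the Ceph file system; block storage and object storage do not need it

- RBD: block storage

- CEPHFS: file storage

- LIBRADOS: the base library for interacting with RADOS; librados talks to RADOS over Ceph's native protocol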

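Deploying with cephadm

Cephadm deploys and manages a Ceph cluster by having the manager daemon connect to hosts over SSH to add, remove, or update Ceph daemon containers. It does not depend on external configuration or orchestration tools such as Ansible, Rook, or Salt.

Cephadm manages the entire lifecycle of a Ceph cluster. It first bootstraps a tiny Ceph cluster (one monitor and one manager) on a single node. It then automatically expands the cluster to multiple hosts and provisions all Ceph daemons and services. This can be done through the Ceph command-line interface (CLI) or the dashboard (GUI). Cephadm is new in Octopus v15.2.0 and does not support older Ceph releases.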

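Base resource planning

Terraform resource declarations

Initialize the environment

Initial configuration

# Set the hostname; run the matching command on each node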

hostnamectl set-hostname node1

hostnamectl set-hostname node2

hostnamectl set-hostname node3

# Configure hostname resolution in /etc/hosts

cat >> /etc/hosts <<EOF

172.16.8.22 node1

172.16.8.23 node2

172.16.8.24 node3

EOF
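Because `cat >> /etc/hosts` appends unconditionally, running this step twice leaves duplicate entries. A minimal idempotent sketch follows. `add_host_entry` is a hypothetical helper. It takes the file path as an argument, so it can be tried on a scratch file first. It only recognizes entries written in exactly the `IP NAME` form (one space), so lines formatted differently will not be detected as duplicates.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME
# Hypothetical helper: appends "IP NAME" to FILE unless that exact line is
# already present, so the step can be re-run safely.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # -q quiet, -x whole-line match, -F fixed string (dots are literal)
    if ! grep -qxF "$ip $name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Example, run as root on each node:
#   add_host_entry /etc/hosts 172.16.8.22 node1
#   add_host_entry /etc/hosts 172.16.8.23 node2
#   add_host_entry /etc/hosts 172.16.8.24 node3
```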

# Install python3

yum install python3 -y

Install Docker

# Remove any old Docker packages

yum remove -y docker \
    docker-client \
    docker-client-latest \
    docker-ce-cli \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-selinux \
    docker-engine-selinux \
    docker-engine

yum install -y yum-utils \
    device-mapper-persistent-data \
    lvm2

# Add the Docker CE repository (Aliyun mirror)

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Install Docker 19.03.8

yum install -y docker-ce-19.03.8 docker-ce-cli-19.03.8 containerd.io

# Configure a registry mirror to speed up Docker image pulls

mkdir -p /etc/docker

cat > /etc/docker/daemon.json <<EOF

{

"registry-mirrors": ["https://uyah70su.mirror.aliyuncs.com"]

}

EOF

systemctl daemon-reload

systemctl enable docker

systemctl restart docker

Install cephadm

# Only needs to be run on the first node

# Download the script directly from GitHub

[root@node1 ~]# curl https://raw.githubusercontent.com/ceph/ceph/v15.2.1/src/cephadm/cephadm -o cephadm

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 148k 100 148k 0 0 9751 0 0:00:15 0:00:15 --:--:-- 12398

[root@node1 ~]# chmod +x cephadm

# Add the Ceph (Octopus) package repository

[root@node1 ~]# ./cephadm add-repo --release octopus

INFO:root:Writing repo to /etc/yum.repos.d/ceph.repo...

INFO:cephadm:Enabling EPEL...

# Install cephadm as a system package

[root@node1 ~]# which cephadm

/usr/bin/which: no cephadm in (/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin)

[root@node1 ~]# ./cephadm install

INFO:cephadm:Installing packages ['cephadm']...

[root@node1 ~]# which cephadm

/usr/sbin/cephadm

# Check the version; the first run is slow because cephadm may need to pull the container image

[root@node1 ~]# cephadm version

ceph version 15.2.16 (d46a73d6d0a67a79558054a3a5a72cb561724974) octopus (stable)
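The download URL embeds a release tag (v15.2.1 here), while the packaged cephadm installed afterwards comes from the Octopus repository. You may prefer to pin the standalone script to a specific release. In that case, a small sketch can build the URL from the tag. `cephadm_url` is a hypothetical helper that only reuses the path layout of the URL above, and it assumes the requested tag exists in the ceph/ceph repository.

```shell
#!/usr/bin/env bash
# cephadm_url TAG
# Hypothetical helper: prints the raw GitHub URL of the standalone cephadm
# script for a release tag, using the same path layout as the v15.2.1 URL.
cephadm_url() {
    local tag="$1"
    # Accept both "15.2.16" and "v15.2.16"
    case "$tag" in
        v*) ;;
        *) tag="v${tag}" ;;
    esac
    printf 'https://raw.githubusercontent.com/ceph/ceph/%s/src/cephadm/cephadm\n' "$tag"
}

# Example:
#   curl -fsSL "$(cephadm_url 15.2.16)" -o cephadm && chmod +x cephadm
```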

Create a new cluster with cephadm

Bootstrap the cluster

# Create the first ceph-mon

[root@node1 ~]# mkdir -p /etc/ceph

# --mon-ip must be the node's IP address; a hostname is not accepted

[root@node1 ~]# cephadm bootstrap --mon-ip 172.16.8.22

Verifying podman|docker is present...

Verifying lvm2 is present...

Verifying time synchronization is in place...

Unit chronyd.service is enabled and running

Repeating the final host check...

podman|docker (/usr/bin/docker) is present

systemctl is present

lvcreate is present

Unit chronyd.service is enabled and running

Host looks OK

Cluster fsid: ceeaecf0-ea1c-11ec-a587-00163e0157e5

Verifying IP 172.16.8.22 port 3300 ...

Verifying IP 172.16.8.22 port 6789 ...

Mon IP 172.16.8.22 is in CIDR network 172.16.8.0/21

Pulling container image quay.io/ceph/ceph:v15...

Extracting ceph user uid/gid from container image...

Creating initial keys...

Creating initial monmap...

Creating mon...

Waiting for mon to start...

Waiting for mon...

mon is available

Assimilating anything we can from ceph.conf...

Generating new minimal ceph.conf...

Restarting the monitor...

Setting mon public_network...

Creating mgr...

Verifying port 9283 ...

Wrote keyring to /etc/ceph/ceph.client.admin.keyring

Wrote config to /etc/ceph/ceph.conf

Waiting for mgr to start...

Waiting for mgr...

mgr not available, waiting (1/10)...

mgr not available, waiting (2/10)...

mgr not available, waiting (3/10)...

mgr not available, waiting (4/10)...

mgr is available

Enabling cephadm module...

Waiting for the mgr to restart...

Waiting for Mgr epoch 5...

Mgr epoch 5 is available

Setting orchestrator backend to cephadm...

Generating ssh key...

Wrote public SSH key to to /etc/ceph/ceph.pub

Adding key to root@localhost's authorized_keys...

Adding host node1...

Deploying mon service with default placement...

Deploying mgr service with default placement...

Deploying crash service with default placement...

Enabling mgr prometheus module...

Deploying prometheus service with default placement...

Deploying grafana service with default placement...

Deploying node-exporter service with default placement...

Deploying alertmanager service with default placement...

Enabling the dashboard module...

Waiting for the mgr to restart...

Waiting for Mgr epoch 13...

Mgr epoch 13 is available

Generating a dashboard self-signed certificate...

Creating initial admin user...

Fetching dashboard port number...

Ceph Dashboard is now available at:

URL: https://iZ7xva6bpev0ne09dnfcgdZ:8443/

User: admin

Password: ok7udrdc7t

You can access the Ceph CLI with:

sudo /usr/sbin/cephadm shell --fsid ceeaecf0-ea1c-11ec-a587-00163e0157e5 -c /etc/ceph/ceph.conf -k /etc/ceph/ceph.client.admin.keyring

Please consider enabling telemetry to help improve Ceph:

ceph telemetry on

For more information see:

https://docs.ceph.com/docs/master/mgr/telemetry/

Bootstrap complete.
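Since `--mon-ip` only accepts an IP address, a quick pre-flight check before running `cephadm bootstrap` can catch a hostname or a typo early. This sketch assumes IPv4 only. `is_ipv4` is a hypothetical helper, not part of cephadm.

```shell
#!/usr/bin/env bash
# is_ipv4 ADDR
# Hypothetical pre-flight helper: succeeds only if ADDR is a dotted-quad
# IPv4 address with four numeric octets, each 0-255 and at most 3 digits.
is_ipv4() {
    printf '%s\n' "$1" | awk -F. '
        NF != 4 { exit 1 }
        {
            for (i = 1; i <= 4; i++)
                if ($i !~ /^[0-9]+$/ || length($i) > 3 || $i + 0 > 255)
                    exit 1
        }
    '
}

# Example:
#   MON_IP=172.16.8.22
#   is_ipv4 "$MON_IP" && cephadm bootstrap --mon-ip "$MON_IP"
```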

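# The Ceph dashboard is now reachable with the URL and credentials shown in the bootstrap output above

The bootstrap command does the following:

- Creates a monitor and a manager daemon for the new cluster on the local host.

- Generates a new SSH key for the Ceph cluster and adds it to the root user's /root/.ssh/authorized_keys file.

- Writes the minimal configuration file needed to communicate with the new cluster to /etc/ceph/ceph.conf.

- Writes a copy of the client.admin administrative (privileged!) secret key to /etc/ceph/ceph.client.admin.keyring.

- Writes a copy of the public key to /etc/ceph/ceph.pub.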
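After bootstrap, you can quickly check that the files listed above exist and that the admin keyring is readable only by its owner. A sketch follows. `check_bootstrap_files` is a hypothetical helper. It assumes GNU coreutils `stat` (as on CentOS) and expects mode 600 on the keyring.

```shell
#!/usr/bin/env bash
# check_bootstrap_files [DIR]
# Hypothetical post-bootstrap check: DIR (default /etc/ceph) must contain
# non-empty ceph.conf, ceph.client.admin.keyring and ceph.pub, and the
# admin keyring must have mode 600 (owner read/write only).
check_bootstrap_files() {
    local dir="${1:-/etc/ceph}" f mode missing=0
    for f in ceph.conf ceph.client.admin.keyring ceph.pub; do
        if [ ! -s "$dir/$f" ]; then
            echo "missing or empty: $dir/$f" >&2
            missing=1
        fi
    done
    [ "$missing" -eq 0 ] || return 1
    # GNU stat: %a prints the octal permission bits, e.g. 600
    mode=$(stat -c '%a' "$dir/ceph.client.admin.keyring")
    if [ "$mode" != 600 ]; then
        echo "admin keyring has mode $mode, expected 600" >&2
        return 1
    fi
}

# Example:
#   check_bootstrap_files && echo "bootstrap files look OK"
```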

Check the current cluster state

[root@node1 ~]# ll /etc/ceph/

total 12

-rw------- 1 root root 63 Jun 12 14:56 ceph.client.admin.keyring

-rw-r--r-- 1 root root 173 Jun 12 14:56 ceph.conf

-rw-r--r-- 1 root root 595 Jun 12 14:57 ceph.pub

# View the Ceph configuration file

[root@node1 ~]# cat /etc/ceph/ceph.conf

# minimal ceph.conf for ceeaecf0-ea1c-11ec-a587-00163e0157e5

[global]

fsid = ceeaecf0-ea1c-11ec-a587-00163e0157e5

mon_host = [v2:172.16.8.22:3300/0,v1:172.16.8.22:6789/0]

# List the Docker images

[root@node1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

quay.io/ceph/ceph v15 296d7fe77d4e 39 hours ago 1.12GB

quay.io/ceph/ceph-grafana 6.7.4 557c83e11646 10 months ago 486MB

quay.io/prometheus/prometheus v2.18.1 de242295e225 2 years ago 140MB

quay.io/prometheus/alertmanager v0.20.0 0881eb8f169f 2 years ago 52.1MB

quay.io/prometheus/node-exporter v0.18.1 e5a616e4b9cf 3 years ago 22.9MB

# List the Ceph cluster containers at this point

[root@node1 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e7e2cd965a02 quay.io/ceph/ceph-grafana:6.7.4 "/bin/sh -c 'grafana…" 2 minutes ago Up 2 minutes ceph-ceeaecf0-ea1c-11ec-a587-00163e0157e5-grafana.node1

555024e2ba60 quay.io/prometheus/alertmanager:v0.20.0 "/bin/alertmanager -…" 2 minutes ago Up 2 minutes ceph-ceeaecf0-ea1c-11ec-a587-00163e0157e5-alertmanager.node1

060877a859ed quay.io/prometheus/prometheus:v2.18.1 "/bin/prometheus --c…" 2 minutes ago Up 2 minutes ceph-ceeaecf0-ea1c-11ec-a587-00163e0157e5-prometheus.node1

577948d3e018 quay.io/prometheus/node-exporter:v0.18.1 "/bin/node_exporter …" 3 minutes ago Up 3 minutes ceph-ceeaecf0-ea1c-11ec-a587-00163e0157e5-node-exporter.node1

11243d558481 quay.io/ceph/ceph:v15 "/usr/bin/ceph-crash…" 3 minutes ago Up 3 minutes ceph-ceeaecf0-ea1c-11ec-a587-00163e0157e5-crash.node1

a4bf1d984cbb quay.io/ceph/ceph:v15 "/usr/bin/ceph-mgr -…" 4 minutes ago Up 4 minutes ceph-ceeaecf0-ea1c-11ec-a587-00163e0157e5-mgr.node1.nyhaks

b0aa920a88ce quay.io/ceph/ceph:v15 "/usr/bin/ceph-mon -…" 4 minutes ago Up 4 minutes ceph-ceeaecf0-ea1c-11ec-a587-00163e0157e5-mon.node1

Install the ceph CLI

# Back up the Ceph repo file, then point it at the Aliyun mirror

[root@node1 ~]# cp /etc/yum.repos.d/ceph.repo{,.bak}

[root@node1 ~]# sed 's@download.ceph.com@mirrors.aliyun.com/ceph@g' /etc/yum.repos.d/ceph.repo -i

[root@node1 ~]# cephadm install ceph-common

Installing packages ['ceph-common']...

[root@node1 ~]# ceph -v

ceph version 15.2.16 (d46a73d6d0a67a79558054a3a5a72cb561724974) octopus (stable)

Check the cluster status

[root@node1 ~]# ceph -s

cluster:

id: ceeaecf0-ea1c-11ec-a587-00163e0157e5

health: HEALTH_WARN

OSD count 0 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum node1 (age 19m)

mgr: node1.nyhaks(active, since 19m)

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

# Show the status of all daemons

[root@node1 ~]# ceph orch ps

NAME HOST STATUS REFRESHED AGE VERSION IMAGE NAME IMAGE ID CONTAINER ID

alertmanager.node1 node1 running (81s) 74s ago 88s 0.20.0 quay.io/prometheus/alertmanager:v0.20.0 0881eb8f169f d9eb0a059bb6

crash.node1 node1 running (87s) 74s ago 87s 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 7e86816b21e4

grafana.node1 node1 running (80s) 74s ago 86s 6.7.4 quay.io/ceph/ceph-grafana:6.7.4 557c83e11646 636a9055f284

mgr.node1.flvtob node1 running (2m) 74s ago 2m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e d07b5e138d3a

mon.node1 node1 running (2m) 74s ago 2m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 0ca6d9fce053

node-exporter.node1 node1 running (85s) 74s ago 85s 0.18.1 quay.io/prometheus/node-exporter:v0.18.1 e5a616e4b9cf 5265145ccfac

prometheus.node1 node1 running (84s) 74s ago 84s 2.18.1 quay.io/prometheus/prometheus:v2.18.1 de242295e225 16d1813d0ad7

Add hosts to the cluster

# Install the cluster's public SSH key on the other nodes for passwordless access

[root@node1 ~]# ssh-copy-id -f -i /etc/ceph/ceph.pub root@node2

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/etc/ceph/ceph.pub"

The authenticity of host 'node2 (172.16.8.23)' can't be established.

ECDSA key fingerprint is SHA256:791Nd4fSWk3El/nBGNx1dsL+wqQRxiaftnhtR2973qg.

ECDSA key fingerprint is MD5:3d:b5:de:6a:b2:97:74:c8:65:5c:2d:68:45:4c:34:7c.

Are you sure you want to continue connecting (yes/no)? yes

root@node2's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@node2'"

and check to make sure that only the key(s) you wanted were added.

[root@node1 ~]# ssh-copy-id -f -i /etc/ceph/ceph.pub root@node3

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/etc/ceph/ceph.pub"

The authenticity of host 'node3 (172.16.8.24)' can't be established.

ECDSA key fingerprint is SHA256:/a9HiWfuXlk4AevoxSkH/EGHhzLnWxoSxnqf26X44/s.

ECDSA key fingerprint is MD5:71:b3:32:0c:a8:1d:ff:7c:d0:54:e3:d3:71:5f:b7:1d.

Are you sure you want to continue connecting (yes/no)? yes

root@node3's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@node3'"

and check to make sure that only the key(s) you wanted were added.

# Add the hosts

[root@node1 ~]# ceph orch host add node2

Added host 'node2'

[root@node1 ~]# ceph orch host add node3

Added host 'node3'

# List the cluster hosts

[root@node1 ~]# ceph orch host ls

HOST ADDR LABELS STATUS

node1 node1

node2 node2

node3 node3

# Wait a few minutes while cephadm automatically deploys the required containers on the new nodes

[root@node2 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

b4b92f911938 quay.io/ceph/ceph:v15 "/usr/bin/ceph-mon -…" About a minute ago Up About a minute ceph-ceeaecf0-ea1c-11ec-a587-00163e0157e5-mon.node2

db51d9e6857b quay.io/prometheus/node-exporter:v0.18.1 "/bin/node_exporter …" About a minute ago Up About a minute ceph-ceeaecf0-ea1c-11ec-a587-00163e0157e5-node-exporter.node2

8db4a43a95a9 quay.io/ceph/ceph:v15 "/usr/bin/ceph-mgr -…" 2 minutes ago Up About a minute ceph-ceeaecf0-ea1c-11ec-a587-00163e0157e5-mgr.node2.irgivl

ea1e4124d646 quay.io/ceph/ceph:v15 "/usr/bin/ceph-crash…" 2 minutes ago Up 2 minutes ceph-ceeaecf0-ea1c-11ec-a587-00163e0157e5-crash.node2

[root@node3 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

bb32ba96b483 quay.io/prometheus/node-exporter:v0.18.1 "/bin/node_exporter …" About a minute ago Up About a minute ceph-ceeaecf0-ea1c-11ec-a587-00163e0157e5-node-exporter.node3

4cf9181cf65d quay.io/ceph/ceph:v15 "/usr/bin/ceph-mon -…" About a minute ago Up About a minute ceph-ceeaecf0-ea1c-11ec-a587-00163e0157e5-mon.node3

8f920c59976e quay.io/ceph/ceph:v15 "/usr/bin/ceph-crash…" About a minute ago Up About a minute ceph-ceeaecf0-ea1c-11ec-a587-00163e0157e5-crash.node3

# Check the cluster status again

[root@node1 ~]# ceph -s

cluster:

id: ceeaecf0-ea1c-11ec-a587-00163e0157e5

health: HEALTH_WARN

OSD count 0 < osd_pool_default_size 3

services:

mon: 3 daemons, quorum node1,node3,node2 (age 105s)

mgr: node1.nyhaks(active, since 26m), standbys: node2.irgivl

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:
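Copying the key and adding the host are repeated for every new node. To avoid typos, you can generate both commands from a host list and review them before running. `host_add_commands` is a hypothetical helper that only prints the commands used above.

```shell
#!/usr/bin/env bash
# host_add_commands HOST...
# Hypothetical helper: for each HOST, prints the two commands used above
# (copy the cluster public key, then register the host with cephadm).
# Nothing is executed.
host_add_commands() {
    local h
    for h in "$@"; do
        printf 'ssh-copy-id -f -i /etc/ceph/ceph.pub root@%s\n' "$h"
        printf 'ceph orch host add %s\n' "$h"
    done
}

# Example:
#   host_add_commands node2 node3 > add-hosts.sh
#   # review add-hosts.sh, then:
#   bash add-hosts.sh
```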

Deploy OSDs

# List the available storage devices

[root@node1 ~]# ceph orch device ls

Hostname Path Type Serial Size Health Ident Fault Available

node1 /dev/vdb hdd 7xv5uqbxfsv9plz6xmq6 128G Unknown N/A N/A Yes

node2 /dev/vdb hdd 7xv2dhp9aq6ke5m884kd 128G Unknown N/A N/A Yes

node3 /dev/vdb hdd 7xv236hjphj3zi12h01e 128G Unknown N/A N/A Yes

node3 /dev/vdc hdd 7xv236hjphj3zi12h01f 128G Unknown N/A N/A Yes

node3 /dev/vdd hdd 7xv236hjphj3zi12h01g 128G Unknown N/A N/A Yes

# Create OSDs on specific devices

[root@node1 ~]# ceph orch daemon add osd node1:/dev/vdb

Created osd(s) 0 on host 'node1'

[root@node1 ~]# ceph orch daemon add osd node2:/dev/vdb

Created osd(s) 1 on host 'node2'

[root@node1 ~]# ceph orch daemon add osd node3:/dev/vdb

Created osd(s) 2 on host 'node3'

# List the devices again; those now used by OSDs show Available = No

[root@node1 ~]# ceph orch device ls

Hostname Path Type Serial Size Health Ident Fault Available

node1 /dev/vdb hdd 7xv5uqbxfsv9plz6xmq6 128G Unknown N/A N/A No

node2 /dev/vdb hdd 7xv2dhp9aq6ke5m884kd 128G Unknown N/A N/A No

node3 /dev/vdc hdd 7xv236hjphj3zi12h01f 128G Unknown N/A N/A Yes

node3 /dev/vdd hdd 7xv236hjphj3zi12h01g 128G Unknown N/A N/A Yes

node3 /dev/vdb hdd 7xv236hjphj3zi12h01e 128G Unknown N/A N/A No

# Check the cluster status again

[root@node1 ~]# ceph -s

cluster:

id: fd1e4b14-ea2e-11ec-a6a0-00163e0157e5

health: HEALTH_OK

services:

mon: 3 daemons, quorum node1,node3,node2 (age 2m)

mgr: node1.flvtob(active, since 8m), standbys: node2.rrlrgg

osd: 3 osds: 3 up (since 21s), 3 in (since 21s)

data:

pools: 1 pools, 1 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 357 GiB / 360 GiB avail

pgs: 1 active+clean

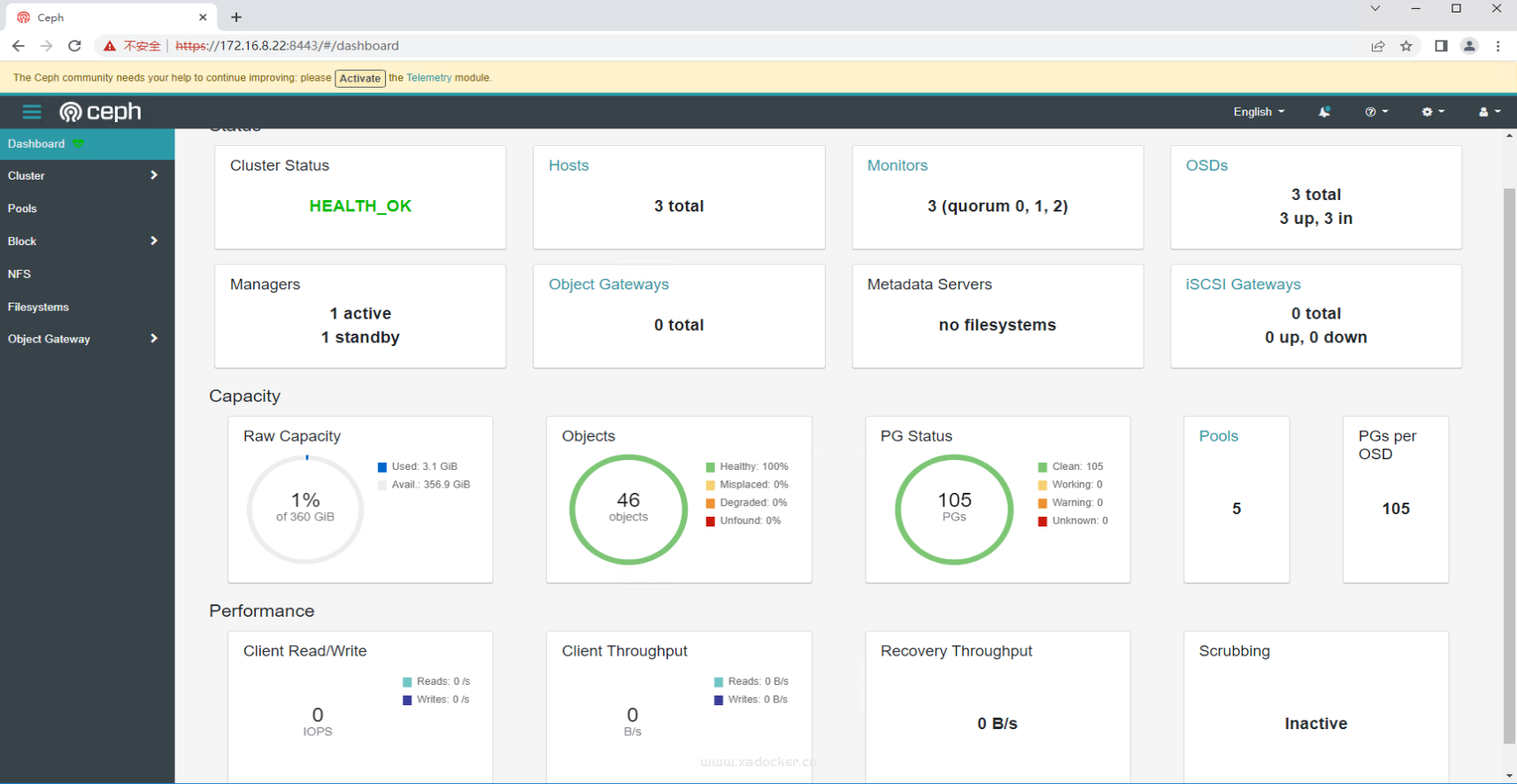

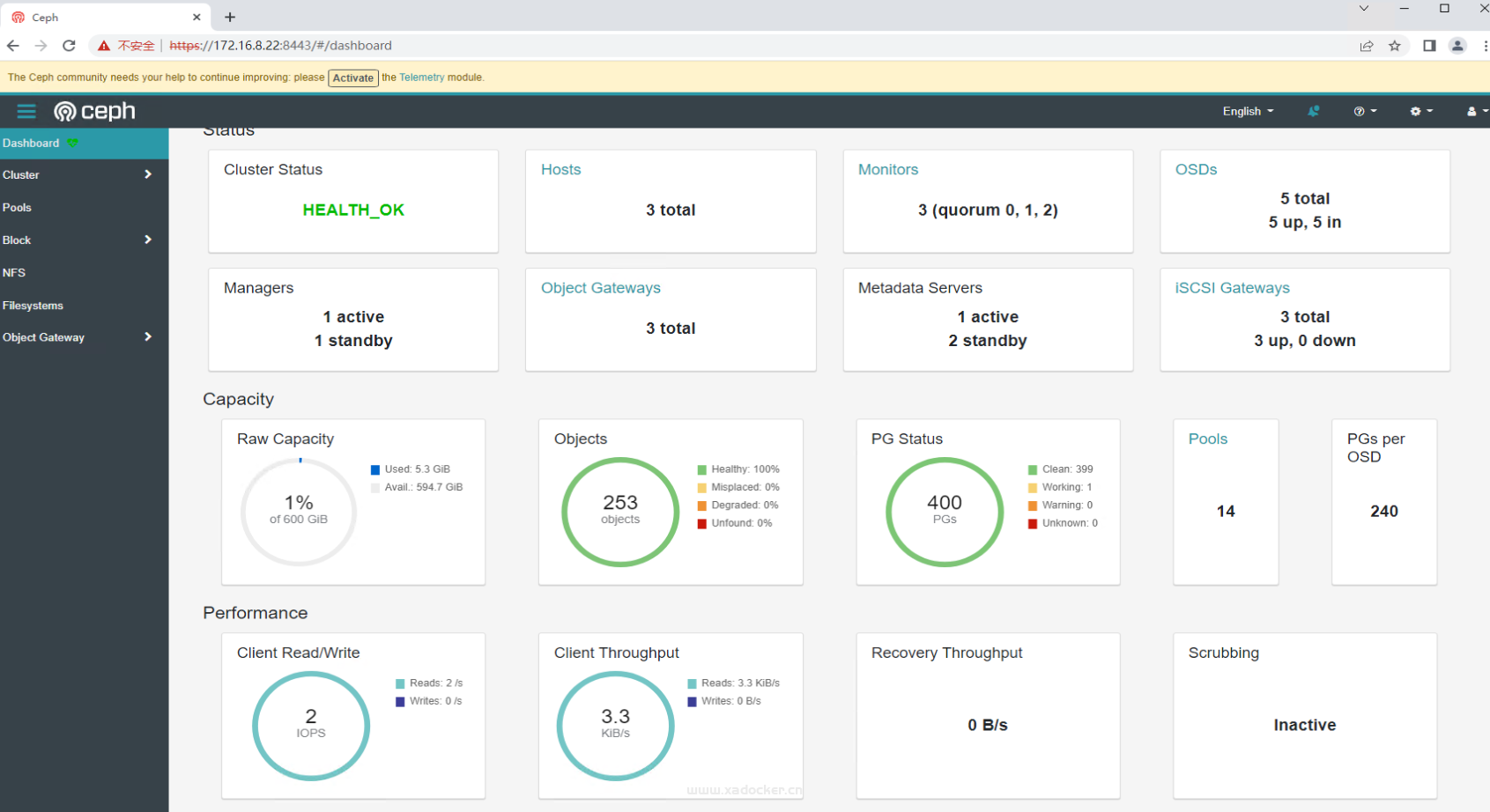
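Instead of typing each `host:device` pair by hand, you can derive the OSD commands from the `ceph orch device ls` table. The sketch below uses the hypothetical name `osd_add_commands`. It assumes the Octopus column layout shown above (hostname first, path second, Available last) and only prints commands for devices marked `Yes`.

```shell
#!/usr/bin/env bash
# osd_add_commands
# Hypothetical helper: reads `ceph orch device ls` output on stdin and
# prints one `ceph orch daemon add osd HOST:PATH` command per device whose
# last column (Available) is "Yes". The header row and blank lines are skipped.
osd_add_commands() {
    awk '$1 != "Hostname" && NF >= 3 && $NF == "Yes" {
        printf "ceph orch daemon add osd %s:%s\n", $1, $2
    }'
}

# Example:
#   ceph orch device ls | osd_add_commands > add-osds.sh
#   # review add-osds.sh, then:
#   bash add-osds.sh
```

cephadm can also consume every eligible device by itself with `ceph orch apply osd --all-available-devices`. Generating explicit per-device commands keeps the choice of disks under manual control.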

Access the dashboard

# If you forget the dashboard password, reset it as follows

[root@node1 ~]# echo 1qaz@WSX > passwd

[root@node1 ~]# ceph dashboard ac-user-set-password admin -i passwd

{"username": "admin", "password": "$2b$12$mczHG859YvLjsQ9/hPPN9.RmqjmpBJAfLRSP.qPdWACBU.GslUf6q", "roles": ["administrator"], "name": null, "email": null, "lastUpdate": 1655026784, "enabled": true, "pwdExpirationDate": null, "pwdUpdateRequired": false}
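The `echo ... > passwd` approach leaves the plain-text password in a file in the current directory. A safer variant writes the password to a private temporary file, passes that file to the command, and deletes it afterwards. A minimal sketch follows. `with_secret_file` is a hypothetical helper. It appends the file path as the last argument, which matches how `-i` is used above.

```shell
#!/usr/bin/env bash
# with_secret_file SECRET CMD...
# Hypothetical helper: writes SECRET (without a trailing newline) to a
# temporary file readable only by the owner, runs CMD with that file's
# path appended as the last argument, then removes the file and returns
# CMD's exit status.
with_secret_file() {
    local secret="$1" tmp rc
    shift
    tmp=$(umask 077 && mktemp) || return 1
    printf '%s' "$secret" > "$tmp"
    "$@" "$tmp"
    rc=$?
    rm -f "$tmp"
    return "$rc"
}

# Example, equivalent to the echo/passwd steps above:
#   with_secret_file '1qaz@WSX' ceph dashboard ac-user-set-password admin -i
```

To also keep the password out of shell history, read it interactively first with `read -rs PW`. Then run `with_secret_file "$PW" ceph dashboard ac-user-set-password admin -i`.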

Deploy MDS

Create the CephFS pools and file system, then the MDS service

[root@node1 ~]# ceph osd pool create cephfs_data 64 64

pool 'cephfs_data' created

[root@node1 ~]# ceph osd pool create cephfs_metadata 64 64

pool 'cephfs_metadata' created

[root@node1 ~]# ceph fs new cephfs cephfs_metadata cephfs_data

new fs with metadata pool 7 and data pool 6

[root@node1 ~]# ceph fs ls

name: cephfs, metadata pool: cephfs_metadata, data pools: [cephfs_data ]

[root@node1 ~]# ceph orch apply mds cephfs --placement="3 node1 node2 node3"

Scheduled mds.cephfs update...

# One MDS container is now running on each node

[root@node1 ~]# docker ps -a | grep mds

b4d05fb6cad0 quay.io/ceph/ceph:v15 "/usr/bin/ceph-mds -…" 48 seconds ago Up 47 seconds ceph-fd1e4b14-ea2e-11ec-a6a0-00163e0157e5-mds.cephfs.node1.drbqgc

[root@node2 ~]# docker ps -a | grep mds

3f3c9422bbd5 quay.io/ceph/ceph:v15 "/usr/bin/ceph-mds -…" About a minute ago Up About a minute ceph-fd1e4b14-ea2e-11ec-a6a0-00163e0157e5-mds.cephfs.node2.yyqcqw

[root@node3 ~]# docker ps -a | grep mds

71fd5910f01e quay.io/ceph/ceph:v15 "/usr/bin/ceph-mds -…" About a minute ago Up About a minute ceph-fd1e4b14-ea2e-11ec-a6a0-00163e0157e5-mds.cephfs.node3.klxkgm


Check the cluster status

[root@node1 ~]# ceph -s

cluster:

id: fd1e4b14-ea2e-11ec-a6a0-00163e0157e5

health: HEALTH_OK

services:

mon: 3 daemons, quorum node1,node3,node2 (age 36m)

mgr: node1.flvtob(active, since 42m), standbys: node2.rrlrgg

mds: cephfs:1 {0=cephfs.node3.klxkgm=up:active} 2 up:standby

osd: 3 osds: 3 up (since 34m), 3 in (since 34m)

data:

pools: 7 pools, 212 pgs

objects: 68 objects, 7.3 KiB

usage: 3.1 GiB used, 357 GiB / 360 GiB avail

pgs: 212 active+clean

progress:

PG autoscaler decreasing pool 7 PGs from 64 to 16 (2m)

[========....................] (remaining: 4m)
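The same MDS placement can also be written as a cephadm service spec and applied with `ceph orch apply -i`, the same mechanism used later for `iscsi.yaml`. The sketch below prints such a spec. `mds_spec` is a hypothetical helper. It sets `count` to the number of listed hosts, which mirrors `--placement="3 node1 node2 node3"`.

```shell
#!/usr/bin/env bash
# mds_spec FS_NAME HOST...
# Hypothetical helper: prints an MDS service spec for FS_NAME placed on
# the given hosts, with count equal to the number of hosts.
mds_spec() {
    local fs="$1" h
    shift
    printf 'service_type: mds\n'
    printf 'service_id: %s\n' "$fs"
    printf 'placement:\n'
    printf '  count: %d\n' "$#"
    printf '  hosts:\n'
    for h in "$@"; do
        printf '    - %s\n' "$h"
    done
}

# Example:
#   mds_spec cephfs node1 node2 node3 > mds.yaml
#   ceph orch apply -i mds.yaml
```

Keeping the spec in a file makes the placement easy to review, version, and re-apply.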

Deploy RGW

[root@node1 ~]# radosgw-admin realm create --rgw-realm=myorg --default

{

"id": "fdef13e0-5f19-4160-ac0d-e37563c53d90",

"name": "myorg",

"current_period": "bcb9c751-4b92-4350-a03d-f01d8ba79582",

"epoch": 1

}

[root@node1 ~]# radosgw-admin zonegroup create --rgw-zonegroup=default --master --default

failed to create zonegroup default: (17) File exists

[root@node1 ~]# radosgw-admin zone create --rgw-zonegroup=default --rgw-zone=cn-east-1 --master --default

2022-06-12T17:52:00.025+0800 7f5136aec040 0 NOTICE: overriding master zone: 317c300c-97d7-483b-9043-033738232dc7

{

"id": "914055bb-4ce1-4375-8b01-c0e35c14ea2a",

"name": "cn-east-1",

"domain_root": "cn-east-1.rgw.meta:root",

"control_pool": "cn-east-1.rgw.control",

"gc_pool": "cn-east-1.rgw.log:gc",

"lc_pool": "cn-east-1.rgw.log:lc",

"log_pool": "cn-east-1.rgw.log",

"intent_log_pool": "cn-east-1.rgw.log:intent",

"usage_log_pool": "cn-east-1.rgw.log:usage",

"roles_pool": "cn-east-1.rgw.meta:roles",

"reshard_pool": "cn-east-1.rgw.log:reshard",

"user_keys_pool": "cn-east-1.rgw.meta:users.keys",

"user_email_pool": "cn-east-1.rgw.meta:users.email",

"user_swift_pool": "cn-east-1.rgw.meta:users.swift",

"user_uid_pool": "cn-east-1.rgw.meta:users.uid",

"otp_pool": "cn-east-1.rgw.otp",

"system_key": {

"access_key": "",

"secret_key": ""

},

"placement_pools": [

{

"key": "default-placement",

"val": {

"index_pool": "cn-east-1.rgw.buckets.index",

"storage_classes": {

"STANDARD": {

"data_pool": "cn-east-1.rgw.buckets.data"

}

},

"data_extra_pool": "cn-east-1.rgw.buckets.non-ec",

"index_type": 0

}

}

],

"realm_id": "fdef13e0-5f19-4160-ac0d-e37563c53d90"

}

[root@node1 ~]# ceph orch apply rgw myorg cn-east-1 --placement="3 node1 node2 node3"

Scheduled rgw.myorg.cn-east-1 update...
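The RGW multisite documentation commits configuration changes with `radosgw-admin period update --commit` after creating or modifying a zone. That step does not appear in the run above, so check it if the RGW daemons fail to start. The sketch below wraps the whole sequence, including the commit. `rgw_setup` and `run` are hypothetical helpers, and `DRY_RUN=1` makes the script print the commands instead of executing them.

```shell
#!/usr/bin/env bash
# run CMD...: print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# rgw_setup REALM ZONEGROUP ZONE COUNT HOST...
# Hypothetical wrapper: the realm/zonegroup/zone steps above, a period
# commit, then the same `ceph orch apply rgw` call.
rgw_setup() {
    local realm="$1" zg="$2" zone="$3" count="$4"
    shift 4
    run radosgw-admin realm create --rgw-realm="$realm" --default || return 1
    # No "|| return": an existing zonegroup ("(17) File exists" above) is tolerated
    run radosgw-admin zonegroup create --rgw-zonegroup="$zg" --master --default
    run radosgw-admin zone create --rgw-zonegroup="$zg" --rgw-zone="$zone" \
        --master --default || return 1
    # Commit a new period so the realm/zonegroup/zone changes take effect
    run radosgw-admin period update --rgw-realm="$realm" --commit || return 1
    run ceph orch apply rgw "$realm" "$zone" --placement="$count $*"
}
```

For example, `DRY_RUN=1 rgw_setup myorg default cn-east-1 3 node1 node2 node3` prints the five commands for review. In dry-run mode the placement argument is printed without its quotes.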

Check the service status

[root@node1 ~]# ceph orch ls | grep rgw

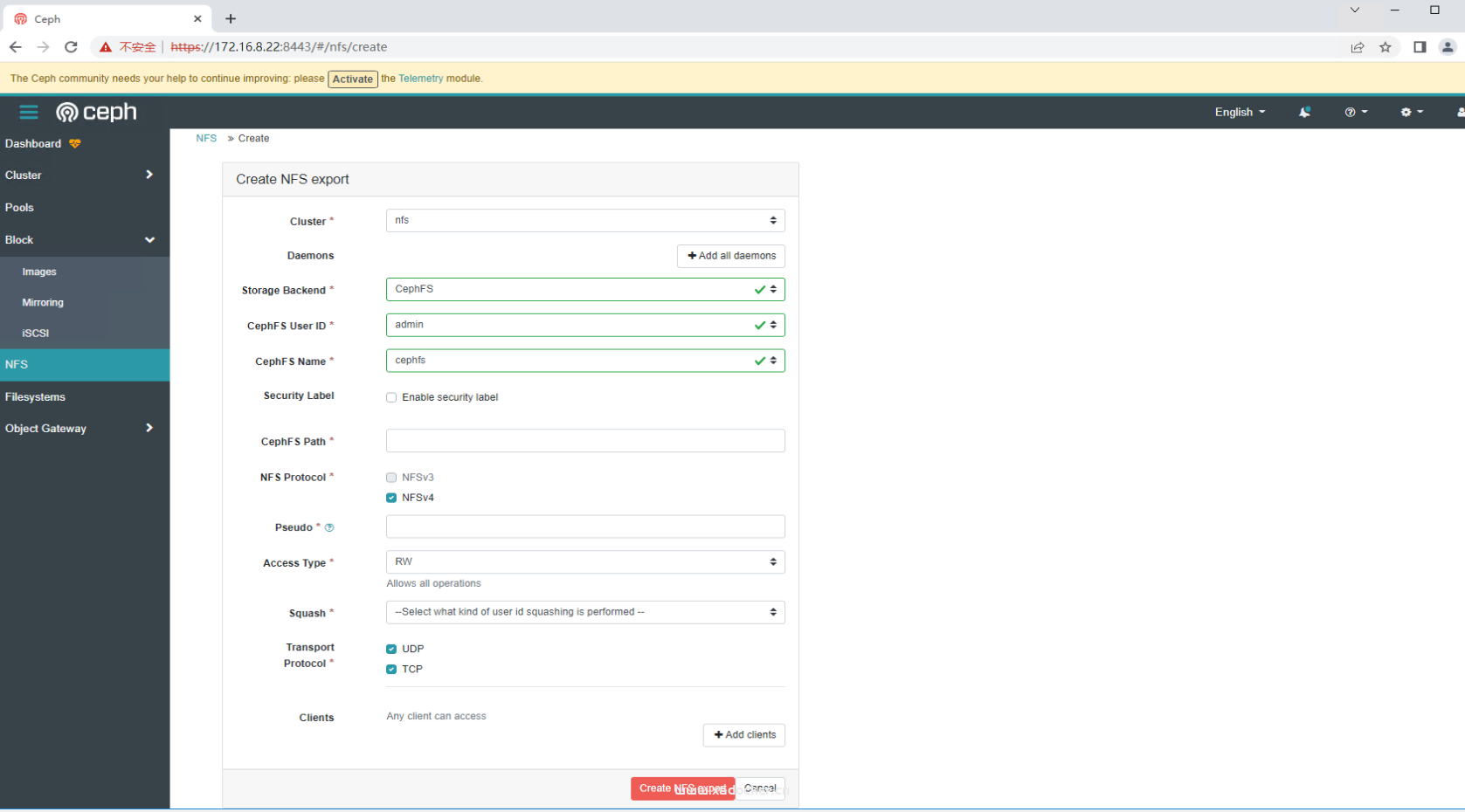

rgw.myorg.cn-east-1 3/3 66s ago 70s node1;node2;node3;count:3 quay.io/ceph/ceph:v15

Deploy NFS

# Create the pool that NFS needs

[root@node1 ~]# ceph osd pool create ganesha_data 32

pool 'ganesha_data' created

[root@node1 ~]# ceph osd pool application enable ganesha_data nfs

enabled application 'nfs' on pool 'ganesha_data'

# Deploy the NFS service

[root@node1 ~]# ceph orch apply nfs nfs ganesha_data --placement=3

Scheduled nfs.nfs update...

# Check the NFS status

[root@node1 ~]# ceph orch ls

NAME RUNNING REFRESHED AGE PLACEMENT IMAGE NAME IMAGE ID

alertmanager 1/1 10m ago 64m count:1 quay.io/prometheus/alertmanager:v0.20.0 0881eb8f169f

crash 3/3 10m ago 65m * quay.io/ceph/ceph:v15 296d7fe77d4e

grafana 1/1 10m ago 64m count:1 quay.io/ceph/ceph-grafana:6.7.4 557c83e11646

mds.cephfs 3/3 10m ago 53s count:3 quay.io/ceph/ceph:v15 296d7fe77d4e

mgr 2/2 10m ago 65m count:2 quay.io/ceph/ceph:v15 296d7fe77d4e

mon 3/5 10m ago 65m count:5 quay.io/ceph/ceph:v15 296d7fe77d4e

nfs.nfs 3/3 - 3s count:3 <unknown> <unknown>

node-exporter 3/3 10m ago 64m * quay.io/prometheus/node-exporter:v0.18.1 e5a616e4b9cf

osd.None 5/0 10m ago - <unmanaged> quay.io/ceph/ceph:v15 296d7fe77d4e

prometheus 1/1 10m ago 65m count:1 quay.io/prometheus/prometheus:v2.18.1 de242295e225

rgw.myorg.cn-east-1 0/3 10m ago 20m node1;node2;node3;count:3 quay.io/ceph/ceph:v15 <unknown>

# Check the daemon status

[root@node1 ~]# ceph orch ps

NAME HOST STATUS REFRESHED AGE VERSION IMAGE NAME IMAGE ID CONTAINER ID

alertmanager.node1 node1 running (61m) 2m ago 67m 0.20.0 quay.io/prometheus/alertmanager:v0.20.0 0881eb8f169f 1ac2ed70a93d

crash.node1 node1 running (67m) 2m ago 67m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 7e86816b21e4

crash.node2 node2 running (61m) 2m ago 61m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e e7259e86560e

crash.node3 node3 running (61m) 2m ago 61m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 7331bdc2ebe0

grafana.node1 node1 running (67m) 2m ago 67m 6.7.4 quay.io/ceph/ceph-grafana:6.7.4 557c83e11646 636a9055f284

mds.cephfs.node1.drbqgc node1 running (27m) 2m ago 27m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e b4d05fb6cad0

mds.cephfs.node2.yyqcqw node2 running (27m) 2m ago 27m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 3f3c9422bbd5

mds.cephfs.node3.klxkgm node3 running (27m) 2m ago 27m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 71fd5910f01e

mgr.node1.flvtob node1 running (67m) 2m ago 67m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e d07b5e138d3a

mgr.node2.rrlrgg node2 running (61m) 2m ago 61m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e aa48b78b2a51

mon.node1 node1 running (67m) 2m ago 68m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 0ca6d9fce053

mon.node2 node2 running (61m) 2m ago 61m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 938b49e61b55

mon.node3 node3 running (61m) 2m ago 61m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e f22b488700e3

nfs.nfs.node1 node1 running 2m ago 2m <unknown> quay.io/ceph/ceph:v15 <unknown> <unknown>

nfs.nfs.node2 node2 running (2m) 2m ago 2m 3.3 quay.io/ceph/ceph:v15 296d7fe77d4e 5de322652afb

nfs.nfs.node3 node3 running 2m ago 2m <unknown> quay.io/ceph/ceph:v15 <unknown> <unknown>

node-exporter.node1 node1 running (67m) 2m ago 67m 0.18.1 quay.io/prometheus/node-exporter:v0.18.1 e5a616e4b9cf 5265145ccfac

node-exporter.node2 node2 running (61m) 2m ago 61m 0.18.1 quay.io/prometheus/node-exporter:v0.18.1 e5a616e4b9cf 419ffe27a151

node-exporter.node3 node3 running (61m) 2m ago 61m 0.18.1 quay.io/prometheus/node-exporter:v0.18.1 e5a616e4b9cf 55b4b57b2f63

osd.0 node1 running (59m) 2m ago 59m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 03c2c8a94553

osd.1 node2 running (59m) 2m ago 59m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 670b8d4e33a2

osd.2 node3 running (59m) 2m ago 59m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 8a67ad30f08c

osd.3 node3 running (12m) 2m ago 12m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e ba026070d1a2

osd.4 node3 running (12m) 2m ago 12m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 67ba0baeb5ea

prometheus.node1 node1 running (61m) 2m ago 67m 2.18.1 quay.io/prometheus/prometheus:v2.18.1 de242295e225 0687fc4d2a8a

rgw.myorg.cn-east-1.node1.ulvfvr node1 error 2m ago 22m <unknown> quay.io/ceph/ceph:v15 <unknown> <unknown>

rgw.myorg.cn-east-1.node2.qlwlov node2 error 2m ago 22m <unknown> quay.io/ceph/ceph:v15 <unknown> <unknown>

rgw.myorg.cn-east-1.node3.tsbpyu node3 error 2m ago 22m <unknown> quay.io/ceph/ceph:v15 <unknown> <unknown>

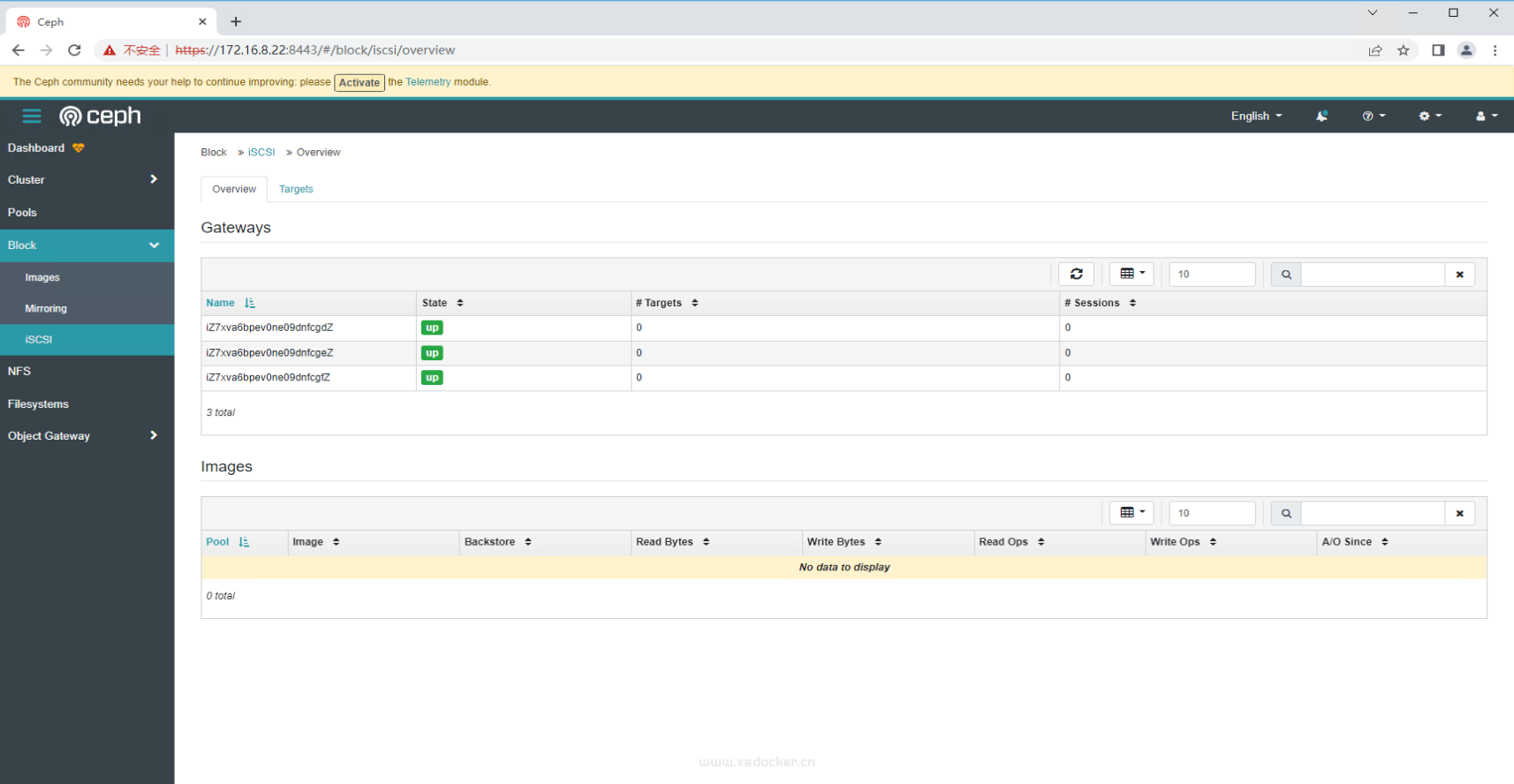
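You can filter daemons stuck in `error`, such as the three RGW daemons above, out of the `ceph orch ps` table. `failed_daemons` is a hypothetical helper. It assumes STATUS is the third column, as in the Octopus output shown above.

```shell
#!/usr/bin/env bash
# failed_daemons
# Hypothetical helper: reads `ceph orch ps` output on stdin and prints
# "NAME HOST" for every daemon whose STATUS column (3rd field) is "error".
failed_daemons() {
    awk '$1 != "NAME" && $3 == "error" { print $1, $2 }'
}

# Example:
#   ceph orch ps | failed_daemons
#   # then, on the reported host, inspect that daemon's logs:
#   cephadm logs --name <daemon-name>
```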

Deploy iSCSI

[root@node1 ~]# ceph osd pool create iscsi_pool 32 32

pool 'iscsi_pool' created

[root@node1 ~]# ceph osd pool application enable iscsi_pool iscsi

enabled application 'iscsi' on pool 'iscsi_pool'

[root@node1 ~]# vim iscsi.yaml

[root@node1 ~]# cat iscsi.yaml

service_type: iscsi
service_id: gw
placement:
  hosts:
    - node1
    - node2
    - node3
spec:
  pool: iscsi_pool
  trusted_ip_list: "172.16.8.22,172.16.8.23,172.16.8.24"
  api_user: admin
  api_password: admin
  api_secure: false

[root@node1 ~]# ceph orch apply -i iscsi.yaml

Scheduled iscsi.gw update...

[root@node1 ~]# ceph orch ls

NAME RUNNING REFRESHED AGE PLACEMENT IMAGE NAME IMAGE ID

alertmanager 1/1 6m ago 71m count:1 quay.io/prometheus/alertmanager:v0.20.0 0881eb8f169f

crash 3/3 6m ago 71m * quay.io/ceph/ceph:v15 296d7fe77d4e

grafana 1/1 6m ago 71m count:1 quay.io/ceph/ceph-grafana:6.7.4 557c83e11646

iscsi.gw 3/3 0s ago 4s node1;node2;node3 mix mix

mds.cephfs 3/3 6m ago 7m count:3 quay.io/ceph/ceph:v15 296d7fe77d4e

mgr 2/2 6m ago 71m count:2 quay.io/ceph/ceph:v15 296d7fe77d4e

mon 3/5 6m ago 71m count:5 quay.io/ceph/ceph:v15 296d7fe77d4e

nfs.nfs 3/3 6m ago 6m count:3 quay.io/ceph/ceph:v15 mix

node-exporter 3/3 6m ago 71m * quay.io/prometheus/node-exporter:v0.18.1 e5a616e4b9cf

osd.None 5/0 6m ago - <unmanaged> quay.io/ceph/ceph:v15 296d7fe77d4e

prometheus 1/1 6m ago 71m count:1 quay.io/prometheus/prometheus:v2.18.1 de242295e225

rgw.myorg.cn-east-1 0/3 6m ago 26m node1;node2;node3;count:3 quay.io/ceph/ceph:v15 <unknown>

[root@node1 ~]# ceph orch ps

NAME HOST STATUS REFRESHED AGE VERSION IMAGE NAME IMAGE ID CONTAINER ID

alertmanager.node1 node1 running (65m) 9s ago 71m 0.20.0 quay.io/prometheus/alertmanager:v0.20.0 0881eb8f169f 1ac2ed70a93d

crash.node1 node1 running (71m) 9s ago 71m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 7e86816b21e4

crash.node2 node2 running (65m) 10s ago 65m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e e7259e86560e

crash.node3 node3 running (65m) 10s ago 65m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 7331bdc2ebe0

grafana.node1 node1 running (70m) 9s ago 71m 6.7.4 quay.io/ceph/ceph-grafana:6.7.4 557c83e11646 636a9055f284

iscsi.gw.node1.dzihur node1 running (12s) 9s ago 12s 3.5 quay.io/ceph/ceph:v15 296d7fe77d4e ebd429cdcacf

iscsi.gw.node2.hbmjvc node2 running (14s) 10s ago 14s 3.5 quay.io/ceph/ceph:v15 296d7fe77d4e 561e91ce82b4

iscsi.gw.node3.ivkbna node3 running (13s) 10s ago 13s 3.5 quay.io/ceph/ceph:v15 296d7fe77d4e 22700c0b7f6e

mds.cephfs.node1.drbqgc node1 running (31m) 9s ago 31m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e b4d05fb6cad0

mds.cephfs.node2.yyqcqw node2 running (30m) 10s ago 30m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 3f3c9422bbd5

mds.cephfs.node3.klxkgm node3 running (31m) 10s ago 31m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 71fd5910f01e

mgr.node1.flvtob node1 running (71m) 9s ago 71m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e d07b5e138d3a

mgr.node2.rrlrgg node2 running (65m) 10s ago 65m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e aa48b78b2a51

mon.node1 node1 running (71m) 9s ago 71m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 0ca6d9fce053

mon.node2 node2 running (65m) 10s ago 65m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 938b49e61b55

mon.node3 node3 running (65m) 10s ago 65m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e f22b488700e3

nfs.nfs.node1 node1 running (6m) 9s ago 6m 3.3 quay.io/ceph/ceph:v15 296d7fe77d4e b55ee3969f19

nfs.nfs.node2 node2 running (6m) 10s ago 6m 3.3 quay.io/ceph/ceph:v15 296d7fe77d4e 5de322652afb

nfs.nfs.node3 node3 running (6m) 10s ago 6m 3.3 quay.io/ceph/ceph:v15 296d7fe77d4e d45725b3b4fd

node-exporter.node1 node1 running (71m) 9s ago 71m 0.18.1 quay.io/prometheus/node-exporter:v0.18.1 e5a616e4b9cf 5265145ccfac

node-exporter.node2 node2 running (65m) 10s ago 65m 0.18.1 quay.io/prometheus/node-exporter:v0.18.1 e5a616e4b9cf 419ffe27a151

node-exporter.node3 node3 running (65m) 10s ago 65m 0.18.1 quay.io/prometheus/node-exporter:v0.18.1 e5a616e4b9cf 55b4b57b2f63

osd.0 node1 running (63m) 9s ago 63m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 03c2c8a94553

osd.1 node2 running (63m) 10s ago 63m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 670b8d4e33a2

osd.2 node3 running (63m) 10s ago 63m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 8a67ad30f08c

osd.3 node3 running (16m) 10s ago 16m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e ba026070d1a2

osd.4 node3 running (16m) 10s ago 16m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 67ba0baeb5ea

prometheus.node1 node1 running (65m) 9s ago 71m 2.18.1 quay.io/prometheus/prometheus:v2.18.1 de242295e225 0687fc4d2a8a

rgw.myorg.cn-east-1.node1.ulvfvr node1 error 9s ago 26m <unknown> quay.io/ceph/ceph:v15 <unknown> <unknown>

rgw.myorg.cn-east-1.node2.qlwlov node2 error 10s ago 26m <unknown> quay.io/ceph/ceph:v15 <unknown> <unknown>

rgw.myorg.cn-east-1.node3.tsbpyu node3 error 10s ago 26m <unknown> quay.io/ceph/ceph:v15 <unknown> <unknown>

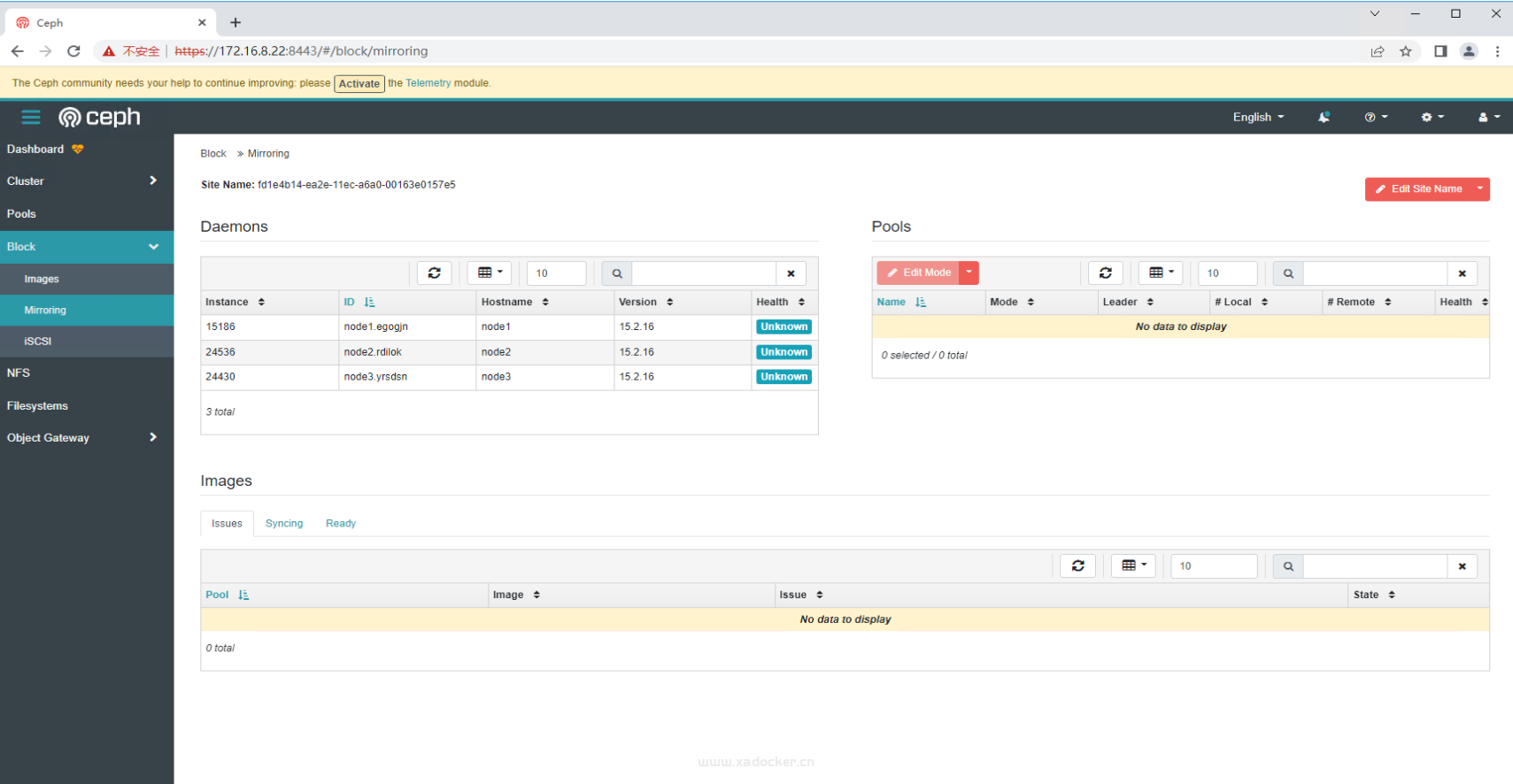
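The hand-written `iscsi.yaml` repeats the gateway hosts in two places: `placement.hosts` and `trusted_ip_list`. The sketch below generates both from one `HOST:IP` list and keeps the layout of the file above. `iscsi_spec` is a hypothetical helper. The fixed `service_id: gw` and `api_secure: false` are copied from the original spec, not recommended values.

```shell
#!/usr/bin/env bash
# iscsi_spec POOL API_USER API_PASSWORD HOST:IP...
# Hypothetical helper: prints an iscsi service spec laid out like the
# iscsi.yaml above, building trusted_ip_list from the same HOST:IP pairs
# used for placement so the two lists cannot drift apart.
iscsi_spec() {
    local pool="$1" user="$2" pass="$3" pair ips=""
    shift 3
    printf 'service_type: iscsi\nservice_id: gw\nplacement:\n  hosts:\n'
    for pair in "$@"; do
        printf '    - %s\n' "${pair%%:*}"          # part before the colon
        ips="${ips:+$ips,}${pair#*:}"              # comma-join the IPs
    done
    printf 'spec:\n'
    printf '  pool: %s\n' "$pool"
    printf '  trusted_ip_list: "%s"\n' "$ips"
    printf '  api_user: %s\n' "$user"
    printf '  api_password: %s\n' "$pass"
    printf '  api_secure: false\n'
}
```

For example, `iscsi_spec iscsi_pool admin "$(openssl rand -hex 12)" node1:172.16.8.22 node2:172.16.8.23 node3:172.16.8.24 > iscsi.yaml` produces the same layout. It also replaces the `admin`/`admin` API credentials with a random password.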

Deploy rbd-mirror

[root@node1 ~]# ceph orch apply rbd-mirror --placement=3

Scheduled rbd-mirror update...

[root@node1 ~]# ceph orch ls

NAME RUNNING REFRESHED AGE PLACEMENT IMAGE NAME IMAGE ID

alertmanager 1/1 0s ago 75m count:1 quay.io/prometheus/alertmanager:v0.20.0 0881eb8f169f

crash 3/3 1s ago 76m * quay.io/ceph/ceph:v15 296d7fe77d4e

grafana 1/1 0s ago 75m count:1 quay.io/ceph/ceph-grafana:6.7.4 557c83e11646

iscsi.gw 3/3 1s ago 4m node1;node2;node3 quay.io/ceph/ceph:v15 296d7fe77d4e

mds.cephfs 3/3 1s ago 11m count:3 quay.io/ceph/ceph:v15 296d7fe77d4e

mgr 2/2 1s ago 76m count:2 quay.io/ceph/ceph:v15 296d7fe77d4e

mon 3/5 1s ago 76m count:5 quay.io/ceph/ceph:v15 296d7fe77d4e

nfs.nfs 3/3 1s ago 10m count:3 quay.io/ceph/ceph:v15 296d7fe77d4e

node-exporter 3/3 1s ago 75m * quay.io/prometheus/node-exporter:v0.18.1 e5a616e4b9cf

osd.None 5/0 1s ago - <unmanaged> quay.io/ceph/ceph:v15 296d7fe77d4e

prometheus 1/1 0s ago 75m count:1 quay.io/prometheus/prometheus:v2.18.1 de242295e225

rbd-mirror 3/3 1s ago 6s count:3 quay.io/ceph/ceph:v15 296d7fe77d4e

rgw.myorg.cn-east-1 0/3 1s ago 31m node1;node2;node3;count:3 quay.io/ceph/ceph:v15 <unknown>

[root@node1 ~]# ceph orch ps

NAME HOST STATUS REFRESHED AGE VERSION IMAGE NAME IMAGE ID CONTAINER ID

alertmanager.node1 node1 running (70m) 16s ago 75m 0.20.0 quay.io/prometheus/alertmanager:v0.20.0 0881eb8f169f 1ac2ed70a93d

crash.node1 node1 running (75m) 16s ago 75m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 7e86816b21e4

crash.node2 node2 running (70m) 17s ago 70m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e e7259e86560e

crash.node3 node3 running (70m) 16s ago 70m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 7331bdc2ebe0

grafana.node1 node1 running (75m) 16s ago 75m 6.7.4 quay.io/ceph/ceph-grafana:6.7.4 557c83e11646 636a9055f284

iscsi.gw.node1.dzihur node1 running (5m) 16s ago 5m 3.5 quay.io/ceph/ceph:v15 296d7fe77d4e ebd429cdcacf

iscsi.gw.node2.hbmjvc node2 running (5m) 17s ago 5m 3.5 quay.io/ceph/ceph:v15 296d7fe77d4e 561e91ce82b4

iscsi.gw.node3.ivkbna node3 running (5m) 16s ago 5m 3.5 quay.io/ceph/ceph:v15 296d7fe77d4e 22700c0b7f6e

mds.cephfs.node1.drbqgc node1 running (35m) 16s ago 35m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e b4d05fb6cad0

mds.cephfs.node2.yyqcqw node2 running (35m) 17s ago 35m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 3f3c9422bbd5

mds.cephfs.node3.klxkgm node3 running (35m) 16s ago 35m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 71fd5910f01e

mgr.node1.flvtob node1 running (76m) 16s ago 76m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e d07b5e138d3a

mgr.node2.rrlrgg node2 running (70m) 17s ago 70m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e aa48b78b2a51

mon.node1 node1 running (76m) 16s ago 76m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 0ca6d9fce053

mon.node2 node2 running (70m) 17s ago 70m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 938b49e61b55

mon.node3 node3 running (70m) 16s ago 70m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e f22b488700e3

nfs.nfs.node1 node1 running (11m) 16s ago 11m 3.3 quay.io/ceph/ceph:v15 296d7fe77d4e b55ee3969f19

nfs.nfs.node2 node2 running (11m) 17s ago 11m 3.3 quay.io/ceph/ceph:v15 296d7fe77d4e 5de322652afb

nfs.nfs.node3 node3 running (11m) 16s ago 11m 3.3 quay.io/ceph/ceph:v15 296d7fe77d4e d45725b3b4fd

node-exporter.node1 node1 running (75m) 16s ago 75m 0.18.1 quay.io/prometheus/node-exporter:v0.18.1 e5a616e4b9cf 5265145ccfac

node-exporter.node2 node2 running (70m) 17s ago 70m 0.18.1 quay.io/prometheus/node-exporter:v0.18.1 e5a616e4b9cf 419ffe27a151

node-exporter.node3 node3 running (70m) 16s ago 70m 0.18.1 quay.io/prometheus/node-exporter:v0.18.1 e5a616e4b9cf 55b4b57b2f63

osd.0 node1 running (68m) 16s ago 68m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 03c2c8a94553

osd.1 node2 running (68m) 17s ago 68m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 670b8d4e33a2

osd.2 node3 running (68m) 16s ago 68m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 8a67ad30f08c

osd.3 node3 running (21m) 16s ago 21m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e ba026070d1a2

osd.4 node3 running (21m) 16s ago 21m 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 67ba0baeb5ea

prometheus.node1 node1 running (70m) 16s ago 75m 2.18.1 quay.io/prometheus/prometheus:v2.18.1 de242295e225 0687fc4d2a8a

rbd-mirror.node1.egogjn node1 running (19s) 16s ago 19s 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 99ae86f9ea6f

rbd-mirror.node2.rdilok node2 running (18s) 17s ago 18s 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e fb0abd423ba6

rbd-mirror.node3.yrsdsn node3 running (20s) 16s ago 20s 15.2.16 quay.io/ceph/ceph:v15 296d7fe77d4e 722586e8fd25

rgw.myorg.cn-east-1.node1.ulvfvr node1 error 16s ago 31m <unknown> quay.io/ceph/ceph:v15 <unknown> <unknown>

rgw.myorg.cn-east-1.node2.qlwlov node2 error 17s ago 31m <unknown> quay.io/ceph/ceph:v15 <unknown> <unknown>

rgw.myorg.cn-east-1.node3.tsbpyu node3 error 16s ago 31m <unknown> quay.io/ceph/ceph:v15 <unknown> <unknown>
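To spot services running below their target count, compare the two halves of the RUNNING column in `ceph orch ls`. `degraded_services` is a hypothetical helper. It skips entries whose expected count is 0, such as the unmanaged `osd.None`.

```shell
#!/usr/bin/env bash
# degraded_services
# Hypothetical helper: reads `ceph orch ls` output on stdin and prints
# "NAME RUNNING" for every service whose RUNNING column (2nd field,
# "running/expected") shows fewer running daemons than expected.
degraded_services() {
    awk '$1 != "NAME" {
        split($2, c, "/")
        if (c[2] + 0 > 0 && c[1] + 0 < c[2] + 0) print $1, $2
    }'
}

# Example:
#   ceph orch ls | degraded_services
```

Run against the last `ceph orch ls` output above, it would report `mon` and `rgw.myorg.cn-east-1`. `mon` shows 3/5 because its placement is `count:5` on a three-host cluster. `rgw.myorg.cn-east-1` shows 0/3.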

Final state
