
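After prometheus-operator is installed, its Grafana ships with a default dashboard folder that contains many ready-to-use monitoring dashboards. Here we extend it by adding monitoring and a dashboard for ingress, and use this example to learn how prometheus-operator is used. If you have not installed ingress yet, please look up an installation guide yourself; I may write a post on installing ingress later.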

Adjusting the ingress-controller

Current state of the ingress deployment

[root@k8s-master ~]# kubectl get all -n ingress-nginx
NAME                                       READY   STATUS      RESTARTS   AGE
pod/ingress-nginx-admission-create-fqkpb   0/1     Completed   0          12d
pod/ingress-nginx-admission-patch-xp4vr    0/1     Completed   1          12d
pod/ingress-nginx-controller-cg8vh         1/1     Running     6          12d

NAME                                         TYPE           CLUSTER-IP    EXTERNAL-IP   PORT(S)                      AGE
service/ingress-nginx-controller             LoadBalancer   10.96.38.23   <pending>     80:30645/TCP,443:32540/TCP   12d
service/ingress-nginx-controller-admission   ClusterIP      10.96.162.3   <none>        443/TCP                      12d

NAME                                      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
daemonset.apps/ingress-nginx-controller   1         1         1       1            1           <none>          12d

NAME                                       COMPLETIONS   DURATION   AGE
job.batch/ingress-nginx-admission-create   1/1           5s         12d
job.batch/ingress-nginx-admission-patch    1/1           6s         12d

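For ingress monitoring, the metrics port defaults to 10254 and the metrics path is /metrics. However, the output above shows that my ingress Service does not expose port 10254, and a look at the ingress-controller DaemonSet shows that it does not declare this port in its ports list either.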

[root@k8s-master ~]# kubectl get ds -n ingress-nginx
NAME                       DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
ingress-nginx-controller   1         1         1       1            1           <none>          12d

[root@k8s-master ~]# kubectl get ds -n ingress-nginx ingress-nginx-controller -o yaml

######## (omitted)
    spec:
      containers:
      - args:
        - /nginx-ingress-controller
        - --publish-service=ingress-nginx/ingress-nginx-controller
        - --election-id=ingress-controller-leader
        - --ingress-class=nginx
        - --configmap=ingress-nginx/ingress-nginx-controller
        - --validating-webhook=:8443
        - --validating-webhook-certificate=/usr/local/certificates/cert
        - --validating-webhook-key=/usr/local/certificates/key
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
        image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.32.0
        imagePullPolicy: IfNotPresent
        lifecycle:
          preStop:
            exec:
              command:
              - /wait-shutdown
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        name: controller
        ports:
        - containerPort: 80
          hostPort: 80
          name: http
          protocol: TCP
        - containerPort: 443
          hostPort: 443
          name: https
          protocol: TCP
        - containerPort: 8443
          hostPort: 8443
          name: webhook
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        resources:
          requests:
            cpu: 100m
            memory: 90Mi
######## (omitted)

Given this state, we need to expose port 10254 on both the pod and the Service, so the following adjustments are required.
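The subsections below make both changes by editing the manifests by hand. As an alternative, the same two changes can be written as JSON Patch documents and passed to kubectl patch --type=json. The following is only a sketch under assumptions: it assumes the controller is the first container in the pod template (index 0), it reuses the port names from the manual edits that follow, and the helper function names are made up for illustration. It only prints the commands and does not touch the cluster.

```python
import json

METRICS_PORT = 10254  # default metrics port of the ingress-nginx controller


def daemonset_port_patch(container_index=0):
    """JSON Patch that appends a metrics containerPort to one container.

    Assumption: the controller is the container at `container_index`.
    """
    return [{
        "op": "add",
        "path": f"/spec/template/spec/containers/{container_index}/ports/-",
        "value": {"containerPort": METRICS_PORT, "name": "metrics", "protocol": "TCP"},
    }]


def service_port_patch(port_name="https-metrics"):
    """JSON Patch that appends the metrics port to the Service."""
    return [{
        "op": "add",
        "path": "/spec/ports/-",
        "value": {
            "name": port_name,
            "port": METRICS_PORT,
            "protocol": "TCP",
            "targetPort": METRICS_PORT,
        },
    }]


def kubectl_patch_command(kind, name, namespace, patch):
    """Render the kubectl command line as text; nothing is executed here."""
    return (
        f"kubectl patch {kind} {name} -n {namespace} "
        f"--type=json -p '{json.dumps(patch)}'"
    )


if __name__ == "__main__":
    print(kubectl_patch_command("ds", "ingress-nginx-controller",
                                "ingress-nginx", daemonset_port_patch()))
    print(kubectl_patch_command("svc", "ingress-nginx-controller",
                                "ingress-nginx", service_port_patch()))
```

Review the printed commands before running them; if your controller runs as a Deployment, or is not the first container, the kind and the container index need to change accordingly.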

Expose the metrics port on the pod

# Mine is a DaemonSet; use whichever resource type your own cluster runs the controller as
[root@k8s-master ~]# kubectl edit ds -n ingress-nginx ingress-nginx-controller

######## (omitted)
        name: controller
        ports:
        - containerPort: 80
          hostPort: 80
          name: http
          protocol: TCP
        - containerPort: 443
          hostPort: 443
          name: https
          protocol: TCP
        - containerPort: 8443
          hostPort: 8443
          name: webhook
          protocol: TCP
        # expose the metrics port
        - containerPort: 10254
          name: metrics
          protocol: TCP
######## (omitted)

Expose the metrics port on the Service

[root@k8s-master ~]# kubectl edit -n ingress-nginx svc ingress-nginx-controller

######## (omitted)
  ports:
  - name: http
    nodePort: 30645
    port: 80
    protocol: TCP
    targetPort: http
  - name: https
    nodePort: 32540
    port: 443
    protocol: TCP
    targetPort: https
  # expose the metrics port
  - name: https-metrics
    port: 10254
    protocol: TCP
    targetPort: 10254
######## (omitted)

Verify that the metrics endpoint works

[root@k8s-master ~]# kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.96.38.23 <pending> 80:30645/TCP,443:32540/TCP,10254:31798/TCP 12d

ingress-nginx-controller-admission ClusterIP 10.96.162.3 <none> 443/TCP 12d

[root@k8s-master ~]# curl http://10.96.38.23:10254/metrics

# HELP go_gc_duration_seconds A summary of the pause duration of garbage collection cycles.

# TYPE go_gc_duration_seconds summary

go_gc_duration_seconds{quantile="0"} 1.2897e-05

go_gc_duration_seconds{quantile="0.25"} 0.000107621

go_gc_duration_seconds{quantile="0.5"} 0.000143304

go_gc_duration_seconds{quantile="0.75"} 0.000195811

go_gc_duration_seconds{quantile="1"} 0.000363567

go_gc_duration_seconds_sum 0.002012389

go_gc_duration_seconds_count 13

# HELP go_goroutines Number of goroutines that currently exist.

# TYPE go_goroutines gauge

go_goroutines 97

# HELP go_info Information about the Go environment.

# TYPE go_info gauge

go_info{version="go1.14.2"} 1

# HELP go_memstats_alloc_bytes Number of bytes allocated and still in use.

# TYPE go_memstats_alloc_bytes gauge

go_memstats_alloc_bytes 8.94268e+06

# HELP go_memstats_alloc_bytes_total Total number of bytes allocated, even if freed.

# TYPE go_memstats_alloc_bytes_total counter

go_memstats_alloc_bytes_total 7.0024184e+07

# HELP go_memstats_buck_hash_sys_bytes Number of bytes used by the profiling bucket hash table.

# TYPE go_memstats_buck_hash_sys_bytes gauge

go_memstats_buck_hash_sys_bytes 1.477274e+06

# HELP go_memstats_frees_total Total number of frees.

# TYPE go_memstats_frees_total counter

go_memstats_frees_total 356960

# HELP go_memstats_gc_cpu_fraction The fraction of this program's available CPU time used by the GC since the program started.

# TYPE go_memstats_gc_cpu_fraction gauge

go_memstats_gc_cpu_fraction 3.0767321548476896e-05

# HELP go_memstats_gc_sys_bytes Number of bytes used for garbage collection system metadata.

# TYPE go_memstats_gc_sys_bytes gauge

go_memstats_gc_sys_bytes 3.5986e+06

# HELP go_memstats_heap_alloc_bytes Number of heap bytes allocated and still in use.

# TYPE go_memstats_heap_alloc_bytes gauge

go_memstats_heap_alloc_bytes 8.94268e+06

# HELP go_memstats_heap_idle_bytes Number of heap bytes waiting to be used.

# TYPE go_memstats_heap_idle_bytes gauge

go_memstats_heap_idle_bytes 5.3338112e+07

# HELP go_memstats_heap_inuse_bytes Number of heap bytes that are in use.
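The curl output above is the Prometheus text exposition format: lines starting with # are HELP and TYPE comments, and every other line is a sample made of a metric name, optional labels in braces, and a value. As a rough sketch (not a full parser: it assumes samples carry no trailing timestamp and that label values contain no spaces, commas or escaped quotes, which holds for the lines shown here), a few lines of Python are enough to check that the endpoint returns parseable samples. The function name parse_samples is made up for illustration.

```python
def parse_samples(text):
    """Parse simple Prometheus text-format samples into a dict.

    Keys are (metric_name, frozenset_of_label_pairs); values are floats.
    Comment lines (# HELP / # TYPE) and blank lines are skipped.
    """
    samples = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        # The value is the last space-separated field of the line.
        head, value = line.rsplit(" ", 1)
        name, labels = head, {}
        if "{" in head:
            name, label_part = head.split("{", 1)
            for pair in label_part.rstrip("}").split(","):
                if pair:
                    key, val = pair.split("=", 1)
                    labels[key] = val.strip('"')
        samples[(name, frozenset(labels.items()))] = float(value)
    return samples


if __name__ == "__main__":
    sample_text = """
# TYPE go_gc_duration_seconds summary
go_gc_duration_seconds{quantile="0.5"} 0.000143304
go_gc_duration_seconds_count 13
# TYPE go_goroutines gauge
go_goroutines 97
"""
    parsed = parse_samples(sample_text)
    print(len(parsed), "samples parsed")
    print("go_goroutines =", parsed[("go_goroutines", frozenset())])
```

For anything beyond a quick sanity check, a maintained parser is the safer choice, since the real format also allows timestamps and escaped label values.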

######## (omitted)

Scraping data with ServiceMonitors

ServiceMonitor is one of the CRD resources provided by prometheus-operator; with it, Prometheus discovers and scrapes monitoring targets for us automatically.

[root@k8s-master ~]# kubectl api-resources | grep monitoring

alertmanagers monitoring.coreos.com true Alertmanager

podmonitors monitoring.coreos.com true PodMonitor

prometheuses monitoring.coreos.com true Prometheus

prometheusrules monitoring.coreos.com true PrometheusRule

servicemonitors monitoring.coreos.com true ServiceMonitor

thanosrulers monitoring.coreos.com true ThanosRuler

Take a look at the ServiceMonitors that come with the initial installation:

[root@k8s-master ~]# kubectl get servicemonitors -A

NAMESPACE NAME AGE

monitoring alertmanager 9d

monitoring coredns 9d

monitoring grafana 9d

monitoring kube-apiserver 9d

monitoring kube-controller-manager 9d

monitoring kube-scheduler 9d

monitoring kube-state-metrics 9d

monitoring kubelet 9d

monitoring node-exporter 9d

monitoring prometheus 9d

monitoring prometheus-operator 9d

[root@k8s-master ~]# kubectl get servicemonitors -n monitoring coredns -o yaml

apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"monitoring.coreos.com/v1","kind":"ServiceMonitor","metadata":{"annotations":{},"labels":{"k8s-app":"coredns"},"name":"coredns","namespace":"monitoring"},"spec":{"endpoints":[{"bearerTokenFile":"/var/run/secrets/kubernetes.io/serviceaccount/token","interval":"15s","port":"metrics"}],"jobLabel":"k8s-app","namespaceSelector":{"matchNames":["kube-system"]},"selector":{"matchLabels":{"k8s-app":"kube-dns"}}}}
  creationTimestamp: "2020-08-18T13:14:16Z"
  generation: 1
  labels:
    k8s-app: coredns
  managedFields:
  - apiVersion: monitoring.coreos.com/v1
    fieldsType: FieldsV1
    fieldsV1:
      f:metadata:
        f:annotations:
          .: {}
          f:kubectl.kubernetes.io/last-applied-configuration: {}
        f:labels:
          .: {}
          f:k8s-app: {}
      f:spec:
        .: {}
        f:endpoints: {}
        f:jobLabel: {}
        f:namespaceSelector:
          .: {}
          f:matchNames: {}
        f:selector:
          .: {}
          f:matchLabels:
            .: {}
            f:k8s-app: {}
    manager: kubectl
    operation: Update
    time: "2020-08-18T13:14:16Z"
  name: coredns
  namespace: monitoring
  resourceVersion: "60047"
  selfLink: /apis/monitoring.coreos.com/v1/namespaces/monitoring/servicemonitors/coredns
  uid: 8d06d95c-751e-4f74-ad2c-d9b102a8b2d1
spec:
  endpoints:
  - bearerTokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token
    interval: 15s
    port: metrics
  jobLabel: k8s-app
  namespaceSelector:
    matchNames:
    - kube-system
  selector:
    matchLabels:
      k8s-app: kube-dns

Declaring a ServiceMonitor for ingress-nginx

apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: nginx-ingress
  namespace: monitoring
  labels:
    app.kubernetes.io/component: controller
spec:
  jobLabel: app.kubernetes.io/component
  endpoints:
  - port: https-metrics  # name of the metrics port defined on the ingress Service earlier; this field takes the port name, not a number
    interval: 10s
  selector:
    matchLabels:
      app.kubernetes.io/component: controller  # must match a label on the ingress-nginx-controller Service
  namespaceSelector:
    matchNames:
    - ingress-nginx  # namespace in which the ingress controller runs

[root@k8s-master ~]# kubectl apply -f ingress-nginx-servicemonitors.yaml

servicemonitor.monitoring.coreos.com/nginx-ingress created
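How this ServiceMonitor picks its targets comes down to three checks: the Service must live in one of the namespaces listed in namespaceSelector.matchNames, it must carry every label in selector.matchLabels, and it must have a port whose name equals the endpoint's port. The Python sketch below models only these three checks on plain dictionaries. It is a simplification for illustration (the real operator supports more selector options), the function name is made up, and the Service labels in the example are assumed rather than read from the cluster above.

```python
def matching_ports(service_monitor, service):
    """Return the Service port names a ServiceMonitor would scrape.

    Simplified model: only namespaceSelector.matchNames,
    selector.matchLabels and endpoints[].port are considered.
    """
    spec = service_monitor["spec"]
    meta = service["metadata"]

    namespaces = spec.get("namespaceSelector", {}).get("matchNames")
    if namespaces is None:
        # Without matchNames, only the ServiceMonitor's own namespace is searched.
        namespaces = [service_monitor["metadata"]["namespace"]]
    if meta["namespace"] not in namespaces:
        return []

    wanted = spec.get("selector", {}).get("matchLabels", {})
    labels = meta.get("labels", {})
    if any(labels.get(key) != value for key, value in wanted.items()):
        return []

    port_names = {port.get("name") for port in service["spec"]["ports"]}
    return [ep["port"] for ep in spec["endpoints"] if ep.get("port") in port_names]


if __name__ == "__main__":
    monitor = {
        "metadata": {"name": "nginx-ingress", "namespace": "monitoring"},
        "spec": {
            "endpoints": [{"port": "https-metrics", "interval": "10s"}],
            "selector": {"matchLabels": {"app.kubernetes.io/component": "controller"}},
            "namespaceSelector": {"matchNames": ["ingress-nginx"]},
        },
    }
    service = {
        "metadata": {
            "name": "ingress-nginx-controller",
            "namespace": "ingress-nginx",
            # Assumed labels; check yours with: kubectl get svc -n ingress-nginx --show-labels
            "labels": {"app.kubernetes.io/component": "controller"},
        },
        "spec": {"ports": [{"name": "http"}, {"name": "https"}, {"name": "https-metrics"}]},
    }
    print(matching_ports(monitor, service))
```

A Service that matches the labels but has no port named https-metrics yields no target in this model, which is why the port name on the Service and the one in the ServiceMonitor have to agree.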

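After applying it, we log in to Prometheus again and find that service discovery still shows no ingress target. The logs reveal that Prometheus has no permission to read resources in the ingress-nginx namespace.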

[root@k8s-master ~]# kubectl logs prometheus-k8s-0 -n monitoring -c prometheus

######## (omitted)

level=info ts=2020-08-28T11:09:01.868Z caller=kubernetes.go:190 component="discovery manager notify" discovery=k8s msg="Using pod service account via in-cluster config"

level=error ts=2020-08-28T11:09:01.875Z caller=klog.go:94 component=k8s_client_runtime func=ErrorDepth msg="/app/discovery/kubernetes/kubernetes.go:262: Failed to list *v1.Service: services is forbidden: User \"system:serviceaccount:monitoring:prometheus-k8s\" cannot list resource \"services\" in API group \"\" in the namespace \"ingress-nginx\""

level=error ts=2020-08-28T11:09:01.875Z caller=klog.go:94 component=k8s_client_runtime func=ErrorDepth msg="/app/discovery/kubernetes/kubernetes.go:263: Failed to list *v1.Pod: pods is forbidden: User \"system:serviceaccount:monitoring:prometheus-k8s\" cannot list resource \"pods\" in API group \"\" in the namespace \"ingress-nginx\""

level=error ts=2020-08-28T11:09:01.876Z caller=klog.go:94 component=k8s_client_runtime func=ErrorDepth msg="/app/discovery/kubernetes/kubernetes.go:261: Failed to list *v1.Endpoints: endpoints is forbidden: User \"system:serviceaccount:monitoring:prometheus-k8s\" cannot list resource \"endpoints\" in API group \"\" in the namespace \"ingress-nginx\""

level=info ts=2020-08-28T11:09:01.907Z caller=main.go:762 msg="Completed loading of configuration file" filename=/etc/prometheus/config_out/prometheus.env.yaml

level=error ts=2020-08-28T11:09:02.878Z caller=klog.go:94 component=k8s_client_runtime func=ErrorDepth msg="/app/discovery/kubernetes/kubernetes.go:262: Failed to list *v1.Service: services is forbidden: User \"system:serviceaccount:monitoring:prometheus-k8s\" cannot list resource \"services\" in API group \"\" in the namespace \"ingress-nginx\""

level=error ts=2020-08-28T11:09:02.881Z caller=klog.go:94 component=k8s_client_runtime func=ErrorDepth msg="/app/discovery/kubernetes/kubernetes.go:263: Failed to list *v1.Pod: pods is forbidden: User \"system:serviceaccount:monitoring:prometheus-k8s\" cannot list resource \"pods\" in API group \"\" in the namespace \"ingress-nginx\""

level=error ts=2020-08-28T11:09:02.883Z caller=klog.go:94 component=k8s_client_runtime func=ErrorDepth msg="/app/discovery/kubernetes/kubernetes.go:261: Failed to list *v1.Endpoints: endpoints is forbidden: User \"system:serviceaccount:monitoring:prometheus-k8s\" cannot list resource \"endpoints\" in API group \"\" in the namespace \"ingress-nginx\""

level=error ts=2020-08-28T11:09:03.881Z caller=klog.go:94 component=k8s_client_runtime func=ErrorDepth msg="/app/discovery/kubernetes/kubernetes.go:262: Failed to list *v1.Service: services is forbidden: User \"system:serviceaccount:monitoring:prometheus-k8s\" cannot list resource \"services\" in API group \"\" in the namespace \"ingress-nginx\""

level=error ts=2020-08-28T11:09:03.883Z caller=klog.go:94 component=k8s_client_runtime func=ErrorDepth msg="/app/discovery/kubernetes/kubernetes.go:263: Failed to list *v1.Pod: pods is forbidden: User \"system:serviceaccount:monitoring:prometheus-k8s\" cannot list resource \"pods\" in API group \"\" in the namespace \"ingress-nginx\""

######## (omitted)

It looks like we need to check the role permissions that prometheus-operator set up.

[root@k8s-master ~]# kubectl get clusterroles prometheus-k8s -o yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus-k8s
rules:
- apiGroups:
  - ""
  resources:
  - nodes/metrics
  verbs:
  - get
- nonResourceURLs:
  - /metrics
  verbs:
  - get

# After the change (for example via kubectl edit clusterrole prometheus-k8s), the role reads:
[root@k8s-master ~]# kubectl get clusterroles prometheus-k8s -o yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus-k8s
rules:
- apiGroups:
  - ""
  resources:
  - nodes/metrics
  - pods
  - services
  - endpoints
  verbs:
  - list
  - get
  - watch
- nonResourceURLs:
  - /metrics
  verbs:
  - get
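Why the original ClusterRole produced the "forbidden" errors while the modified one does not can be checked with a small model of RBAC rule evaluation: a request is allowed if some rule lists its API group, its resource and its verb together. The sketch below is a deliberate simplification (no wildcards, no resourceNames, no nonResourceURLs handling), with the rules copied from the two versions of the role shown above; the function name is illustrative.

```python
def is_allowed(rules, verb, resource, api_group=""):
    """Return True if any rule grants `verb` on `resource` in `api_group`.

    Simplified: ignores wildcards, resourceNames and nonResourceURLs.
    """
    for rule in rules:
        if (api_group in rule.get("apiGroups", [])
                and resource in rule.get("resources", [])
                and verb in rule.get("verbs", [])):
            return True
    return False


# Rules of the ClusterRole before the change.
ORIGINAL_RULES = [
    {"apiGroups": [""], "resources": ["nodes/metrics"], "verbs": ["get"]},
    {"nonResourceURLs": ["/metrics"], "verbs": ["get"]},
]

# Rules of the ClusterRole after the change.
MODIFIED_RULES = [
    {"apiGroups": [""],
     "resources": ["nodes/metrics", "pods", "services", "endpoints"],
     "verbs": ["list", "get", "watch"]},
    {"nonResourceURLs": ["/metrics"], "verbs": ["get"]},
]

if __name__ == "__main__":
    for resource in ("services", "pods", "endpoints"):
        before = is_allowed(ORIGINAL_RULES, "list", resource)
        after = is_allowed(MODIFIED_RULES, "list", resource)
        print(f"list {resource}: before={before} after={after}")
```

On a live cluster the same question can be put to the API server directly, for example with kubectl auth can-i list services -n ingress-nginx --as=system:serviceaccount:monitoring:prometheus-k8s.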

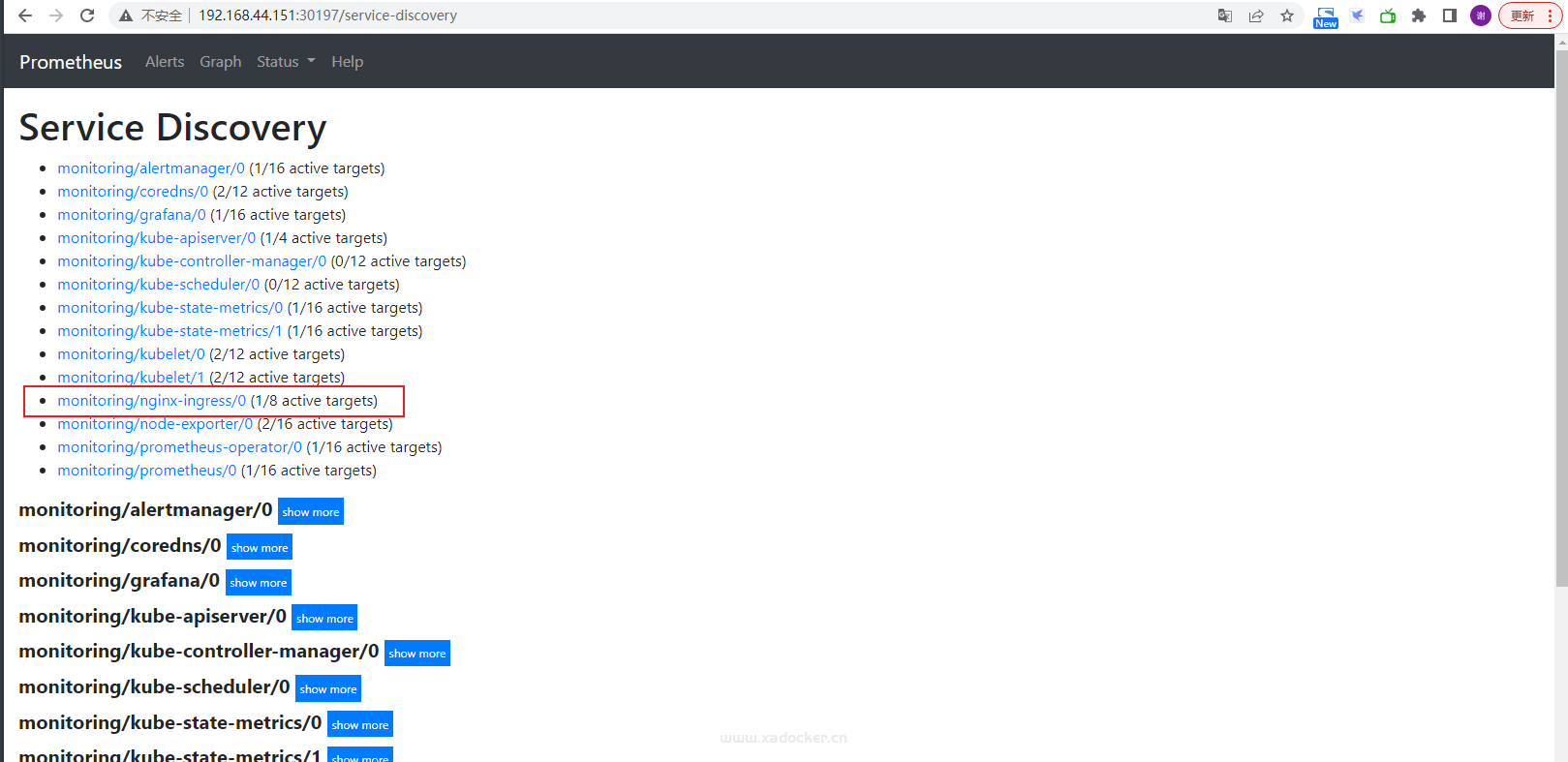

A short while after the change, the ingress-nginx target shows up in Prometheus.

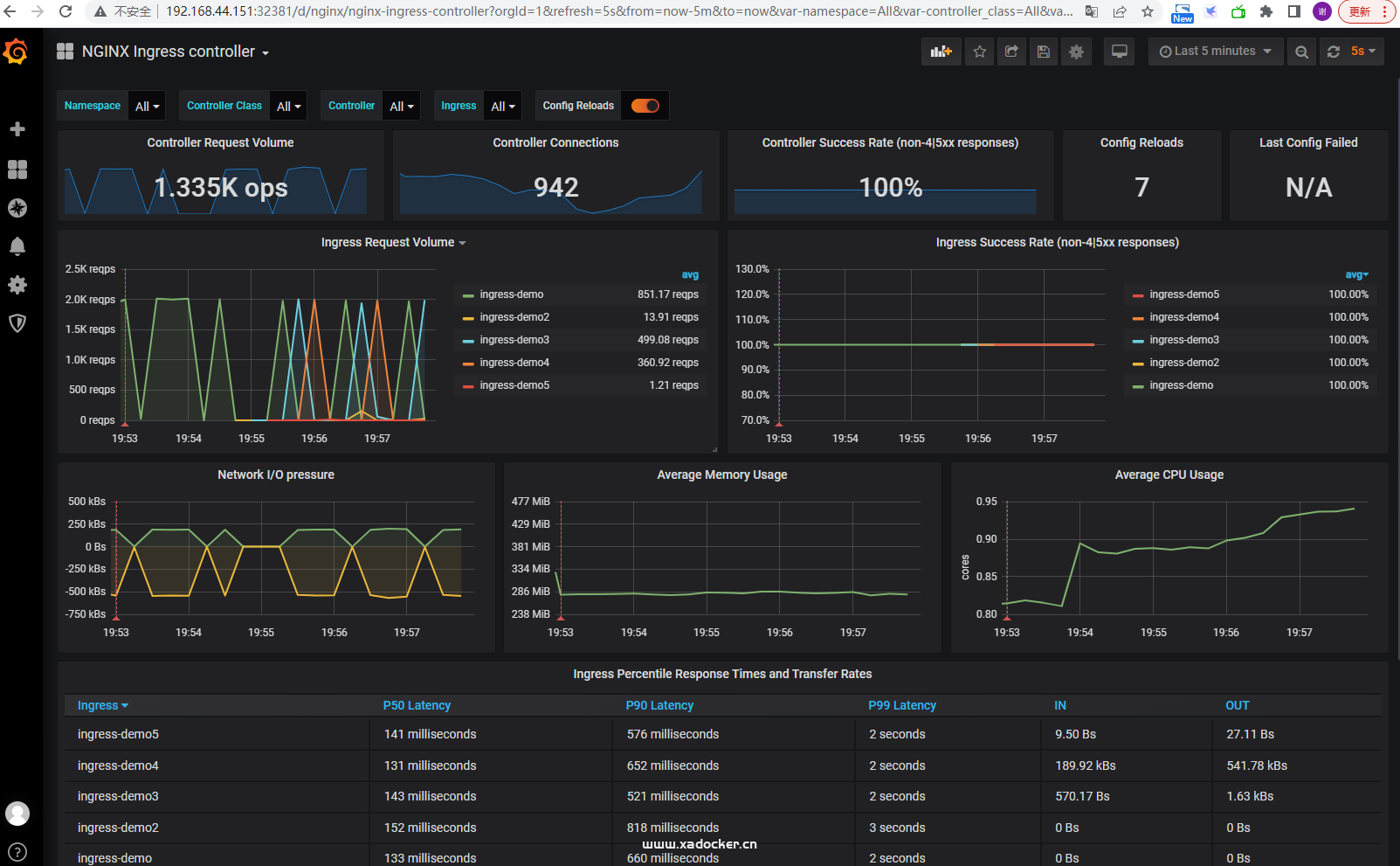

Open Grafana and import the dashboard with ID 9614.
