
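The default prometheus-operator installation automatically sets up ServiceMonitors for the Kubernetes components. However, some components in my cluster run as static pods, so a few adjustments are needed.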

ServiceMonitor for kube-scheduler

View the ServiceMonitor

[root@k8s-master ~]# kubectl get servicemonitor -n monitoring kube-scheduler -o yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"monitoring.coreos.com/v1","kind":"ServiceMonitor","metadata":{"annotations":{},"labels":{"k8s-app":"kube-scheduler"},"name":"kube-scheduler","namespace":"monitoring"},"spec":{"endpoints":[{"interval":"30s","port":"http-metrics"}],"jobLabel":"k8s-app","namespaceSelector":{"matchNames":["kube-system"]},"selector":{"matchLabels":{"k8s-app":"kube-scheduler"}}}}
  creationTimestamp: "2020-08-26T04:47:11Z"
  generation: 1
  labels:
    k8s-app: kube-scheduler
  managedFields:
  - apiVersion: monitoring.coreos.com/v1
    fieldsType: FieldsV1
    fieldsV1:
      f:metadata:
        f:annotations:
          .: {}
          f:kubectl.kubernetes.io/last-applied-configuration: {}
        f:labels:
          .: {}
          f:k8s-app: {}
      f:spec:
        .: {}
        f:endpoints: {}
        f:jobLabel: {}
        f:namespaceSelector:
          .: {}
          f:matchNames: {}
        f:selector:
          .: {}
          f:matchLabels:
            .: {}
            f:k8s-app: {}
    manager: kubectl
    operation: Update
    time: "2020-08-26T04:47:11Z"
  name: kube-scheduler
  namespace: monitoring
  resourceVersion: "869690"
  selfLink: /apis/monitoring.coreos.com/v1/namespaces/monitoring/servicemonitors/kube-scheduler
  uid: 7a2cd417-0682-49e6-89e8-df53d6a9c1b5
spec:
  endpoints:
  - interval: 30s
    port: http-metrics
  jobLabel: k8s-app
  namespaceSelector:
    matchNames:
    - kube-system
  selector:
    matchLabels:
      k8s-app: kube-scheduler

Create a Service for kube-scheduler

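The ServiceMonitor above selects Services in the kube-system namespace that carry the label k8s-app: kube-scheduler, and it scrapes the port named http-metrics. We therefore need to create a Service for kube-scheduler that meets these conditions. kube-scheduler serves its metrics on port 10251.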
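The matching rule described above can be written as a small Python model. This is a simplified sketch and not the Prometheus Operator's actual code. It assumes the ServiceMonitor uses only `namespaceSelector.matchNames`, `selector.matchLabels` and named endpoint `port`s, as the one above does; `matchExpressions`, `any: true` and other options are ignored. The function name `service_matches` is hypothetical. Note that the Service's own `spec.selector` (`component: kube-scheduler`) links the Service to the static pod and is not checked here.

```python
from typing import Any, Dict


def service_matches(monitor_spec: Dict[str, Any], service: Dict[str, Any]) -> bool:
    """Simplified check: would a ServiceMonitor with `monitor_spec` select `service`?

    Only namespaceSelector.matchNames, selector.matchLabels and named endpoint
    ports are modelled; matchExpressions, `any: true` etc. are ignored.
    """
    meta = service.get("metadata", {})
    # 1. The Service must be in one of the listed namespaces (matchNames is required in this sketch).
    if meta.get("namespace") not in monitor_spec.get("namespaceSelector", {}).get("matchNames", []):
        return False
    # 2. Every selector label must be present on the Service with the same value.
    labels = meta.get("labels", {})
    for key, value in monitor_spec.get("selector", {}).get("matchLabels", {}).items():
        if labels.get(key) != value:
            return False
    # 3. An endpoint's `port` is a port *name*; at least one must exist on the Service.
    port_names = {p.get("name") for p in service.get("spec", {}).get("ports", [])}
    return any(ep.get("port") in port_names for ep in monitor_spec.get("endpoints", []))


if __name__ == "__main__":
    monitor = {
        "endpoints": [{"interval": "30s", "port": "http-metrics"}],
        "namespaceSelector": {"matchNames": ["kube-system"]},
        "selector": {"matchLabels": {"k8s-app": "kube-scheduler"}},
    }
    svc = {
        "metadata": {"namespace": "kube-system", "name": "kube-scheduler",
                     "labels": {"k8s-app": "kube-scheduler"}},
        "spec": {"selector": {"component": "kube-scheduler"},
                 "ports": [{"name": "http-metrics", "port": 10251, "targetPort": 10251}]},
    }
    print(service_matches(monitor, svc))  # True
```

If the port were named `metrics` instead of `http-metrics`, or the label differed, the check would return False. In that case Prometheus would show no target for the job at all.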

[root@k8s-master ~]# cat kube-scheduler-svc.yaml
apiVersion: v1
kind: Service
metadata:
  namespace: kube-system
  name: kube-scheduler
  labels:
    k8s-app: kube-scheduler
spec:
  selector:
    component: kube-scheduler
  ports:
  - name: http-metrics
    port: 10251
    targetPort: 10251
    protocol: TCP
[root@k8s-master kube-scheduler]# kubectl apply -f kube-scheduler-svc.yaml
service/kube-scheduler created
[root@k8s-master kube-scheduler]# kubectl get -f kube-scheduler-svc.yaml
NAME             TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)     AGE
kube-scheduler   ClusterIP   10.96.29.112   <none>        10251/TCP   15s

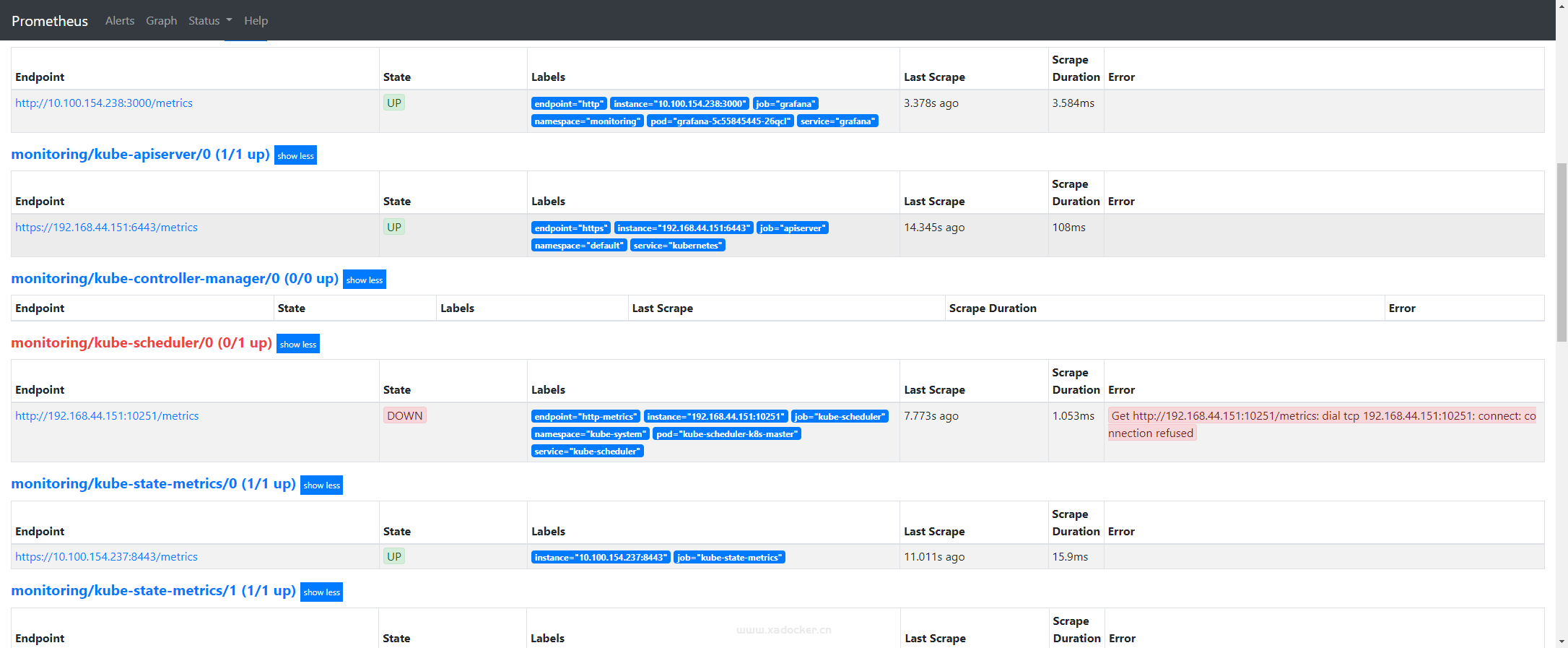

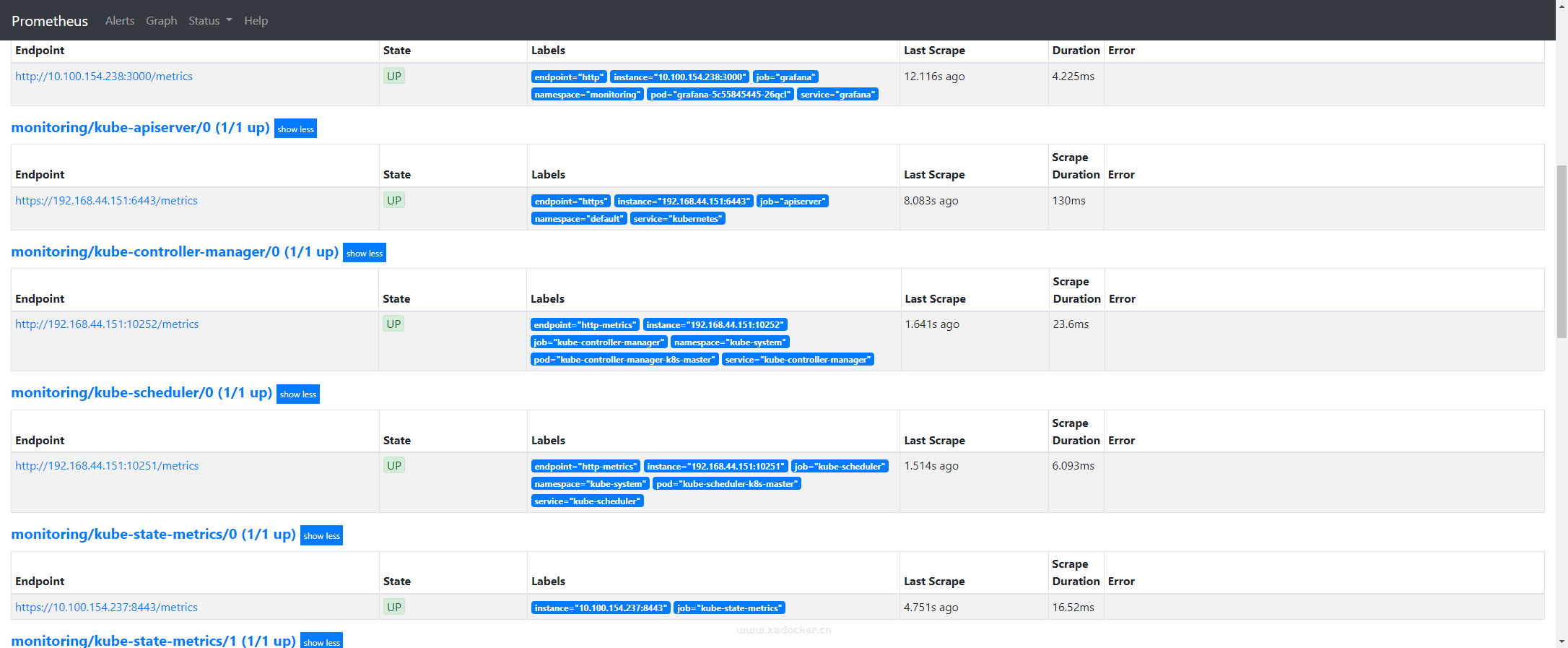

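At this point the component's target in Prometheus has an endpoint, but the endpoint reports that it cannot connect to the address.

A quick curl confirmed that the address really was unreachable. Then I remembered that my kube-scheduler runs as a static pod with hostNetwork, so I checked whether the listen address was the problem: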

[root@k8s-master manifests]# cat kube-scheduler.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    component: kube-scheduler
    tier: control-plane
  name: kube-scheduler
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-scheduler
    - --authentication-kubeconfig=/etc/kubernetes/scheduler.conf
    - --authorization-kubeconfig=/etc/kubernetes/scheduler.conf
    - --bind-address=127.0.0.1
    - --kubeconfig=/etc/kubernetes/scheduler.conf
    - --leader-elect=true
    - --port=0
    image: registry.aliyuncs.com/k8sxio/kube-scheduler:v1.18.9
    imagePullPolicy: IfNotPresent
    livenessProbe:
      failureThreshold: 8
      httpGet:
        host: 127.0.0.1
        path: /healthz
        port: 10259
        scheme: HTTPS
      initialDelaySeconds: 15
      timeoutSeconds: 15
    name: kube-scheduler
    resources:
      requests:
        cpu: 100m
    volumeMounts:
    - mountPath: /etc/kubernetes/scheduler.conf
      name: kubeconfig
      readOnly: true
  hostNetwork: true
  priorityClassName: system-cluster-critical
  volumes:
  - hostPath:
      path: /etc/kubernetes/scheduler.conf
      type: FileOrCreate
    name: kubeconfig
status: {}
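The suspicion here is about where the socket is bound. With hostNetwork: true the scheduler shares the node's network stack. A socket bound to 127.0.0.1 only accepts connections addressed to loopback, whereas Prometheus connects to the node IP. The curl test boils down to "can a TCP connection be opened to host:port". The sketch below performs the same check with only the Python standard library; `port_open` is a hypothetical helper name.

```python
import socket


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers "connection refused", timeouts and unreachable hosts.
        return False


if __name__ == "__main__":
    # Demo: a listener bound to 127.0.0.1 is reachable over loopback only.
    srv = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    srv.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    srv.listen()
    port = srv.getsockname()[1]
    print(port_open("127.0.0.1", port))  # True while the listener is open
    srv.close()
    print(port_open("127.0.0.1", port))  # normally False once it is closed
```

To use it on the master node, compare `port_open("127.0.0.1", 10251)` with `port_open("<node-ip>", 10251)`. If only the loopback check succeeds, the process is bound to 127.0.0.1. If both fail, nothing is listening on that port at all.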

It looks like the listen address has to be changed to 0.0.0.0. After the change, the manifest looks like this:

[root@k8s-master manifests]# cat kube-scheduler.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    component: kube-scheduler
    tier: control-plane
  name: kube-scheduler
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-scheduler
    - --authentication-kubeconfig=/etc/kubernetes/scheduler.conf
    - --authorization-kubeconfig=/etc/kubernetes/scheduler.conf
    - --bind-address=0.0.0.0
    - --kubeconfig=/etc/kubernetes/scheduler.conf
    - --leader-elect=true
    - --port=0
    image: registry.aliyuncs.com/k8sxio/kube-scheduler:v1.18.9
    imagePullPolicy: IfNotPresent
    livenessProbe:
      failureThreshold: 8
      httpGet:
        host: 127.0.0.1
        path: /healthz
        port: 10259
        scheme: HTTPS
      initialDelaySeconds: 15
      timeoutSeconds: 15
    name: kube-scheduler
    resources:
      requests:
        cpu: 100m
    volumeMounts:
    - mountPath: /etc/kubernetes/scheduler.conf
      name: kubeconfig
      readOnly: true
  hostNetwork: true
  priorityClassName: system-cluster-critical
  volumes:
  - hostPath:
      path: /etc/kubernetes/scheduler.conf
      type: FileOrCreate
    name: kubeconfig
status: {}
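You can read a manifest's flags to see which ports it will actually open. The sketch below parses a container's `command` list into flag/value pairs and summarizes the listeners. `parse_flags` and `serving_summary` are hypothetical helpers, not part of kubeadm or kubectl. The defaults are assumed to be those of kube-scheduler v1.18: HTTPS on `--bind-address`:`--secure-port` (10259), and plain HTTP on the deprecated `--address`:`--port` (10251), where `--port=0` turns plain HTTP off. Only the `--flag=value` form is handled.

```python
from typing import Dict, List


def parse_flags(command: List[str]) -> Dict[str, str]:
    """Turn ['kube-scheduler', '--port=0', ...] into {'port': '0', ...}."""
    flags = {}
    for arg in command[1:]:  # command[0] is the binary name
        if arg.startswith("--"):
            # Split on the first '=' only, so values may themselves contain '='.
            name, _, value = arg[2:].partition("=")
            flags[name] = value
    return flags


def serving_summary(command: List[str]) -> Dict[str, str]:
    """Summarize what kube-scheduler listens on (v1.18 defaults assumed)."""
    flags = parse_flags(command)
    # --bind-address and --secure-port govern the HTTPS endpoint.
    secure = f"{flags.get('bind-address', '0.0.0.0')}:{flags.get('secure-port', '10259')}"
    # The deprecated --address/--port pair governs plain HTTP; --port=0 disables it.
    port = flags.get("port", "10251")
    insecure = "disabled" if port == "0" else f"{flags.get('address', '0.0.0.0')}:{port}"
    return {"https": secure, "http": insecure}


if __name__ == "__main__":
    # Command list of the modified manifest above.
    cmd = [
        "kube-scheduler",
        "--authentication-kubeconfig=/etc/kubernetes/scheduler.conf",
        "--authorization-kubeconfig=/etc/kubernetes/scheduler.conf",
        "--bind-address=0.0.0.0",
        "--kubeconfig=/etc/kubernetes/scheduler.conf",
        "--leader-elect=true",
        "--port=0",
    ]
    print(serving_summary(cmd))  # {'https': '0.0.0.0:10259', 'http': 'disabled'}
```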

But port 10251 still isn't listening (+_+)?

[root@k8s-master kube-scheduler]# netstat -tunlp | grep 10251

The startup log shows no errors either:

[root@k8s-master kube-scheduler]# kubectl logs -n kube-system kube-scheduler-k8s-master
I0903 07:27:06.803726 1 registry.go:150] Registering EvenPodsSpread predicate and priority function
I0903 07:27:06.803818 1 registry.go:150] Registering EvenPodsSpread predicate and priority function
I0903 07:27:07.419715 1 serving.go:313] Generated self-signed cert in-memory
I0903 07:27:08.549379 1 registry.go:150] Registering EvenPodsSpread predicate and priority function
I0903 07:27:08.549423 1 registry.go:150] Registering EvenPodsSpread predicate and priority function
I0903 07:27:08.552835 1 configmap_cafile_content.go:202] Starting client-ca::kube-system::extension-apiserver-authentication::client-ca-file
I0903 07:27:08.552946 1 shared_informer.go:223] Waiting for caches to sync for client-ca::kube-system::extension-apiserver-authentication::client-ca-file
I0903 07:27:08.552804 1 configmap_cafile_content.go:202] Starting client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file
I0903 07:27:08.553160 1 shared_informer.go:223] Waiting for caches to sync for client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file
I0903 07:27:08.553441 1 secure_serving.go:178] Serving securely on [::]:10259
I0903 07:27:08.553595 1 tlsconfig.go:240] Starting DynamicServingCertificateController
I0903 07:27:08.653415 1 shared_informer.go:230] Caches are synced for client-ca::kube-system::extension-apiserver-authentication::client-ca-file
I0903 07:27:08.653531 1 shared_informer.go:230] Caches are synced for client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file
I0903 07:27:08.654134 1 leaderelection.go:242] attempting to acquire leader lease kube-system/kube-scheduler...
I0903 07:27:26.377070 1 leaderelection.go:252] successfully acquired lease kube-system/kube-scheduler

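After some searching, I found the cause: the --port=0 startup flag, which turns off the plain-HTTP port 10251 entirely. Commenting that flag out is enough. In this version --bind-address only applies to the HTTPS port 10259 (the log above shows "Serving securely on [::]:10259"), which is why changing it alone did not bring 10251 back. The same flag is also why kubectl get cs reports the scheduler and controller-manager as Unhealthy, because it probes 127.0.0.1:10251 and 127.0.0.1:10252 over plain HTTP: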

[root@k8s-master kube-scheduler]# kubectl get cs
NAME                 STATUS      MESSAGE                                                                                     ERROR
controller-manager   Unhealthy   Get http://127.0.0.1:10252/healthz: dial tcp 127.0.0.1:10252: connect: connection refused
scheduler            Unhealthy   Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: connect: connection refused
etcd-0               Healthy     {"health":"true"}

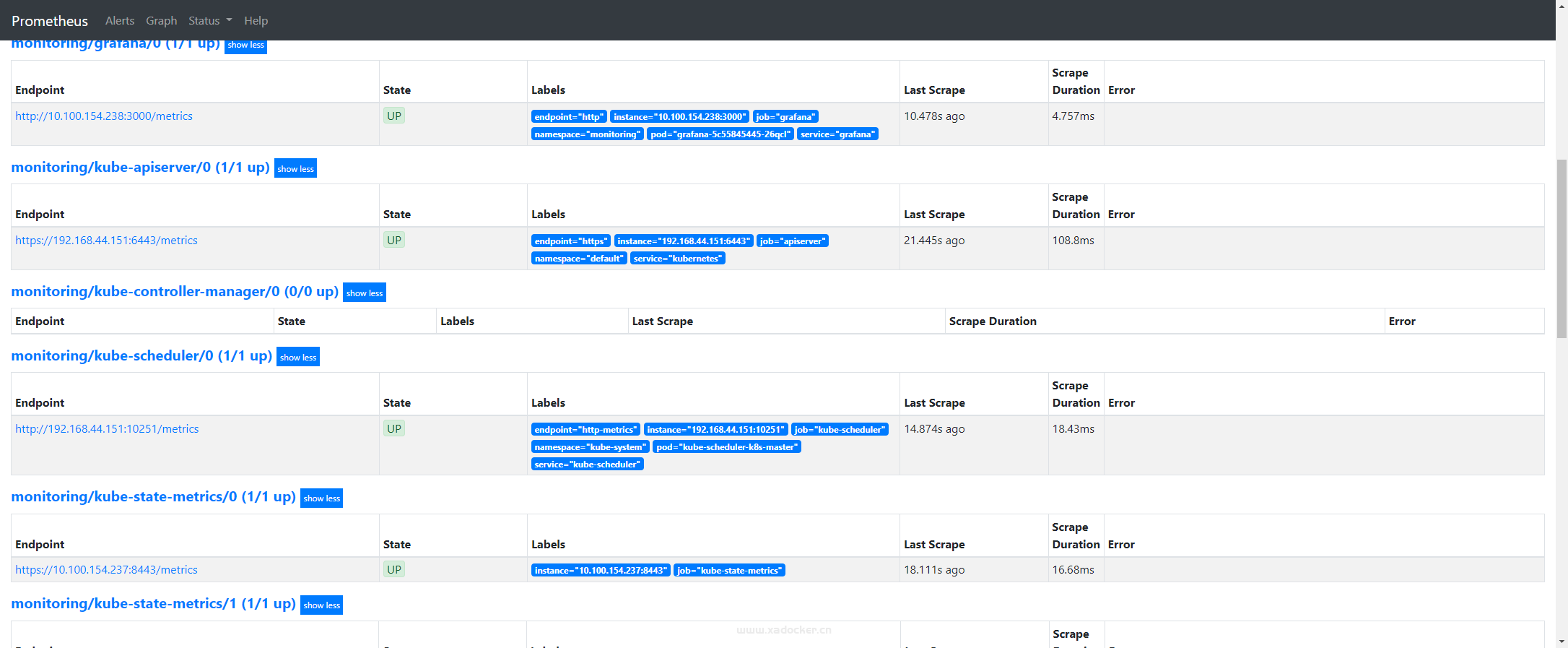

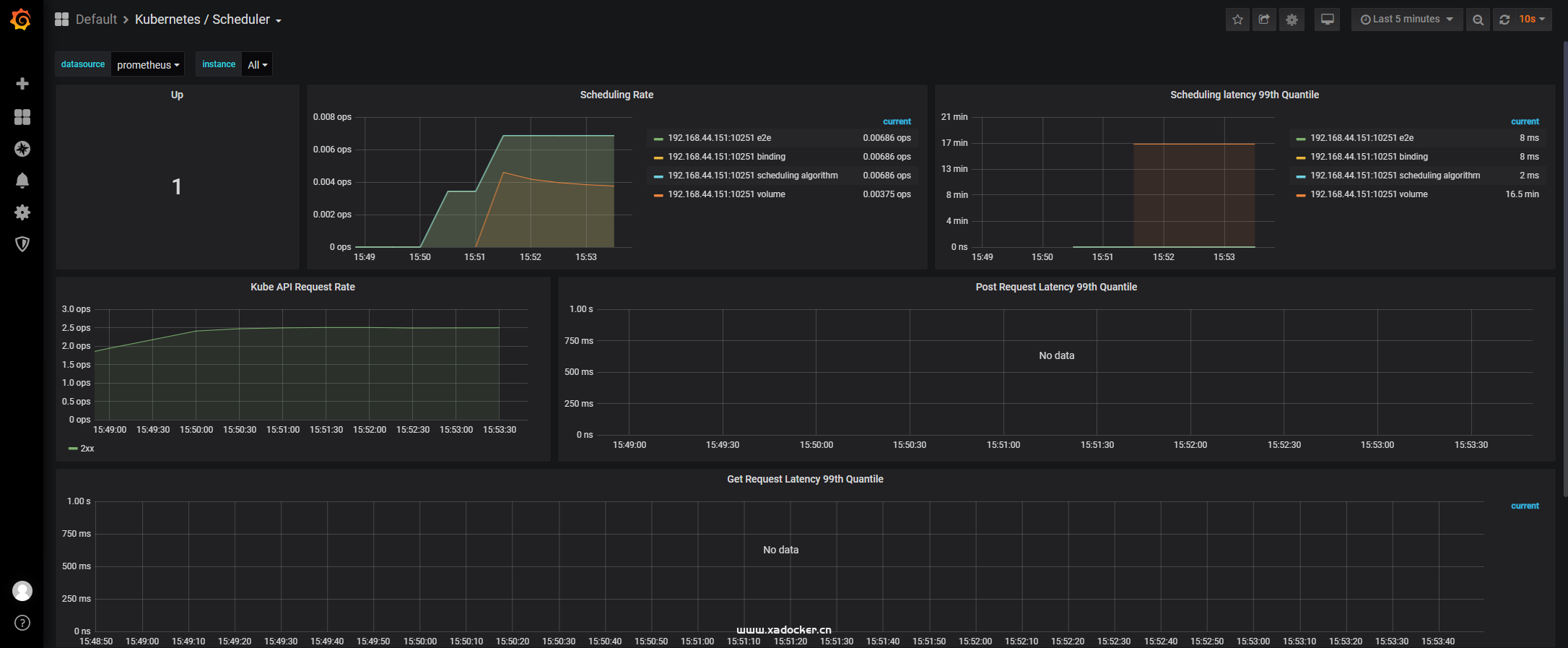

After commenting the flag out, the component's endpoint is back in the UP state.
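Commenting out --port=0 amounts to removing one element from the container's `command` list. If you script this edit on an already-parsed manifest instead of doing it by hand, it could look like the sketch below. `enable_insecure_metrics_port` is a hypothetical helper. Loading and writing the YAML file is left out, since that needs a third-party YAML library. The kubelet recreates a static pod when its manifest file changes, so saving the edited file is what applies the change.

```python
import copy
from typing import Any, Dict


def enable_insecure_metrics_port(pod: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of a static pod manifest with every '--port=0' flag removed."""
    patched = copy.deepcopy(pod)  # leave the caller's dict untouched
    for container in patched["spec"]["containers"]:
        # Keep every argument except the one that disables plain-HTTP serving.
        container["command"] = [arg for arg in container.get("command", []) if arg != "--port=0"]
    return patched


if __name__ == "__main__":
    pod = {"spec": {"containers": [{
        "name": "kube-scheduler",
        "command": ["kube-scheduler", "--bind-address=0.0.0.0", "--leader-elect=true", "--port=0"],
    }]}}
    print(enable_insecure_metrics_port(pod)["spec"]["containers"][0]["command"])
    # ['kube-scheduler', '--bind-address=0.0.0.0', '--leader-elect=true']
```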

ServiceMonitor for kube-controller-manager

View the ServiceMonitor

[root@k8s-master manifests]# kubectl get servicemonitor -n monitoring kube-controller-manager -o yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    k8s-app: kube-controller-manager
  name: kube-controller-manager
  namespace: monitoring
  resourceVersion: "60057"
  selfLink: /apis/monitoring.coreos.com/v1/namespaces/monitoring/servicemonitors/kube-controller-manager
  uid: f2671f29-cc89-40d4-ba5a-ac66fd82530c
spec:
  endpoints:
  - interval: 30s
    metricRelabelings:
    - action: drop
      regex: kubelet_(pod_worker_latency_microseconds|pod_start_latency_microseconds|cgroup_manager_latency_microseconds|pod_worker_start_latency_microseconds|pleg_relist_latency_microseconds|pleg_relist_interval_microseconds|runtime_operations|runtime_operations_latency_microseconds|runtime_operations_errors|eviction_stats_age_microseconds|device_plugin_registration_count|device_plugin_alloc_latency_microseconds|network_plugin_operations_latency_microseconds)
      sourceLabels:
      - __name__
    - action: drop
      regex: scheduler_(e2e_scheduling_latency_microseconds|scheduling_algorithm_predicate_evaluation|scheduling_algorithm_priority_evaluation|scheduling_algorithm_preemption_evaluation|scheduling_algorithm_latency_microseconds|binding_latency_microseconds|scheduling_latency_seconds)
      sourceLabels:
      - __name__
    - action: drop
      regex: apiserver_(request_count|request_latencies|request_latencies_summary|dropped_requests|storage_data_key_generation_latencies_microseconds|storage_transformation_failures_total|storage_transformation_latencies_microseconds|proxy_tunnel_sync_latency_secs)
      sourceLabels:
      - __name__
    - action: drop
      regex: kubelet_docker_(operations|operations_latency_microseconds|operations_errors|operations_timeout)
      sourceLabels:
      - __name__
    - action: drop
      regex: reflector_(items_per_list|items_per_watch|list_duration_seconds|lists_total|short_watches_total|watch_duration_seconds|watches_total)
      sourceLabels:
      - __name__
    - action: drop
      regex: etcd_(helper_cache_hit_count|helper_cache_miss_count|helper_cache_entry_count|request_cache_get_latencies_summary|request_cache_add_latencies_summary|request_latencies_summary)
      sourceLabels:
      - __name__
    - action: drop
      regex: transformation_(transformation_latencies_microseconds|failures_total)
      sourceLabels:
      - __name__
    - action: drop
      regex: (admission_quota_controller_adds|crd_autoregistration_controller_work_duration|APIServiceOpenAPIAggregationControllerQueue1_adds|AvailableConditionController_retries|crd_openapi_controller_unfinished_work_seconds|APIServiceRegistrationController_retries|admission_quota_controller_longest_running_processor_microseconds|crdEstablishing_longest_running_processor_microseconds|crdEstablishing_unfinished_work_seconds|crd_openapi_controller_adds|crd_autoregistration_controller_retries|crd_finalizer_queue_latency|AvailableConditionController_work_duration|non_structural_schema_condition_controller_depth|crd_autoregistration_controller_unfinished_work_seconds|AvailableConditionController_adds|DiscoveryController_longest_running_processor_microseconds|autoregister_queue_latency|crd_autoregistration_controller_adds|non_structural_schema_condition_controller_work_duration|APIServiceRegistrationController_adds|crd_finalizer_work_duration|crd_naming_condition_controller_unfinished_work_seconds|crd_openapi_controller_longest_running_processor_microseconds|DiscoveryController_adds|crd_autoregistration_controller_longest_running_processor_microseconds|autoregister_unfinished_work_seconds|crd_naming_condition_controller_queue_latency|crd_naming_condition_controller_retries|non_structural_schema_condition_controller_queue_latency|crd_naming_condition_controller_depth|AvailableConditionController_longest_running_processor_microseconds|crdEstablishing_depth|crd_finalizer_longest_running_processor_microseconds|crd_naming_condition_controller_adds|APIServiceOpenAPIAggregationControllerQueue1_longest_running_processor_microseconds|DiscoveryController_queue_latency|DiscoveryController_unfinished_work_seconds|crd_openapi_controller_depth|APIServiceOpenAPIAggregationControllerQueue1_queue_latency|APIServiceOpenAPIAggregationControllerQueue1_unfinished_work_seconds|DiscoveryController_work_duration|autoregister_adds|crd_autoregistration_controller_queue_latency|crd_finalizer_retries|AvailableConditionController_unfinished_work_seconds|autoregister_longest_running_processor_microseconds|non_structural_schema_condition_controller_unfinished_work_seconds|APIServiceOpenAPIAggregationControllerQueue1_depth|AvailableConditionController_depth|DiscoveryController_retries|admission_quota_controller_depth|crdEstablishing_adds|APIServiceOpenAPIAggregationControllerQueue1_retries|crdEstablishing_queue_latency|non_structural_schema_condition_controller_longest_running_processor_microseconds|autoregister_work_duration|crd_openapi_controller_retries|APIServiceRegistrationController_work_duration|crdEstablishing_work_duration|crd_finalizer_adds|crd_finalizer_depth|crd_openapi_controller_queue_latency|APIServiceOpenAPIAggregationControllerQueue1_work_duration|APIServiceRegistrationController_queue_latency|crd_autoregistration_controller_depth|AvailableConditionController_queue_latency|admission_quota_controller_queue_latency|crd_naming_condition_controller_work_duration|crd_openapi_controller_work_duration|DiscoveryController_depth|crd_naming_condition_controller_longest_running_processor_microseconds|APIServiceRegistrationController_depth|APIServiceRegistrationController_longest_running_processor_microseconds|crd_finalizer_unfinished_work_seconds|crdEstablishing_retries|admission_quota_controller_unfinished_work_seconds|non_structural_schema_condition_controller_adds|APIServiceRegistrationController_unfinished_work_seconds|admission_quota_controller_work_duration|autoregister_depth|autoregister_retries|kubeproxy_sync_proxy_rules_latency_microseconds|rest_client_request_latency_seconds|non_structural_schema_condition_controller_retries)
      sourceLabels:
      - __name__
    - action: drop
      regex: etcd_(debugging|disk|request|server).*
      sourceLabels:
      - __name__
    port: http-metrics
  jobLabel: k8s-app
  namespaceSelector:
    matchNames:
    - kube-system
  selector:
    matchLabels:
      k8s-app: kube-controller-manager

Create a Service for kube-controller-manager

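The ServiceMonitor above selects Services in the kube-system namespace that carry the label k8s-app: kube-controller-manager, and it scrapes the port named http-metrics. We therefore need a Service for kube-controller-manager that meets these conditions. kube-controller-manager serves its metrics on port 10252.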
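The two Services in this post differ only in the component name and the port. The manifest could therefore be generated rather than hand-written. The sketch below is a hypothetical helper (`metrics_service`) that builds the same structure as the YAML files used here and prints it as JSON. kubectl apply accepts JSON manifests, so the output could be piped into `kubectl apply -f -`. It assumes the kubeadm static pod label `component: <name>` and the `k8s-app` / `http-metrics` conventions of these ServiceMonitors.

```python
import json
from typing import Any, Dict


def metrics_service(component: str, port: int) -> Dict[str, Any]:
    """Build a Service exposing a static-pod control-plane component's metrics port.

    - metadata.labels must match the ServiceMonitor selector (k8s-app=<component>)
    - spec.selector must match the static pod's labels (component=<component>)
    - the port name must match the ServiceMonitor endpoint (http-metrics)
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "namespace": "kube-system",
            "name": component,
            "labels": {"k8s-app": component},
        },
        "spec": {
            "selector": {"component": component},
            "ports": [{"name": "http-metrics", "port": port, "targetPort": port, "protocol": "TCP"}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(metrics_service("kube-controller-manager", 10252), indent=2))
```

With `metrics_service("kube-scheduler", 10251)` it produces the equivalent of kube-scheduler-svc.yaml from the previous section.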

[root@k8s-master ~]# cat >kube-controller-manager-k8s-master-svc.yaml<<-'EOF'
apiVersion: v1
kind: Service
metadata:
  namespace: kube-system
  name: kube-controller-manager
  labels:
    k8s-app: kube-controller-manager
spec:
  selector:
    component: kube-controller-manager
  ports:
  - name: http-metrics
    port: 10252
    targetPort: 10252
    protocol: TCP
EOF

[root@k8s-master ~]# kubectl apply -f kube-controller-manager-k8s-master-svc.yaml
service/kube-controller-manager created

Prometheus reports the same error as it did for kube-scheduler. Apply the same changes to the kube-controller-manager static pod manifest to fix it:

Get http://192.168.44.151:10252/metrics: dial tcp 192.168.44.151:10252: connect: connection refused
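The error shows that a Prometheus scrape of this target is a plain HTTP GET on `http://<node-ip>:10252/metrics`, so the failure can be reproduced outside Prometheus. The sketch below uses only the Python standard library; `scrape` is a hypothetical helper. Its parser is deliberately rough and only handles simple `name{labels} value` lines of the text exposition format, with no timestamps. When nothing is listening on the port, `urlopen` raises `urllib.error.URLError` wrapping the same "connection refused" error.

```python
import urllib.request
from typing import Dict


def scrape(url: str, timeout: float = 5.0) -> Dict[str, float]:
    """Fetch a Prometheus text-format endpoint and return {series: value}.

    Rough parser: '#' comment lines (HELP/TYPE) are skipped and each remaining
    line is split on its last space; lines with timestamps are not supported.
    """
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        body = resp.read().decode("utf-8")
    samples = {}
    for line in body.splitlines():
        if not line or line.startswith("#"):
            continue
        series, _, value = line.rpartition(" ")
        samples[series] = float(value)
    return samples


if __name__ == "__main__":
    # Run on the node; replace the address with your own node IP.
    metrics = scrape("http://192.168.44.151:10252/metrics")
    print(len(metrics), "series scraped")
```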

# After the changes, the components report Healthy
[root@k8s-master manifests]# kubectl get cs
NAME                 STATUS    MESSAGE             ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-0               Healthy   {"health":"true"}
