
Preface

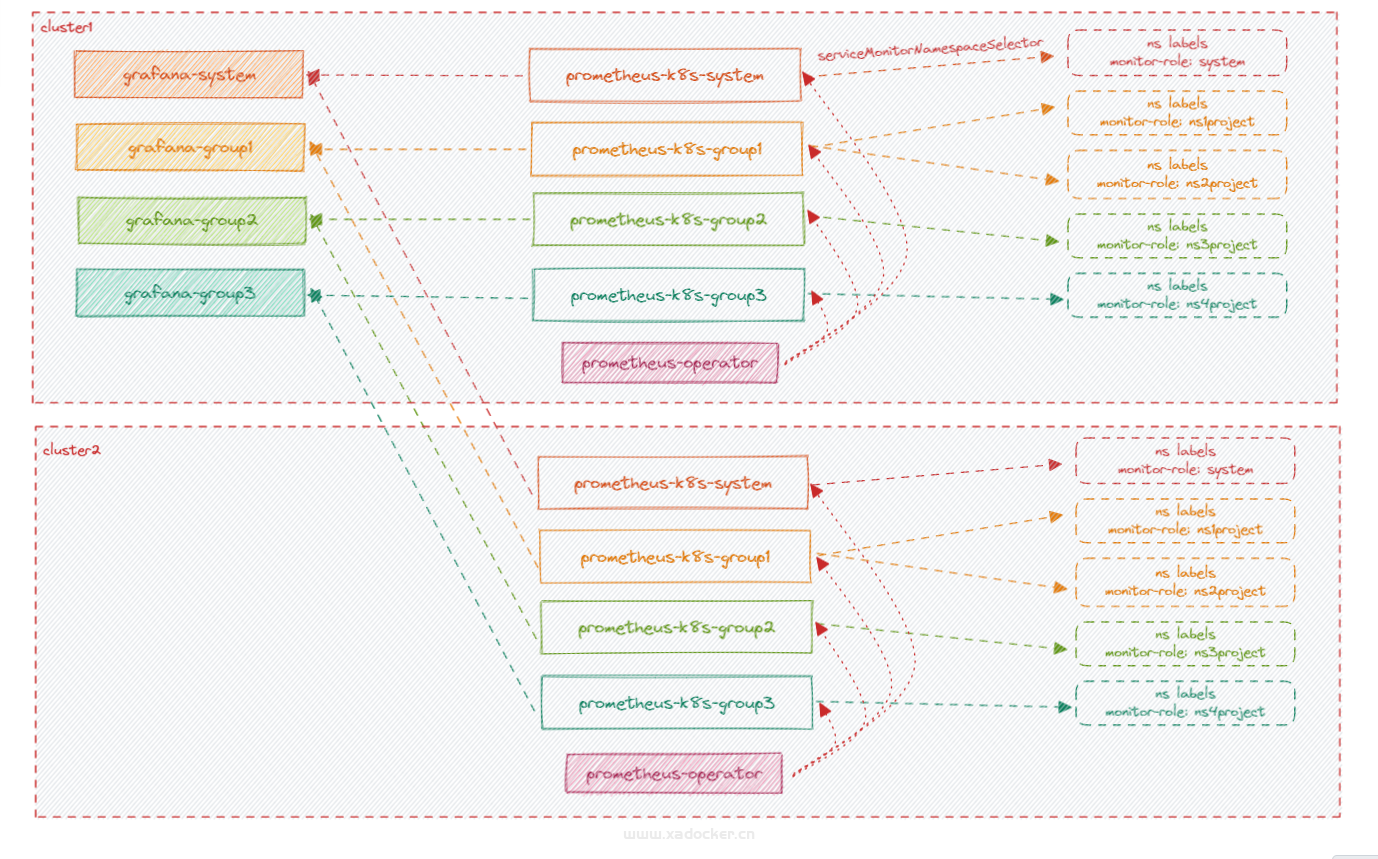

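With multiple technical departments and multiple project systems, we need isolation of both permissions and resources, yet we also want to share resources, since all of it costs money... Sounds a bit contradictory, doesn't it? I currently look after just under 20 k8s clusters with 9600c/19T of total resources. For nationalization-related reasons prometheus was never rolled out (nor were many other cloud-native tools), and I have no idea how they got by ╮(╯▽╰)╭. Recently my boss told me that the request to install prometheus in the test environment has finally, finally, finally been approved. Let's get going (☆▽☆)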

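Requirements:

- On the management side, project groups must be isolated from each other: each group can only see the applications it is responsible for (we isolate by namespace)

- grafana must give project development teams edit permission, to lighten the ops workload

- Save as many resources as possible

After some thought, here is a first plan:

- Point 1: one prometheus instance maps to one TSDB and cannot serve several, so multiple prometheus instances are needed: per cluster, one central prometheus that monitors the cluster components, plus one prometheus instance per namespace that monitors the project group

- Point 2: since project groups get edit permission, they need the Editor role, and that role can freely query every connected data source. I initially assumed grafana offered per-data-source permission isolation, but that turns out to be a commercial-edition feature. So multiple grafana instances are needed as well

- Point 3: given the first two points, there seems to be little left to save... At most the prometheus-operator is shared, and everything else is declared as multiple resources

The initial idea is a per-group management topology. There is no alertmanager here, because the network has no outbound internet access.

The department-level view, one level up, is served by a single grafana whose resource cost is borne by the ops management department. On this grafana only the ops department has edit permission; every other business department has view permission. It provides each department with a department-level resource view meant for department heads, so it will not carry very fine-grained metrics...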

Deploying multiple prometheus instances

Create a prometheus dedicated to scraping the kube-system namespace

# Delete the prometheus that was started when prometheus-operator was installed
[root@k8s-master manifests]# kubectl delete -f prometheus-prometheus.yaml
# Edit prometheus-prometheus.yaml so that it only monitors resources in the kube-system namespace
[root@k8s-master manifests]# cat >prometheus-prometheus.yaml<<-'EOF'
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  labels:
    prometheus: k8s-system
  name: k8s-system
  namespace: monitoring
spec:
  alerting:
    alertmanagers:
    - name: alertmanager-main
      namespace: monitoring
      port: web
  image: quay.mirrors.ustc.edu.cn/prometheus/prometheus:v2.15.2
  nodeSelector:
    kubernetes.io/os: linux
  podMonitorNamespaceSelector:
    matchLabels:
      monitoring-role: system
  podMonitorSelector: {}
  replicas: 1
  resources:
    requests:
      memory: 400Mi
  ruleSelector:
    matchLabels:
      prometheus: k8s
      role: alert-rules
  securityContext:
    fsGroup: 2000
    runAsNonRoot: true
    runAsUser: 1000
  serviceAccountName: prometheus-k8s
  serviceMonitorNamespaceSelector:
    matchLabels:
      monitoring-role: system
  serviceMonitorSelector: {}
  version: v2.15.2
  storage: # add a PVC template; the storage class points at nfs
    volumeClaimTemplate:
      apiVersion: v1
      kind: PersistentVolumeClaim
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 20Gi
        storageClassName: nfs-storage
EOF

Create a prometheus dedicated to scraping the groupX namespaces

[root@k8s-master manifests]# cat >prometheus-prometheus-group1.yaml<<-'EOF'
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  labels:
    prometheus: k8s-group1
  name: k8s-group1
  namespace: monitoring
spec:
  alerting:
    alertmanagers:
    - name: alertmanager-main
      namespace: monitoring
      port: web
  image: quay.mirrors.ustc.edu.cn/prometheus/prometheus:v2.15.2
  nodeSelector:
    kubernetes.io/os: linux
  podMonitorNamespaceSelector:
    matchLabels:
      monitoring-role: group1
  podMonitorSelector: {}
  replicas: 1
  resources:
    requests:
      memory: 400Mi
  ruleSelector:
    matchLabels:
      prometheus: k8s
      role: alert-rules
  securityContext:
    fsGroup: 2000
    runAsNonRoot: true
    runAsUser: 1000
  serviceAccountName: prometheus-k8s
  serviceMonitorNamespaceSelector:
    matchLabels:
      monitoring-role: group1
  serviceMonitorSelector: {}
  version: v2.15.2
  storage: # add a PVC template; the storage class points at nfs
    volumeClaimTemplate:
      apiVersion: v1
      kind: PersistentVolumeClaim
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 20Gi
        storageClassName: nfs-storage
EOF
# The prometheus instances for the other group namespaces are similar
[root@k8s-master manifests]# cp prometheus-prometheus-group1.yaml prometheus-prometheus-group2.yaml
[root@k8s-master manifests]# cp prometheus-prometheus-group1.yaml prometheus-prometheus-group3.yaml
[root@k8s-master manifests]# sed -i 's/group1/group2/g' prometheus-prometheus-group2.yaml
[root@k8s-master manifests]# sed -i 's/group1/group3/g' prometheus-prometheus-group3.yaml
[root@k8s-master manifests]# kubectl apply -f prometheus-prometheus-group1.yaml
prometheus.monitoring.coreos.com/k8s-group1 created
[root@k8s-master manifests]# kubectl apply -f prometheus-prometheus-group2.yaml
prometheus.monitoring.coreos.com/k8s-group2 created
[root@k8s-master manifests]# kubectl apply -f prometheus-prometheus-group3.yaml
prometheus.monitoring.coreos.com/k8s-group3 created

Create the svc (Service) for prometheus

[root@k8s-master manifests]# cat >prometheus-service.yaml<<-'EOF'
apiVersion: v1
kind: Service
metadata:
  labels:
    prometheus: k8s
  name: prometheus-k8s-system
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - name: web
    port: 9090
    targetPort: web
  selector:
    app: prometheus
    prometheus: k8s-system
  sessionAffinity: ClientIP
EOF
[root@k8s-master manifests]# sed 's/system/group1/g' prometheus-service.yaml > prometheus-service-group1.yaml
[root@k8s-master manifests]# sed 's/system/group2/g' prometheus-service.yaml > prometheus-service-group2.yaml
[root@k8s-master manifests]# sed 's/system/group3/g' prometheus-service.yaml > prometheus-service-group3.yaml

The cluster now has several prometheus instances

[root@k8s-master manifests]# kubectl get prometheus -n monitoring --show-labels
NAME         VERSION   REPLICAS   AGE   LABELS
k8s-group1   v2.15.2   1          63s   prometheus=k8s-group1
k8s-group2   v2.15.2   1          55s   prometheus=k8s-group2
k8s-group3   v2.15.2   1          46s   prometheus=k8s-group3
k8s-system   v2.15.2   1          88m   prometheus=k8s-system
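The cp and sed steps used above to derive the group2 and group3 manifests can be sketched as a loop, which may be handier once there are more than a few groups. This is only a sketch: `render_group` is a hypothetical helper, and the heredoc is a trimmed stand-in for prometheus-prometheus-group1.yaml written to a temporary directory so the snippet runs without a cluster.

```shell
# Sketch: derive per-group manifests from the group1 file with a loop.
# render_group is a hypothetical helper, not part of kube-prometheus.
set -eu

# render_group TEMPLATE GROUP: print TEMPLATE with every "group1" replaced by GROUP.
render_group() {
  sed "s/group1/$2/g" "$1"
}

# Trimmed stand-in for prometheus-prometheus-group1.yaml (assumption: the real
# file carries the full spec shown earlier).
workdir="$(mktemp -d)"
cat >"${workdir}/prometheus-prometheus-group1.yaml" <<'EOF'
metadata:
  labels:
    prometheus: k8s-group1
  name: k8s-group1
spec:
  serviceMonitorNamespaceSelector:
    matchLabels:
      monitoring-role: group1
EOF

# One output file per additional group.
for group in group2 group3; do
  render_group "${workdir}/prometheus-prometheus-group1.yaml" "$group" \
    >"${workdir}/prometheus-prometheus-${group}.yaml"
done

# Count the lines that now mention group2 in the generated file.
grep -c "group2" "${workdir}/prometheus-prometheus-group2.yaml"
```

The substitution rewrites every occurrence of group1, so the template must not contain that string anywhere it is supposed to stay fixed.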

Create test namespaces

# Create the test namespaces
[root@k8s-master manifests]# kubectl create ns group1
namespace/group1 created
[root@k8s-master manifests]# kubectl create ns group2
namespace/group2 created
[root@k8s-master manifests]# kubectl create ns group3
namespace/group3 created
# Label the namespaces
[root@k8s-master manifests]# kubectl label ns group1 monitoring-role=group1
namespace/group1 labeled
[root@k8s-master manifests]# kubectl label ns group2 monitoring-role=group2
namespace/group2 labeled
[root@k8s-master manifests]# kubectl label ns group3 monitoring-role=group3
namespace/group3 labeled
[root@k8s-master manifests]# kubectl label ns kube-system monitoring-role=system
namespace/kube-system labeled
# Check the current namespace labels
[root@k8s-master manifests]# kubectl get ns --show-labels
NAME              STATUS   AGE     LABELS
default           Active   3h43m   <none>
group1            Active   2m46s   monitoring-role=group1
group2            Active   2m44s   monitoring-role=group2
group3            Active   2m42s   monitoring-role=group3
kube-node-lease   Active   3h43m   <none>
kube-public       Active   3h43m   <none>
kube-system       Active   3h43m   monitoring-role=system
monitoring        Active   3h41m   <none>
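Onboarding one more group repeats the same two commands, and the label value has to match the monitoring-role used in that group's prometheus selectors. A minimal sketch that only prints the commands for review instead of running them; `onboard_group_cmds` is a hypothetical helper and group4 is an example name, neither comes from the setup above.

```shell
# Sketch: print (not run) the commands that onboard one more group namespace.
# onboard_group_cmds is a hypothetical helper; review its output before
# piping it to a shell.
set -eu

# onboard_group_cmds GROUP: emit the create and label commands for GROUP.
onboard_group_cmds() {
  printf 'kubectl create ns %s\n' "$1"
  # The label value must equal monitoring-role in the group's Prometheus selectors.
  printf 'kubectl label ns %s monitoring-role=%s\n' "$1" "$1"
}

# Example: show what would be run for a new group named group4.
onboard_group_cmds group4
```

Printing first keeps the step auditable, which matters when the same script is reused across many clusters.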

Create a sample app in each namespace

[root@k8s-master example-app]# cat >group1-example-app.yaml<<-'EOF'
kind: Service
apiVersion: v1
metadata:
  name: example-app
  labels:
    tier: frontend
    group: group1
  namespace: group1
spec:
  selector:
    app: example-app
  ports:
  - name: web
    protocol: TCP
    port: 8080
    targetPort: web
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: example-app
  namespace: group1
spec:
  selector:
    matchLabels:
      app: example-app
      version: 1.1.3
      group: group1
  replicas: 4
  template:
    metadata:
      labels:
        app: example-app
        version: 1.1.3
        group: group1
    spec:
      containers:
      - name: example-app
        image: quay.io/fabxc/prometheus_demo_service
        ports:
        - name: web
          containerPort: 8080
          protocol: TCP
EOF
[root@k8s-master example-app]# sed 's/group1/group2/g' group1-example-app.yaml > group2-example-app.yaml
[root@k8s-master example-app]# sed 's/group1/group3/g' group1-example-app.yaml > group3-example-app.yaml
[root@k8s-master example-app]# kubectl apply -f group1-example-app.yaml
service/example-app created
deployment.apps/example-app created
[root@k8s-master example-app]# kubectl apply -f group2-example-app.yaml
service/example-app created
deployment.apps/example-app created
[root@k8s-master example-app]# kubectl apply -f group3-example-app.yaml
service/example-app created
deployment.apps/example-app created

Create a servicemonitor in each namespace

[root@k8s-master example-app]# cat >servicemonitor-frontend-group1.yaml<<-'EOF'
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: monitor-frontend
  namespace: group1
  labels:
    tier: frontend
    group: group1
spec:
  selector:
    matchLabels:
      tier: frontend
      group: group1
  targetLabels:
  - tier
  - group
  endpoints:
  - port: web
    interval: 10s
  namespaceSelector:
    matchNames:
    - group1
EOF
[root@k8s-master example-app]# sed 's/group1/group2/g' servicemonitor-frontend-group1.yaml > servicemonitor-frontend-group2.yaml
[root@k8s-master example-app]# sed 's/group1/group3/g' servicemonitor-frontend-group1.yaml > servicemonitor-frontend-group3.yaml
# List all current servicemonitors; migrate the ones under the monitoring namespace to kube-system yourself...
[root@k8s-master example-app]# kubectl get servicemonitor -A
NAMESPACE     NAME                      AGE
group1        monitor-frontend          9m
group2        monitor-frontend          8s
group3        monitor-frontend          3s
kube-system   kube-apiserver            4h12m
monitoring    alertmanager              4h12m
monitoring    coredns                   4h12m
monitoring    grafana                   4h12m
monitoring    kube-controller-manager   4h12m
monitoring    kube-scheduler            4h12m
monitoring    kube-state-metrics        4h12m
monitoring    kubelet                   4h12m
monitoring    node-exporter             4h12m
monitoring    prometheus                4h12m
monitoring    prometheus-operator       4h12m

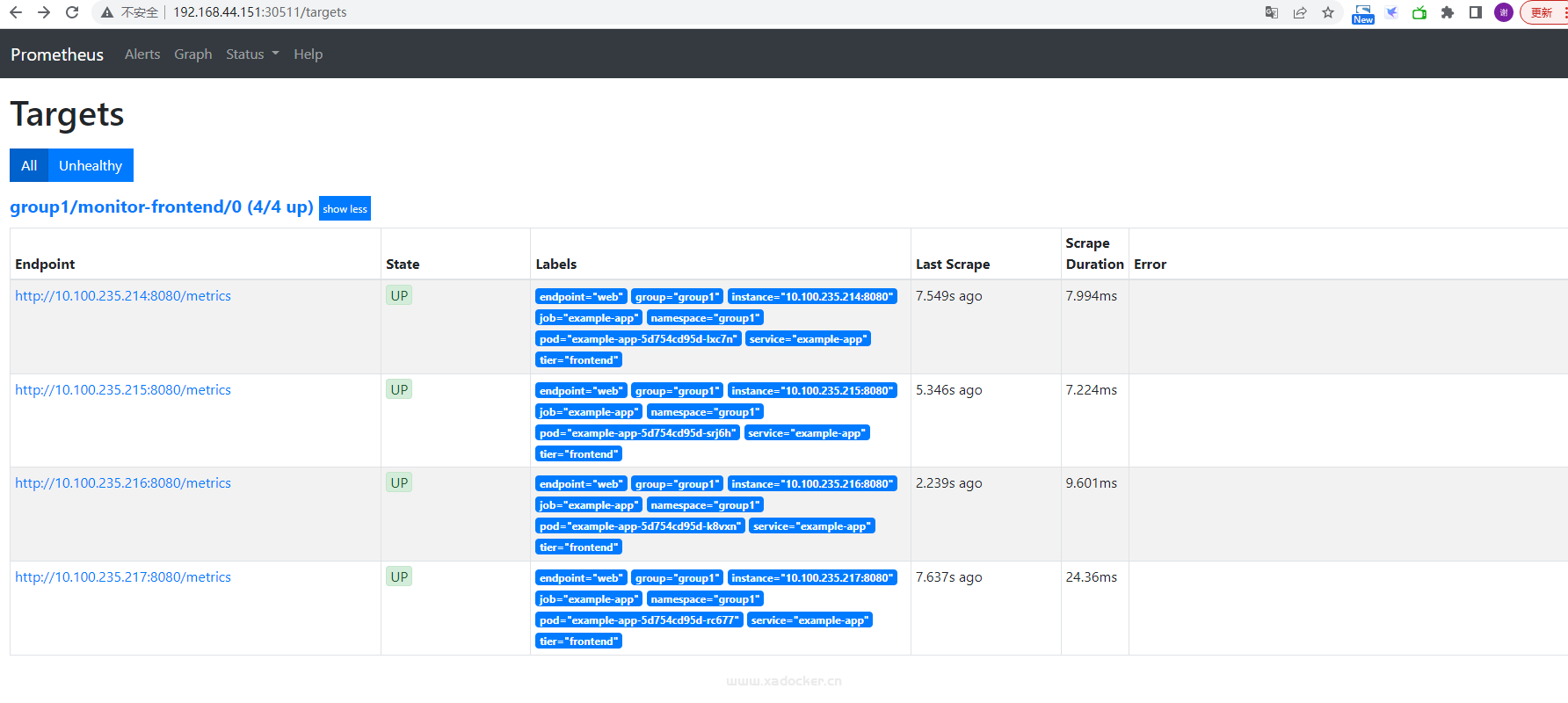

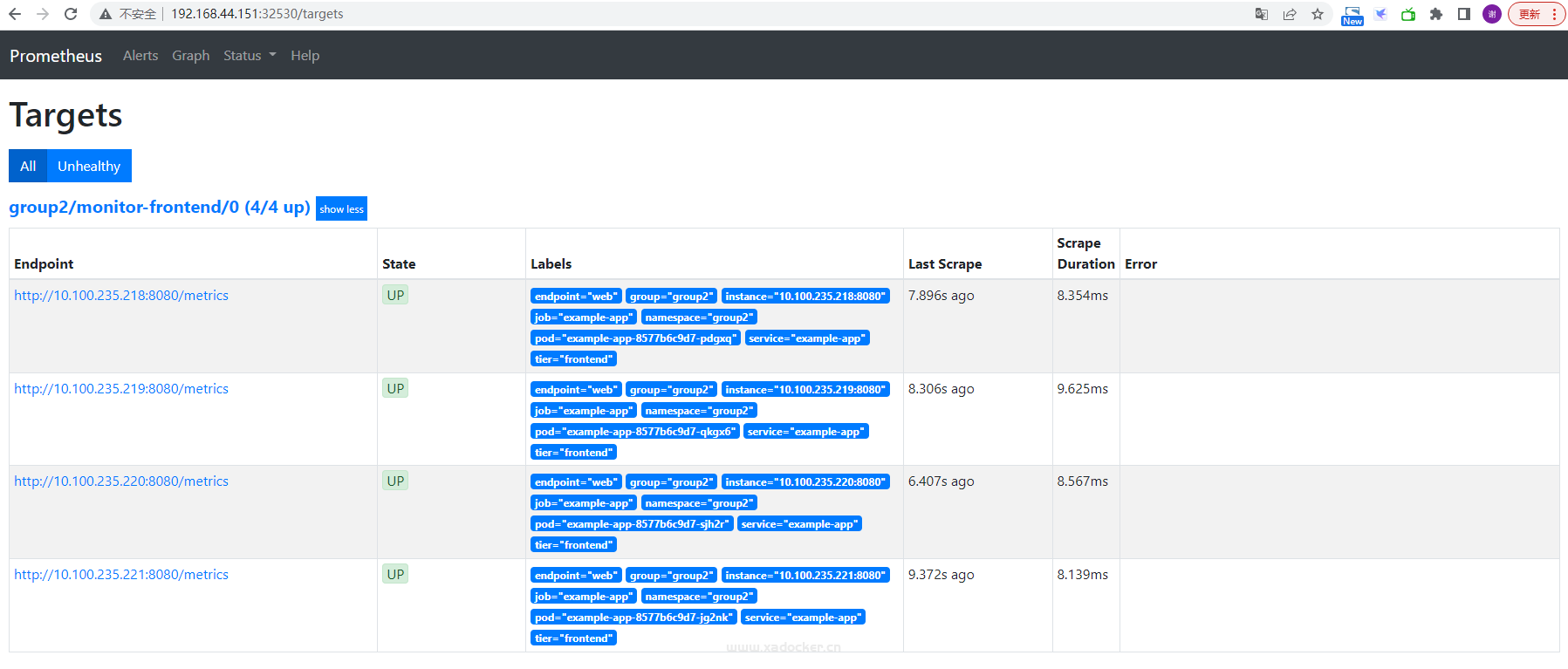

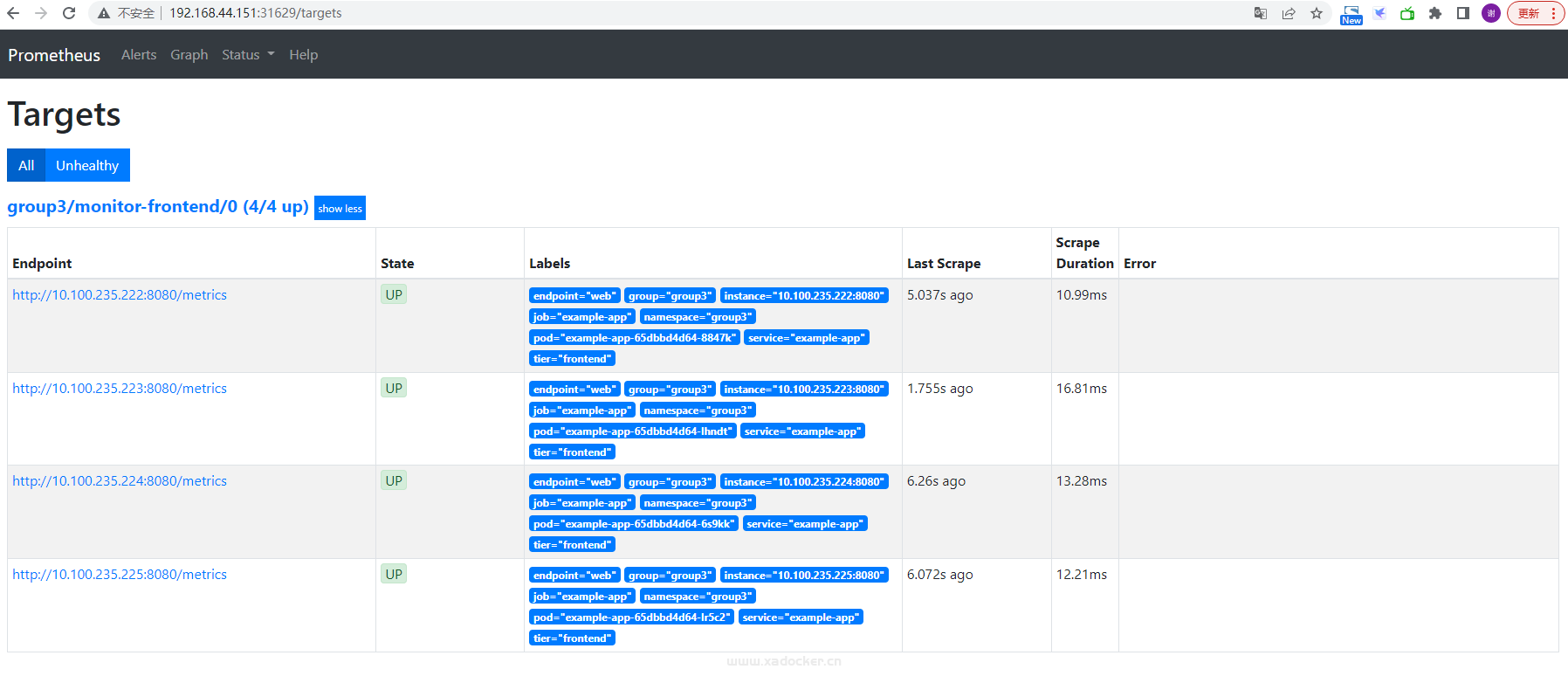

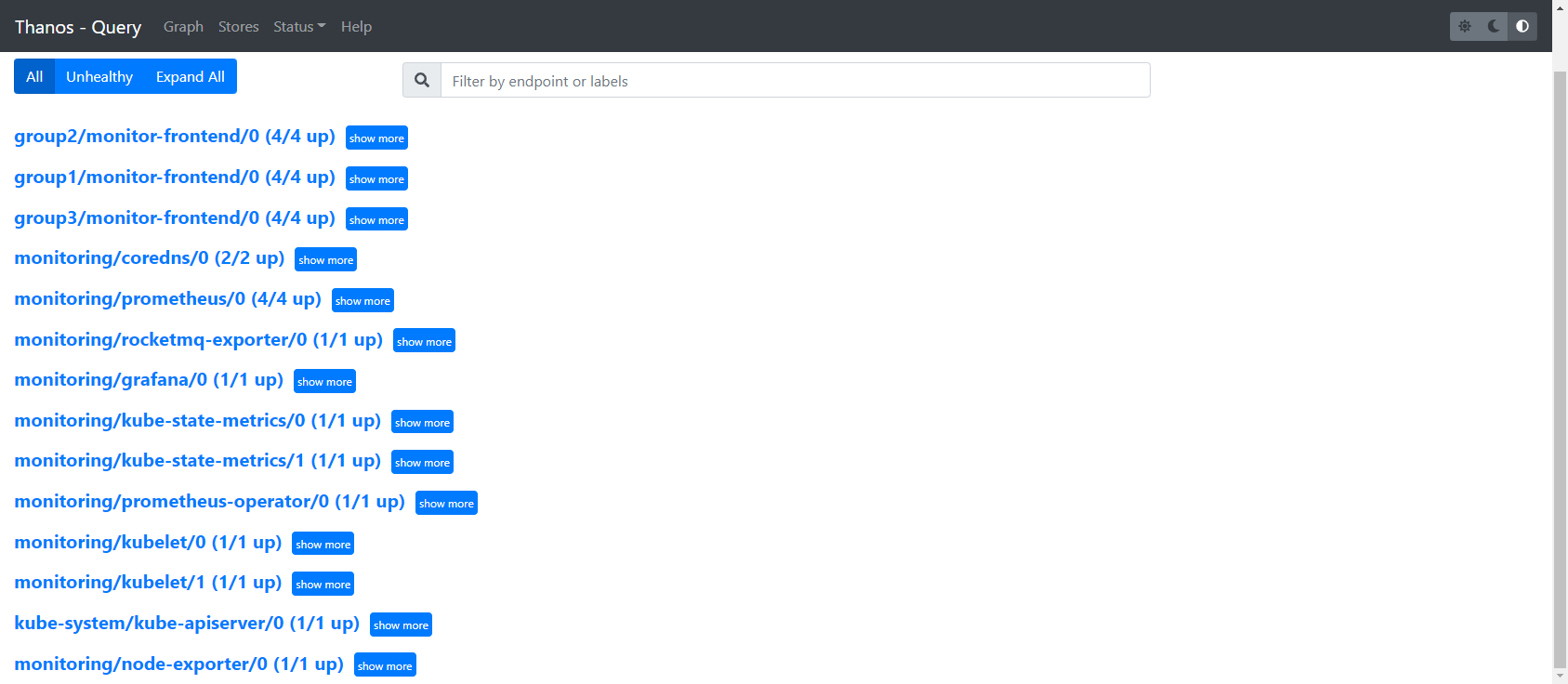

View the current targets of each prometheus

At this point each prometheus monitors the resources of a specific namespace. Next comes deploying multiple grafana instances.

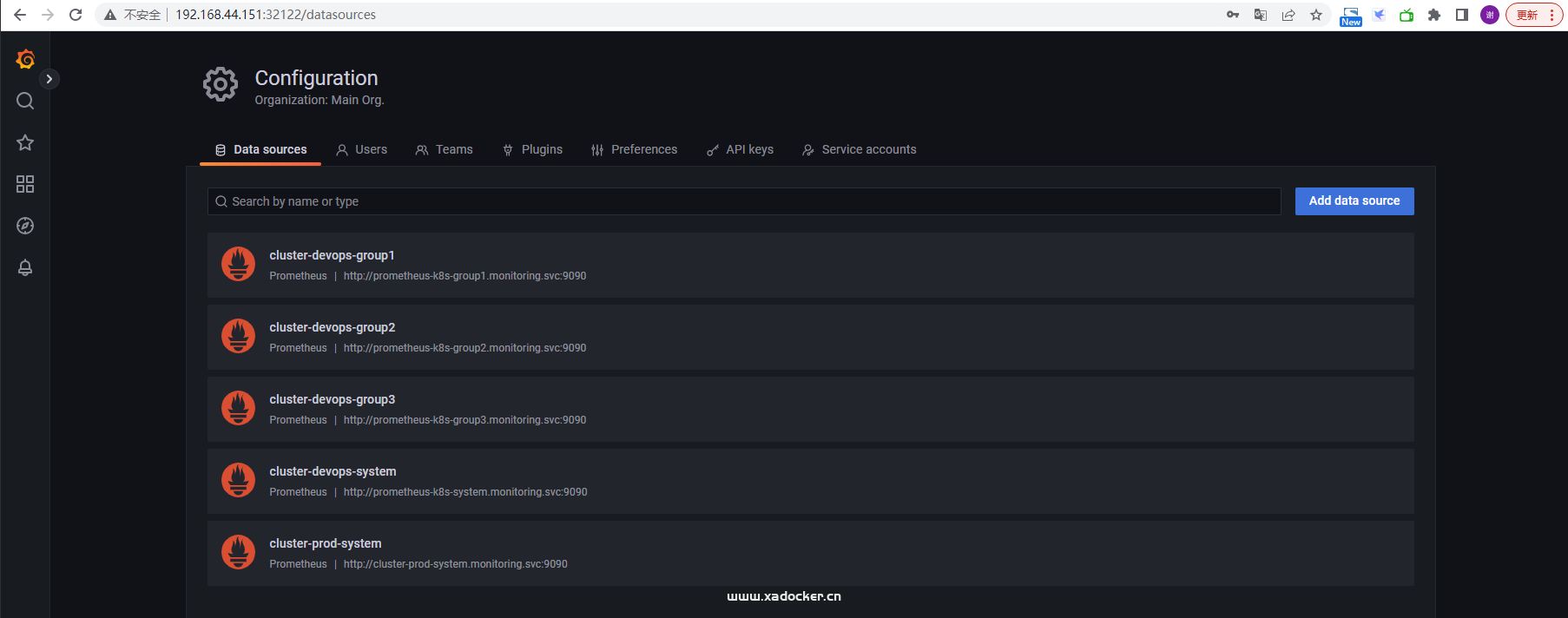

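Connecting grafana to multiple data sources

Here only one grafana is created and connected to multiple data sources. To create multiple grafana instances, adapt grafana-deployment.yaml yourself, then connect each grafana to different data sources and assign them to the departments.

Configuring grafana with a configmap

grafana dashboardDatasources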

[root@k8s-master manifests]# cat grafana-dashboardDatasources.yaml

apiVersion: v1
data:
  datasources.yaml: ewogICAgImFwaVZlcnNpb24iOiAxLAogICAgImRhdGFzb3VyY2VzIjogWwogICAgICAgIHsKICAgICAgICAgICAgImFjY2VzcyI6ICJwcm94eSIsCiAgICAgICAgICAgICJlZGl0YWJsZSI6IGZhbHNlLAogICAgICAgICAgICAibmFtZSI6ICJwcm9tZXRoZXVzIiwKICAgICAgICAgICAgIm9yZ0lkIjogMSwKICAgICAgICAgICAgInR5cGUiOiAicHJvbWV0aGV1cyIsCiAgICAgICAgICAgICJ1cmwiOiAiaHR0cDovL3Byb21ldGhldXMtazhzLm1vbml0b3Jpbmcuc3ZjOjkwOTAiLAogICAgICAgICAgICAidmVyc2lvbiI6IDEKICAgICAgICB9CiAgICBdCn0=
kind: Secret
metadata:
  name: grafana-datasources
  namespace: monitoring
type: Opaque

[root@k8s-master manifests]# echo 'ewogICAgImFwaVZlcnNpb24iOiAxLAogICAgImRhdGFzb3VyY2VzIjogWwogICAgICAgIHsKICAgICAgICAgICAgImFjY2VzcyI6ICJwcm94eSIsCiAgICAgICAgICAgICJlZGl0YWJsZSI6IGZhbHNlLAogICAgICAgICAgICAibmFtZSI6ICJwcm9tZXRoZXVzIiwKICAgICAgICAgICAgIm9yZ0lkIjogMSwKICAgICAgICAgICAgInR5cGUiOiAicHJvbWV0aGV1cyIsCiAgICAgICAgICAgICJ1cmwiOiAiaHR0cDovL3Byb21ldGhldXMtazhzLm1vbml0b3Jpbmcuc3ZjOjkwOTAiLAogICAgICAgICAgICAidmVyc2lvbiI6IDEKICAgICAgICB9CiAgICBdCn0=' | base64 -d

{
    "apiVersion": 1,
    "datasources": [
        {
            "access": "proxy",
            "editable": false,
            "name": "prometheus",
            "orgId": 1,
            "type": "prometheus",
            "url": "http://prometheus-k8s.monitoring.svc:9090",
            "version": 1
        }
    ]
}

# The above is the content of the original file; add the corresponding data sources based on it
[root@k8s-master manifests]# cat >datasource.json<<-'EOF'
{
    "apiVersion": 1,
    "datasources": [
        {
            "access": "proxy",
            "editable": false,
            "name": "cluster-devops-group1",
            "orgId": 1,
            "type": "prometheus",
            "url": "http://prometheus-k8s-group1.monitoring.svc:9090",
            "version": 1
        },
        {
            "access": "proxy",
            "editable": false,
            "name": "cluster-devops-group2",
            "orgId": 1,
            "type": "prometheus",
            "url": "http://prometheus-k8s-group2.monitoring.svc:9090",
            "version": 1
        },
        {
            "access": "proxy",
            "editable": false,
            "name": "cluster-devops-group3",
            "orgId": 1,
            "type": "prometheus",
            "url": "http://prometheus-k8s-group3.monitoring.svc:9090",
            "version": 1
        },
        {
            "access": "proxy",
            "editable": false,
            "name": "cluster-devops-system",
            "orgId": 1,
            "type": "prometheus",
            "url": "http://prometheus-k8s-system.monitoring.svc:9090",
            "version": 1
        }
    ]
}
EOF

[root@k8s-master manifests]# cat datasource.json |base64 | tr -d '\n'

ewogICAgImFwaVZlcnNpb24iOiAxLAogICAgImRhdGFzb3VyY2VzIjogWwogICAgICAgIHsKICAgICAgICAgICAgImFjY2VzcyI6ICJwcm94eSIsCiAgICAgICAgICAgICJlZGl0YWJsZSI6IGZhbHNlLAogICAgICAgICAgICAibmFtZSI6ICJjbHVzdGVyLWRldm9wcy1ncm91cDEiLAogICAgICAgICAgICAib3JnSWQiOiAxLAogICAgICAgICAgICAidHlwZSI6ICJwcm9tZXRoZXVzIiwKICAgICAgICAgICAgInVybCI6ICJodHRwOi8vcHJvbWV0aGV1cy1rOHMtZ3JvdXAxLm1vbml0b3Jpbmcuc3ZjOjkwOTAiLAogICAgICAgICAgICAidmVyc2lvbiI6IDEKICAgICAgICB9LAogICAgICAgIHsKICAgICAgICAgICAgImFjY2VzcyI6ICJwcm94eSIsCiAgICAgICAgICAgICJlZGl0YWJsZSI6IGZhbHNlLAogICAgICAgICAgICAibmFtZSI6ICJjbHVzdGVyLWRldm9wcy1ncm91cDIiLAogICAgICAgICAgICAib3JnSWQiOiAxLAogICAgICAgICAgICAidHlwZSI6ICJwcm9tZXRoZXVzIiwKICAgICAgICAgICAgInVybCI6ICJodHRwOi8vcHJvbWV0aGV1cy1rOHMtZ3JvdXAyLm1vbml0b3Jpbmcuc3ZjOjkwOTAiLAogICAgICAgICAgICAidmVyc2lvbiI6IDEKICAgICAgICB9LAogICAgICAgIHsKICAgICAgICAgICAgImFjY2VzcyI6ICJwcm94eSIsCiAgICAgICAgICAgICJlZGl0YWJsZSI6IGZhbHNlLAogICAgICAgICAgICAibmFtZSI6ICJjbHVzdGVyLWRldm9wcy1ncm91cDMiLAogICAgICAgICAgICAib3JnSWQiOiAxLAogICAgICAgICAgICAidHlwZSI6ICJwcm9tZXRoZXVzIiwKICAgICAgICAgICAgInVybCI6ICJodHRwOi8vcHJvbWV0aGV1cy1rOHMtZ3JvdXAzLm1vbml0b3Jpbmcuc3ZjOjkwOTAiLAogICAgICAgICAgICAidmVyc2lvbiI6IDEKICAgICAgICB9LAogICAgICAgIHsKICAgICAgICAgICAgImFjY2VzcyI6ICJwcm94eSIsCiAgICAgICAgICAgICJlZGl0YWJsZSI6IGZhbHNlLAogICAgICAgICAgICAibmFtZSI6ICJjbHVzdGVyLWRldm9wcy1zeXN0ZW0iLAogICAgICAgICAgICAib3JnSWQiOiAxLAogICAgICAgICAgICAidHlwZSI6ICJwcm9tZXRoZXVzIiwKICAgICAgICAgICAgInVybCI6ICJodHRwOi8vcHJvbWV0aGV1cy1rOHMtc3lzdGVtLm1vbml0b3Jpbmcuc3ZjOjkwOTAiLAogICAgICAgICAgICAidmVyc2lvbiI6IDEKICAgICAgICB9CiAgICBdCn0gCg==

# Write the base64-encoded content above back into grafana-dashboardDatasources.yaml, then recreate the grafana pod
[root@k8s-master manifests]# kubectl replace -f grafana-dashboardDatasources.yaml
[root@k8s-master manifests]# kubectl delete pods -n monitoring grafana-7fbbc6cb58-zssh5

The data sources configured above belong to this cluster. External data is connected through nodeport or ingress; nodeport is used here.

# prometheus-system in the prod cluster
[root@k8s-master manifests]# kubectl get svc -n monitoring | grep k8s
prometheus-k8s-system   NodePort   10.96.98.221   <none>   9090:32551/TCP,10901:32712/TCP   6s
# Back on the central control cluster, create a svc/ep for the prod cluster
[root@k8s-master thanos]# cat >cluster-prod-system.yaml<<-'EOF'
---
apiVersion: v1
kind: Endpoints
metadata:
  name: cluster-prod-system
  namespace: monitoring
subsets:
- addresses:
  - ip: 192.168.44.169
  ports:
  - name: http
    port: 32551
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/instance: cluster-prod-system
  name: cluster-prod-system
  namespace: monitoring
spec:
  ports:
  - name: http
    port: 9090
    protocol: TCP
    targetPort: http
  type: ClusterIP
EOF
[root@k8s-master thanos]# kubectl apply -f cluster-prod-system.yaml
endpoints/cluster-prod-system created
service/cluster-prod-system created
[root@k8s-master thanos]# kubectl get -f cluster-prod-system.yaml
NAME                            ENDPOINTS              AGE
endpoints/cluster-prod-system   192.168.44.169:32551   4s

NAME                          TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
service/cluster-prod-system   ClusterIP   10.96.224.101   <none>        9090/TCP   4s
# Edit grafana-dashboardDatasources.yaml again and add the data source cluster-prod-system.monitoring.svc:9090; omitted here

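At this point our grafana can connect to the data sources of multiple project groups. One grafana per department is enough; within a department, use grafana teams to separate the groups, which gives each department self-governance.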
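The datasource JSON written by hand earlier grows by one near-identical entry per prometheus instance, so it can also be generated. The following is a sketch under stated assumptions: `datasource_entry` and `render_datasources` are hypothetical helpers, and the cluster-devops-<suffix> names and prometheus-k8s-<suffix>.monitoring.svc URLs simply follow the pattern used in this post.

```shell
# Sketch: render the datasources JSON for a list of instance suffixes and
# base64-encode it. datasource_entry and render_datasources are hypothetical
# helpers; names and URLs follow the pattern used in this post.
set -eu

# datasource_entry SUFFIX: one entry, without a trailing newline so the
# caller can decide whether a comma follows.
datasource_entry() {
  printf '        {\n'
  printf '            "access": "proxy",\n'
  printf '            "editable": false,\n'
  printf '            "name": "cluster-devops-%s",\n' "$1"
  printf '            "orgId": 1,\n'
  printf '            "type": "prometheus",\n'
  printf '            "url": "http://prometheus-k8s-%s.monitoring.svc:9090",\n' "$1"
  printf '            "version": 1\n'
  printf '        }'
}

# render_datasources SUFFIX...: the full document, entries separated by commas.
render_datasources() {
  first=1
  printf '{\n    "apiVersion": 1,\n    "datasources": [\n'
  for suffix in "$@"; do
    if [ "$first" -eq 0 ]; then printf ',\n'; fi
    datasource_entry "$suffix"
    first=0
  done
  printf '\n    ]\n}\n'
}

# Encode on a single line, the form used in the Secret's data field.
encoded="$(render_datasources group1 group2 group3 system | base64 | tr -d '\n')"

# Decode again to check the round trip before pasting it into the Secret.
printf '%s' "$encoded" | base64 -d
```

An external cluster such as cluster-prod-system does not fit the naming pattern, so its entry would still need to be added separately.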

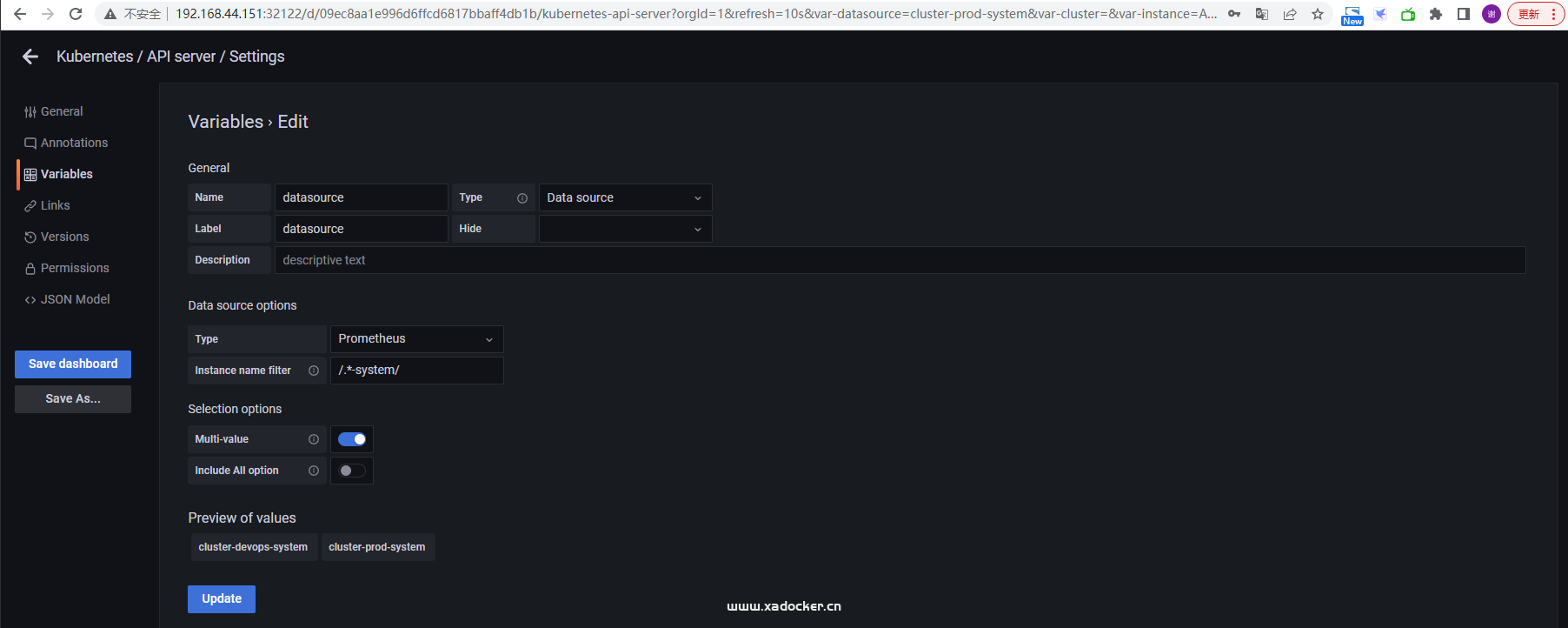

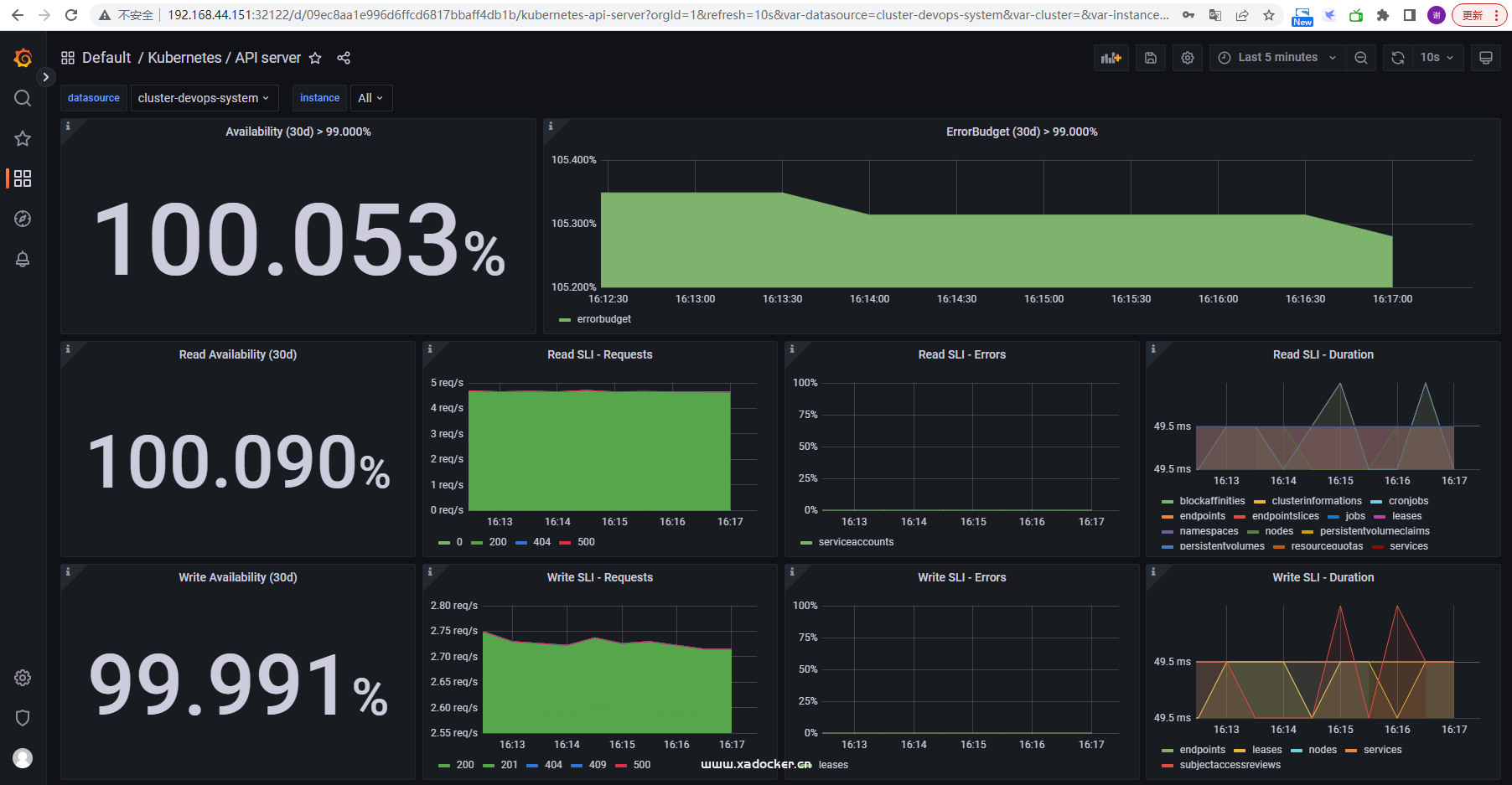

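grafana dashboard panel JSON

Once the clusters are partitioned this way, the dashboards for the system namespace need adjusting: we have to filter each dashboard's datasource variable (otherwise many unrelated data sources show up). Take the apiserver dashboard as an example.

The modification above cannot be saved in place. Export the modified JSON and recreate the dashboard from it. The dashboard resources are defined in grafana-dashboardDefinitions.yaml; use it as a reference and create the corresponding configmap for this JSON.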

[root@k8s-master manifests]# kubectl get cm -n monitoring | grep das

grafana-dashboard-apiserver 1 4d3h

grafana-dashboard-cluster-total 1 4d3h

grafana-dashboard-controller-manager 1 4d3h

grafana-dashboard-k8s-resources-cluster 1 4d3h

grafana-dashboard-k8s-resources-namespace 1 4d3h

grafana-dashboard-k8s-resources-node 1 4d3h

grafana-dashboard-k8s-resources-pod 1 4d3h

grafana-dashboard-k8s-resources-workload 1 4d3h

grafana-dashboard-k8s-resources-workloads-namespace 1 4d3h

grafana-dashboard-kubelet 1 4d3h

grafana-dashboard-namespace-by-pod 1 4d3h

grafana-dashboard-namespace-by-workload 1 4d3h

grafana-dashboard-node-cluster-rsrc-use 1 4d3h

grafana-dashboard-node-rsrc-use 1 4d3h

grafana-dashboard-nodes 1 4d3h

grafana-dashboard-persistentvolumesusage 1 4d3h

grafana-dashboard-pod-total 1 4d3h

grafana-dashboard-prometheus 1 4d3h

grafana-dashboard-prometheus-remote-write 1 4d3h

grafana-dashboard-proxy 1 4d3h

grafana-dashboard-scheduler 1 4d3h

grafana-dashboard-statefulset 1 4d3h

grafana-dashboard-workload-total 1 4d3h

grafana-dashboards 1 4d3h

# Prepare apiserver.json yourself
[root@k8s-master manifests]# kubectl -n monitoring delete cm grafana-dashboard-apiserver
[root@k8s-master manifests]# kubectl -n monitoring create cm grafana-dashboard-apiserver --from-file=apiserver.json
# The dashboard mounts are defined in grafana-deployment.yaml; add new ones by following it

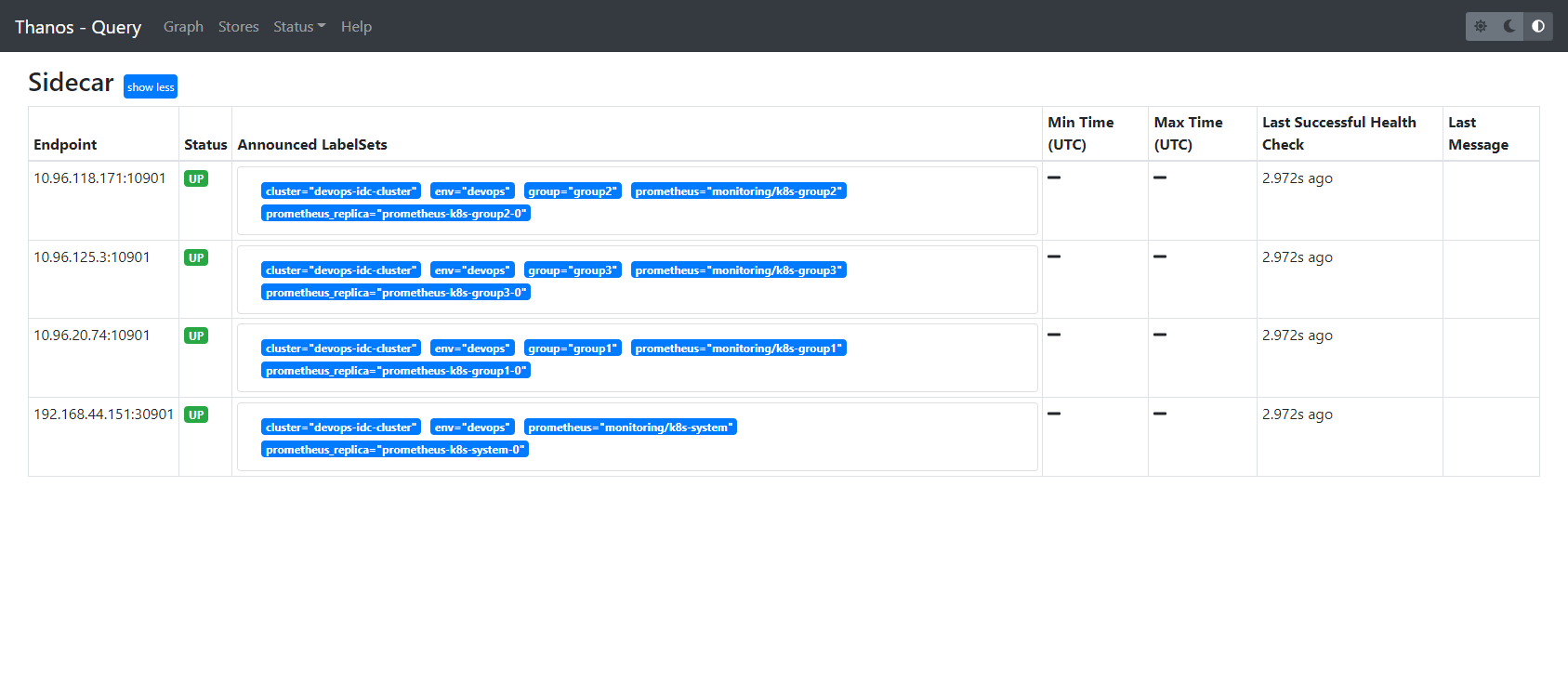

Using thanos to improve the administrator view

Deploy the thanos sidecar

[root@k8s-master manifests]# cat prometheus-prometheus.yaml
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  labels:
    prometheus: k8s-system
  name: k8s-system
  namespace: monitoring
spec:
  alerting:
    alertmanagers:
    - name: alertmanager-main
      namespace: monitoring
      port: web
  image: quay.mirrors.ustc.edu.cn/prometheus/prometheus:v2.15.2
  nodeSelector:
    kubernetes.io/os: linux
  podMonitorNamespaceSelector:
    matchLabels:
      monitoring-role: system
  podMonitorSelector: {}
  replicas: 1
  resources:
    requests:
      memory: 400Mi
  ruleSelector:
    matchLabels:
      prometheus: k8s
      role: alert-rules
  securityContext:
    fsGroup: 2000
    runAsNonRoot: true
    runAsUser: 1000
  serviceAccountName: prometheus-k8s
  serviceMonitorNamespaceSelector:
    matchLabels:
      monitoring-role: system
  serviceMonitorSelector: {}
  version: v2.15.2
  externalLabels:
    env: devops # change this label when deploying to a different environment
    cluster: devops-idc-cluster # change this label when deploying to a different environment
  storage: # add a PVC template; the storage class points at nfs
    volumeClaimTemplate:
      apiVersion: v1
      kind: PersistentVolumeClaim
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 5Gi
        storageClassName: nfs-storage
  thanos: # add the thanos-sidecar container
    baseImage: thanosio/thanos
    version: main-2022-09-22-ff9ee9ac

Deploy the query component

[root@k8s-master thanos]# cat thanos-query.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: thanos-query
  namespace: monitoring
  labels:
    app: thanos-query
spec:
  selector:
    matchLabels:
      app: thanos-query
  template:
    metadata:
      labels:
        app: thanos-query
    spec:
      containers:
      - name: thanos
        image: thanosio/thanos:main-2022-09-22-ff9ee9ac
        args:
        - query
        - --log.level=debug
        - --query.replica-label=prometheus_replica # the replica label configured by prometheus-operator is prometheus_replica
        # Store API endpoint, given here as a static address rather than DNS SRV discovery.
        - --store=192.168.44.151:30901
        ports:
        - name: http
          containerPort: 10902
        - name: grpc
          containerPort: 10901
        livenessProbe:
          httpGet:
            path: /-/healthy
            port: http
          initialDelaySeconds: 10
        readinessProbe:
          httpGet:
            path: /-/healthy
            port: http
          initialDelaySeconds: 15
---
apiVersion: v1
kind: Service
metadata:
  name: thanos-query
  namespace: monitoring
  labels:
    app: thanos-query
spec:
  ports:
  - port: 9090
    targetPort: http
    name: http
    nodePort: 30909
  selector:
    app: thanos-query
  type: NodePort

Open the thanos-query UI

Integrating grafana with keycloak for unified authentication management

bash-5.0$ egrep -v '^$|^#' /etc/grafana/grafana.ini

;app_mode = production

;instance_name = ${HOSTNAME}

[paths]

;data = /var/lib/grafana

;temp_data_lifetime = 24h

;logs = /var/log/grafana

;plugins = /var/lib/grafana/plugins

;provisioning = conf/provisioning

[server]

;protocol = http

;http_addr =

;http_port = 3000

;domain = localhost

;enforce_domain = false

;root_url = %(protocol)s://%(domain)s:%(http_port)s/

;serve_from_sub_path = false

;router_logging = false

;static_root_path = public

;enable_gzip = false

;cert_file =

;cert_key =

;socket =

[database]

;type = sqlite3

;host = 127.0.0.1:3306

;name = grafana

;user = root

;password =

;url =

;ssl_mode = disable

;ca_cert_path =

;client_key_path =

;client_cert_path =

;server_cert_name =

;path = grafana.db

;max_idle_conn = 2

;max_open_conn =

;conn_max_lifetime = 14400

;log_queries =

;cache_mode = private

[remote_cache]

;type = database

;connstr =

[dataproxy]

;logging = false

;timeout = 30

;send_user_header = false

[analytics]

;reporting_enabled = true

;check_for_updates = true

;google_analytics_ua_id =

;google_tag_manager_id =

[security]

;disable_initial_admin_creation = false

;admin_user = admin

;admin_password = admin

;secret_key = SW2YcwTIb9zpOOhoPsMm

;disable_gravatar = false

;data_source_proxy_whitelist =

;disable_brute_force_login_protection = false

;cookie_secure = false

;cookie_samesite = lax

;allow_embedding = false

;strict_transport_security = false

;strict_transport_security_max_age_seconds = 86400

;strict_transport_security_preload = false

;strict_transport_security_subdomains = false

;x_content_type_options = false

;x_xss_protection = false

[snapshots]

;external_enabled = true

;external_snapshot_url = https://snapshots-origin.raintank.io

;external_snapshot_name = Publish to snapshot.raintank.io

;public_mode = false

;snapshot_remove_expired = true

[dashboards]

;versions_to_keep = 20

[users]

;allow_sign_up = true

;allow_org_create = true

;auto_assign_org = true

;auto_assign_org_id = 1

;auto_assign_org_role = Viewer

;verify_email_enabled = false

;login_hint = email or username

;password_hint = password

;default_theme = dark

;external_manage_link_url =

;external_manage_link_name =

;external_manage_info =

;viewers_can_edit = false

;editors_can_admin = false

[auth]

;login_cookie_name = grafana_session

;login_maximum_inactive_lifetime_days = 7

;login_maximum_lifetime_days = 30

;token_rotation_interval_minutes = 10

;disable_login_form = false

;disable_signout_menu = false

;signout_redirect_url =

;oauth_auto_login = false

;api_key_max_seconds_to_live = -1

[auth.anonymous]

;enabled = false

;org_name = Main Org.

;org_role = Viewer

[auth.github]

;enabled = false

;allow_sign_up = true

;client_id = some_id

;client_secret = some_secret

;scopes = user:email,read:org

;auth_url = https://github.com/login/oauth/authorize

;token_url = https://github.com/login/oauth/access_token

;api_url = https://api.github.com/user

;allowed_domains =

;team_ids =

;allowed_organizations =

[auth.gitlab]

;enabled = false

;allow_sign_up = true

;client_id = some_id

;client_secret = some_secret

;scopes = api

;auth_url = https://gitlab.com/oauth/authorize

;token_url = https://gitlab.com/oauth/token

;api_url = https://gitlab.com/api/v4

;allowed_domains =

;allowed_groups =

[auth.google]

;enabled = false

;allow_sign_up = true

;client_id = some_client_id

;client_secret = some_client_secret

;scopes = https://www.googleapis.com/auth/userinfo.profile https://www.googleapis.com/auth/userinfo.email

;auth_url = https://accounts.google.com/o/oauth2/auth

;token_url = https://accounts.google.com/o/oauth2/token

;api_url = https://www.googleapis.com/oauth2/v1/userinfo

;allowed_domains =

;hosted_domain =

[auth.grafana_com]

;enabled = false

;allow_sign_up = true

;client_id = some_id

;client_secret = some_secret

;scopes = user:email

;allowed_organizations =

[auth.generic_oauth]

;enabled = false

;name = OAuth

;allow_sign_up = true

;client_id = some_id

;client_secret = some_secret

;scopes = user:email,read:org

;email_attribute_name = email:primary

;email_attribute_path =

;auth_url = https://foo.bar/login/oauth/authorize

;token_url = https://foo.bar/login/oauth/access_token

;api_url = https://foo.bar/user

;allowed_domains =

;team_ids =

;allowed_organizations =

;role_attribute_path =

;tls_skip_verify_insecure = false

;tls_client_cert =

;tls_client_key =

;tls_client_ca =

[auth.saml] # Enterprise only

;enabled = false

;certificate =

;certificate_path =

;private_key =

;# Path to the private key. Used to decrypt assertions from the IdP

;private_key_path =

;idp_metadata =

;idp_metadata_path =

;idp_metadata_url =

;max_issue_delay = 90s

;metadata_valid_duration = 48h

;assertion_attribute_name = displayName

;assertion_attribute_login = mail

;assertion_attribute_email = mail

[auth.basic]

;enabled = true

[auth.proxy]

;enabled = false

;header_name = X-WEBAUTH-USER

;header_property = username

;auto_sign_up = true

;sync_ttl = 60

;whitelist = 192.168.1.1, 192.168.2.1

;headers = Email:X-User-Email, Name:X-User-Name

;enable_login_token = false

[auth.ldap]

;enabled = false

;config_file = /etc/grafana/ldap.toml

;allow_sign_up = true

;sync_cron = "0 0 1 * * *"

;active_sync_enabled = true

[smtp]

;enabled = false

;host = localhost:25

;user =

;password =

;cert_file =

;key_file =

;skip_verify = false

;from_address = admin@grafana.localhost

;from_name = Grafana

;ehlo_identity = dashboard.example.com

[emails]

;welcome_email_on_sign_up = false

;templates_pattern = emails/*.html

[log]

;mode = console file

;level = info

;filters =

[log.console]

;level =

;format = console

[log.file]

;level =

;format = text

;log_rotate = true

;max_lines = 1000000

;max_size_shift = 28

;daily_rotate = true

;max_days = 7

[log.syslog]

;level =

;format = text

;network =

;address =

;facility =

;tag =

[quota]

; enabled = false

; org_user = 10

; org_dashboard = 100

; org_data_source = 10

; org_api_key = 10

; user_org = 10

; global_user = -1

; global_org = -1

; global_dashboard = -1

; global_api_key = -1

; global_session = -1

[alerting]

;enabled = true

;execute_alerts = true

;error_or_timeout = alerting

;nodata_or_nullvalues = no_data

;concurrent_render_limit = 5

;evaluation_timeout_seconds = 30

;notification_timeout_seconds = 30

;max_attempts = 3

;min_interval_seconds = 1

[explore]

;enabled = true

[metrics]

;enabled = true

;interval_seconds = 10

;disable_total_stats = false

; basic_auth_username =

; basic_auth_password =

[metrics.graphite]

;address =

;prefix = prod.grafana.%(instance_name)s.

[grafana_com]

;url = https://grafana.com

[tracing.jaeger]

;address = localhost:6831

;always_included_tag = tag1:value1

;sampler_type = const

;sampler_param = 1

;zipkin_propagation = false

;disable_shared_zipkin_spans = false

[external_image_storage]

;provider =

[external_image_storage.s3]

;endpoint =

;path_style_access =

;bucket =

;region =

;path =

;access_key =

;secret_key =

[external_image_storage.webdav]

;url =

;public_url =

;username =

;password =

[external_image_storage.gcs]

;key_file =

;bucket =

;path =

[external_image_storage.azure_blob]

;account_name =

;account_key =

;container_name =

[external_image_storage.local]

[rendering]

;server_url =

;callback_url =

[panels]

;disable_sanitize_html = false

[plugins]

;enable_alpha = false

;app_tls_skip_verify_insecure = false

[enterprise]

;license_path =

[feature_toggles]

;enable =
