
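A while back at my company, some developers were evaluating micro-frontends and asked me to write a pipeline for them. A single frontend project contained several sub-components, and the build had to go into each component's directory and run npm there. Every time a sub-component was added, I had to rewrite the pipeline to add a build for it, which was a real hassle.

At first I simply entered each directory with dir, one at a time, and ran the build there, roughly like this: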

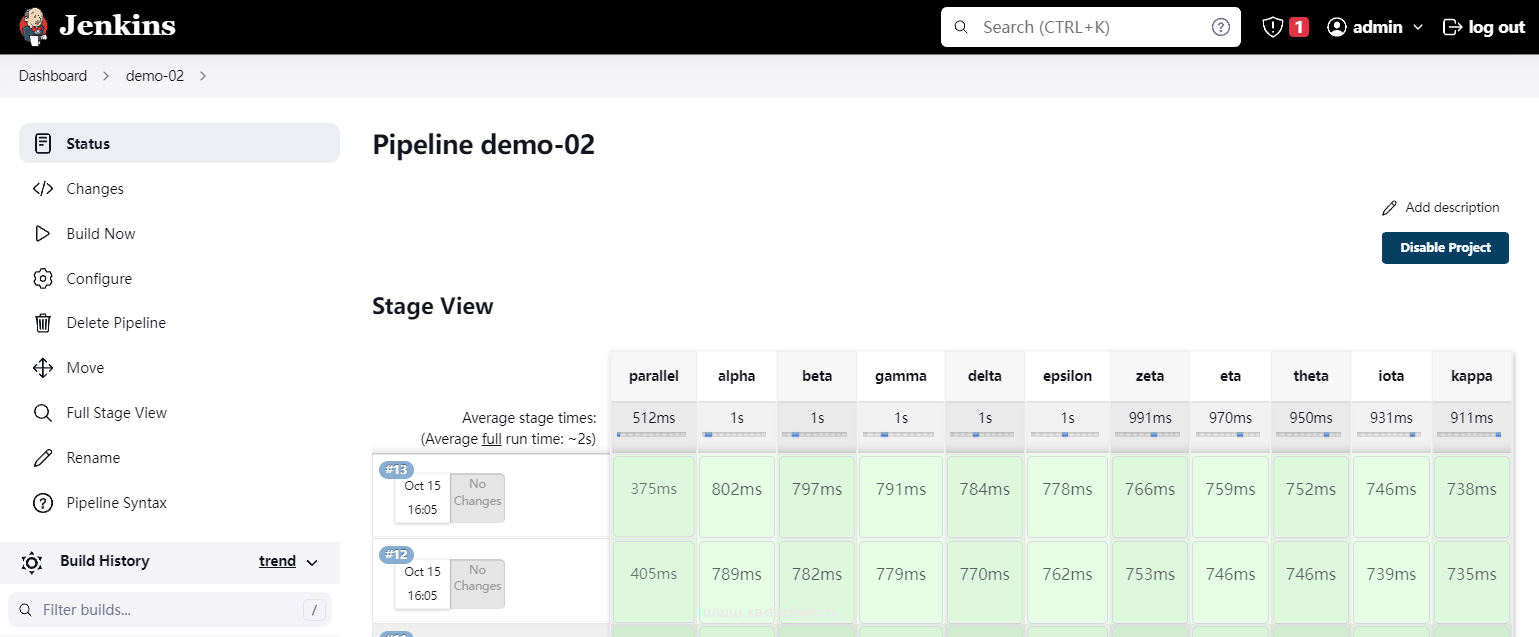

My first idea was to use parallel tasks to handle the builds of multiple components:

    pipeline {
        agent any
        stages {
            stage('parallel') {
                steps {
                    script {
                        // Map of branch name -> closure, as expected by the parallel step
                        def tasks = [:]
                        def componentlist = ["alpha", "beta", "gamma", "delta", "epsilon", "zeta", "eta", "theta", "iota", "kappa"]
                        for (component in componentlist) {
                            // Copy the loop variable so each closure keeps its own component name
                            def component_inside = "${component}"
                            tasks["${component_inside}"] = {
                                stage("${component_inside}") {
                                    echo "${component_inside}"
                                }
                            }
                        }
                        parallel tasks
                    }
                }
            }
        }
    }
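The echo in the task body is only a placeholder. As a minimal sketch of what a real task body might look like, assuming each component lives in a directory with the same name as the component and that `npm install` followed by `npm run build` is the build (neither is confirmed by the original pipeline), each closure could enter its own directory with dir before running npm:

```groovy
pipeline {
    agent any
    stages {
        stage('parallel') {
            steps {
                script {
                    def tasks = [:]
                    def componentlist = ["alpha", "beta"]
                    for (component in componentlist) {
                        // Copy the loop variable so each closure keeps its own value
                        def name = component
                        tasks[name] = {
                            stage(name) {
                                // Assumption: the component's directory is named after the component
                                dir(name) {
                                    sh 'npm install'    // assumed install command
                                    sh 'npm run build'  // assumed script name in package.json
                                }
                            }
                        }
                    }
                    parallel tasks
                }
            }
        }
    }
}
```

The local copy matters because the closures only run later, when parallel is invoked. Without it, every closure may end up seeing whatever value the shared loop variable holds at that point.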

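With the pipeline above, stages are generated dynamically from a given list. But someone still has to maintain componentlist by hand, which is still a hassle. After some searching, I found that the list can be read from a file with readFile, and that file can be committed to the code repository by the developers. That way I never have to modify the pipeline; the component list is entirely up to the developers.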

    pipeline {
        agent any
        stages {
            stage('checkout') {
                steps {
                    // Repository URL and branch are elided here
                    git url: 'xxxx@xxxxx', branch: 'xxxx'
                }
            }
            stage('parallel') {
                steps {
                    script {
                        def tasks = [:]
                        //def componentlist = ["alpha", "beta", "gamma", "delta", "epsilon", "zeta", "eta", "theta", "iota", "kappa"]
                        // Read the comma-separated list that the developers commit to the repository
                        def componentlist = readFile('componentlist').replace("\n", "").split(',')
                        for (component in componentlist) {
                            def component_inside = "${component}"
                            tasks["${component_inside}"] = {
                                stage("${component_inside}") {
                                    echo "${component_inside}"
                                }
                            }
                        }
                        parallel tasks
                    }
                }
            }
        }
    }

The componentlist file looks like this:

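    cat componentlist
    alpha,beta,gamma,delta

After a while, another problem came up. Because the components are built concurrently, my Jenkins server could not keep up as the number of components grew. The Jenkins service was configured to run as many tasks as there are CPU cores, but this one job now launched many builds at once. I needed to limit it to building 2 components at a time, otherwise the server could not cope.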

    pipeline {
        agent any
        stages {
            stage('parallel') {
                steps {
                    script {
                        def componentlist = ["alpha", "beta", "gamma", "delta", "epsilon", "zeta", "eta", "theta", "iota", "kappa"]
                        // Split the list into batches of 2; each batch runs in parallel, batches run one after another
                        def parallelBatches = componentlist.collate(2)
                        for (parallelBatch in parallelBatches) {
                            def tasks = [:]
                            for (component in parallelBatch) {
                                def component_inside = "${component}"
                                tasks["${component_inside}"] = {
                                    stage("${component_inside}") {
                                        echo "${component_inside}"
                                        sh "sleep 5"
                                    }
                                }
                            }
                            // Blocks until every task in the current batch has finished
                            parallel tasks
                        }
                    }
                }
            }
        }
    }
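The batched pipeline uses a hard-coded list again. To combine it with the file-based list, the array returned by split has to become a List before collate can be called on it. A minimal sketch in plain Groovy, where the helper name `toBatches` is hypothetical and the input string stands in for what readFile would return:

```groovy
// Hypothetical helper: turn the raw file content into batches of `size` components.
def toBatches(String raw, int size) {
    // split() returns a String[]; collect() yields a List with each entry trimmed
    def components = raw.trim().split(',').collect { it.trim() }
    // Drop empty entries such as those caused by a trailing comma
    components = components.findAll { it }
    // collate() keeps a shorter final batch when the list does not divide evenly
    return components.collate(size)
}

def batches = toBatches("alpha,beta,gamma,delta,epsilon\n", 2)
println batches
```

With five components and a batch size of 2, the last batch contains a single component. Note that batching is a coarse limit: each batch waits for its slowest build before the next batch starts, so a slot can sit idle while one long build finishes.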
